B is for Batching

In the second instalment of the Flexciton Tech Glossary Series, we're taking you on an insightful journey through the world of batching. Find out about the many complexities of batching, the existing methods of solving the problem and the wider solution space.

Welcome back to the Flexciton Tech Glossary Series: A Deep Dive into Semiconductor Technology and Innovation. Our second entry of the series is all about Batching. Let's get started!

A source of variability

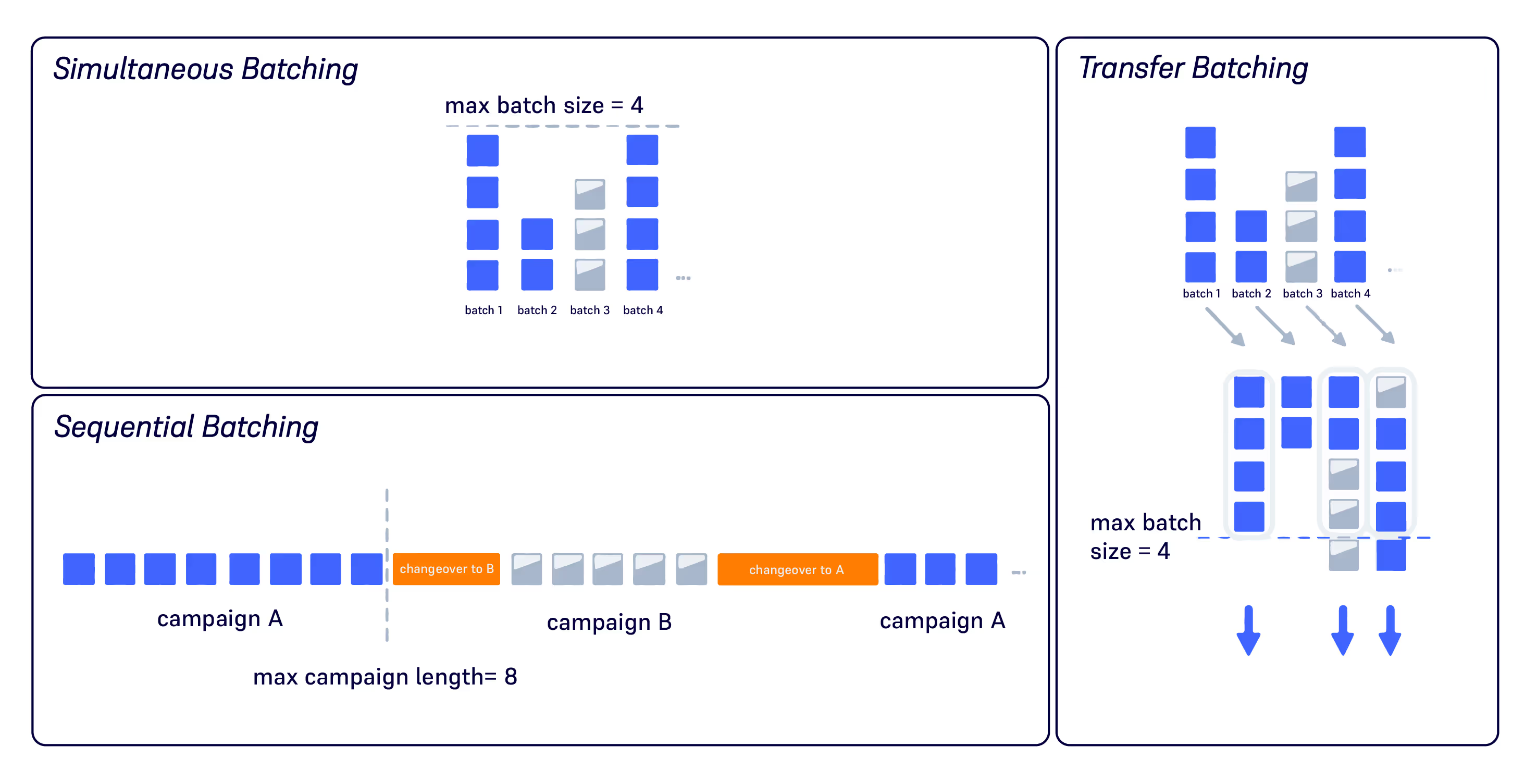

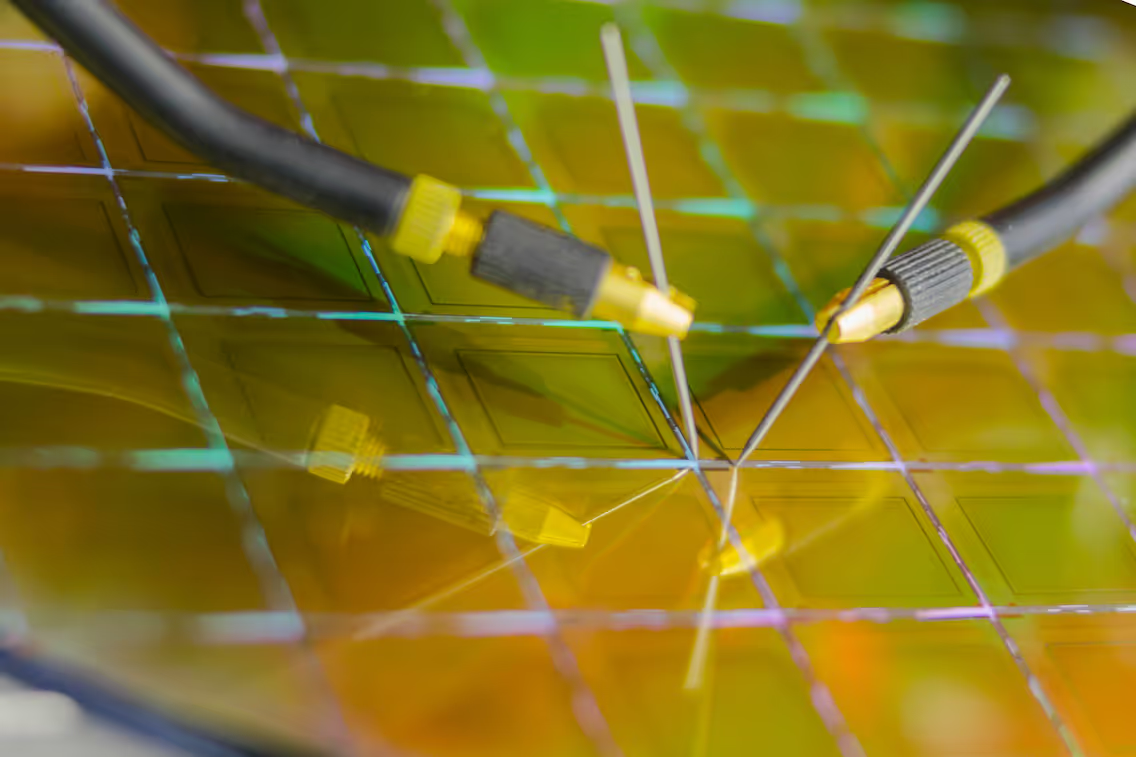

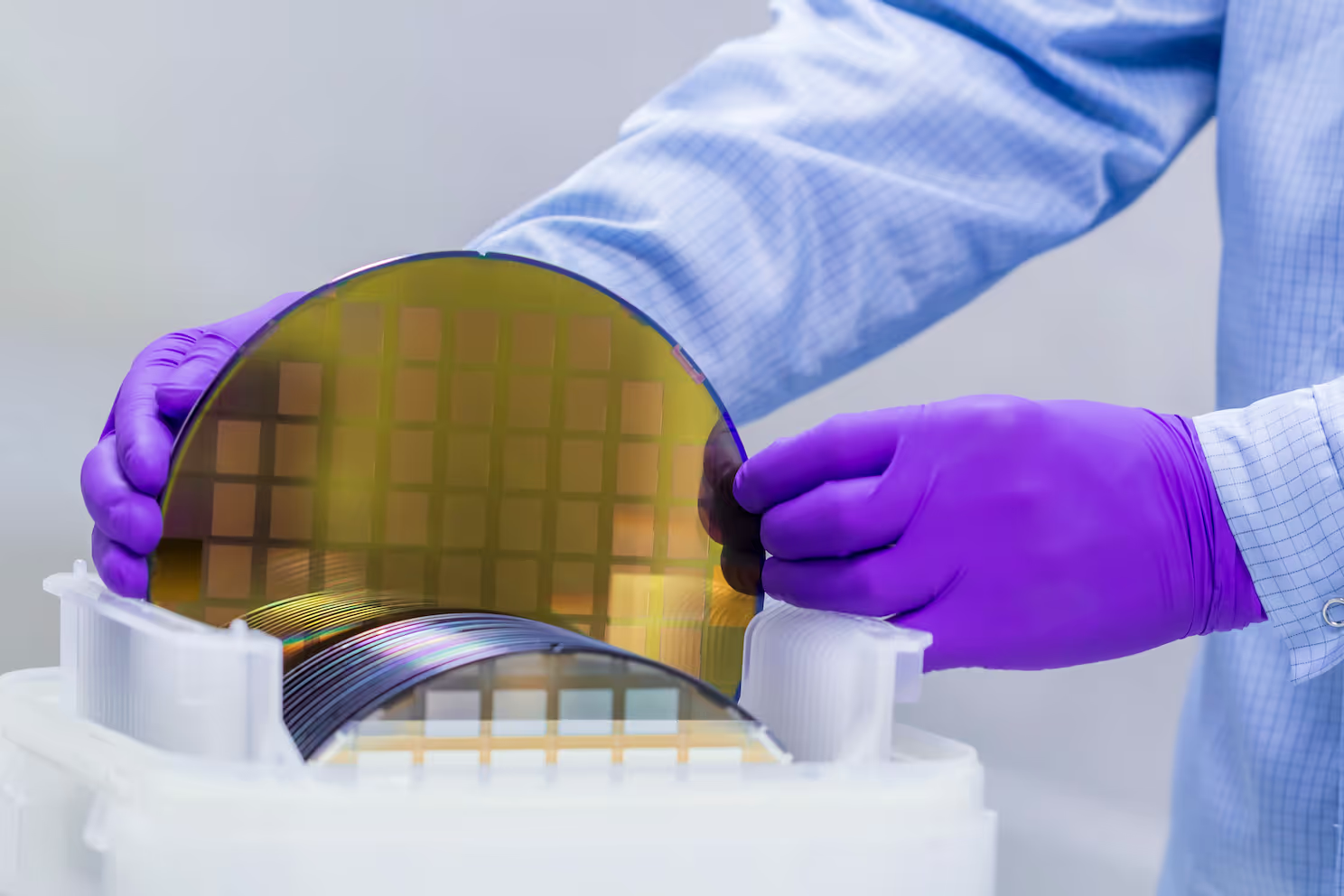

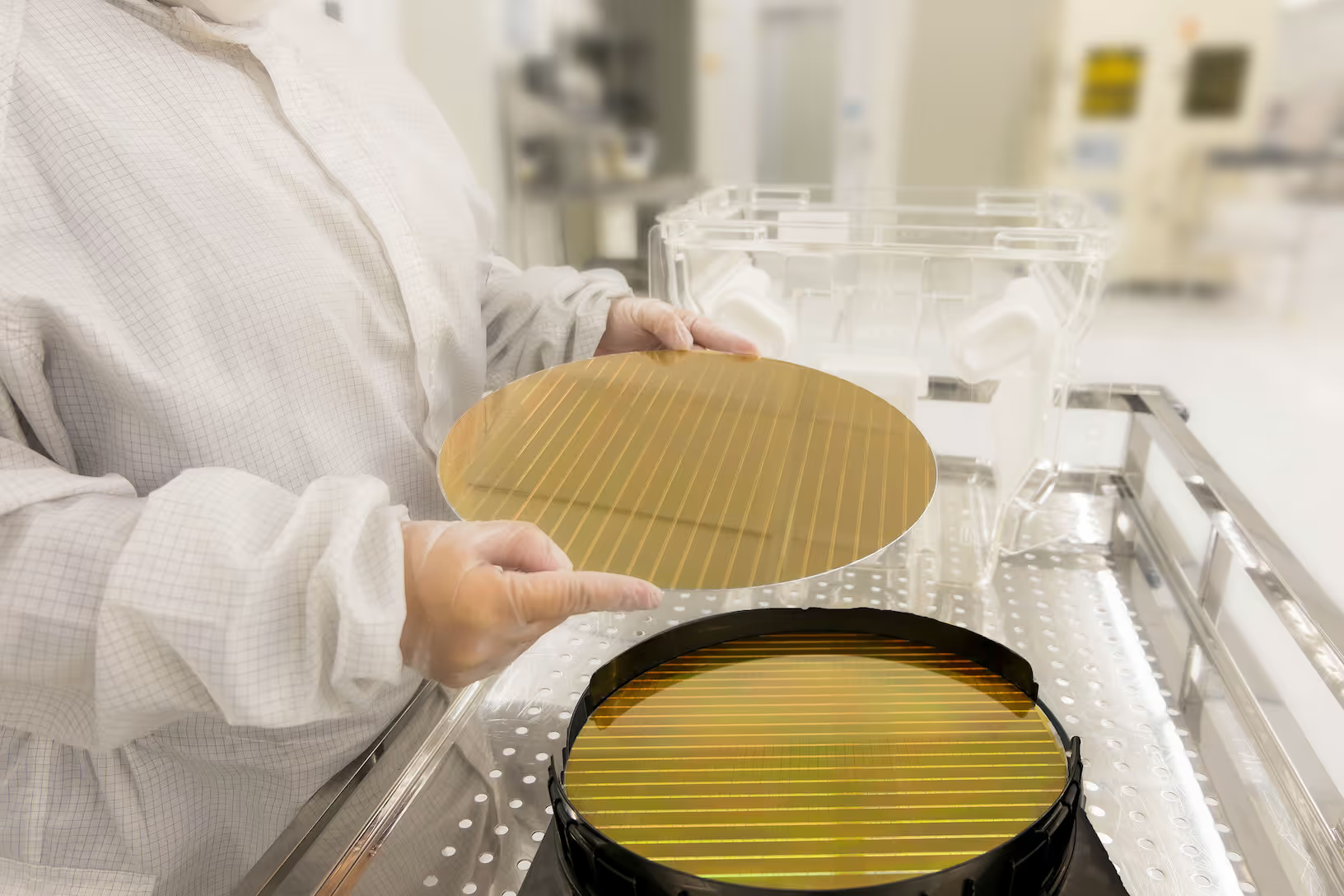

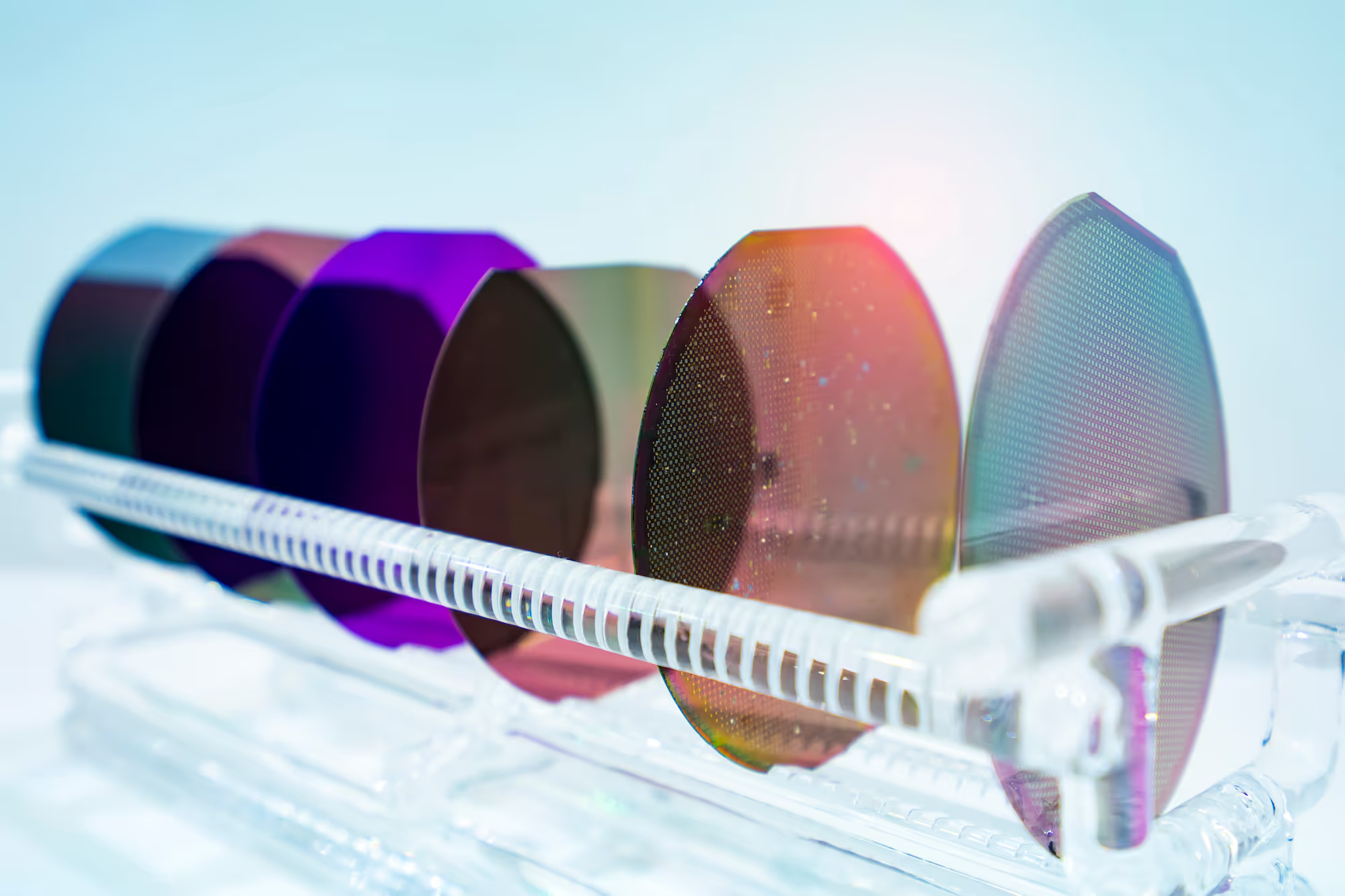

Let's begin with the basics: what exactly is a batch? In wafer fabrication, a wafer batch is a group of wafers that are processed (or transported) together. Efficiently forming batches is a common challenge in fabs. While logistics and processing both wrestle with this issue, this article focuses on batching for processing, which can be either simultaneous or sequential.

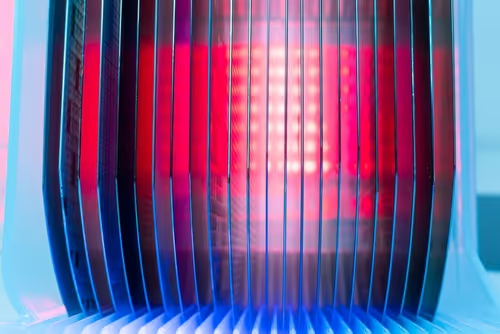

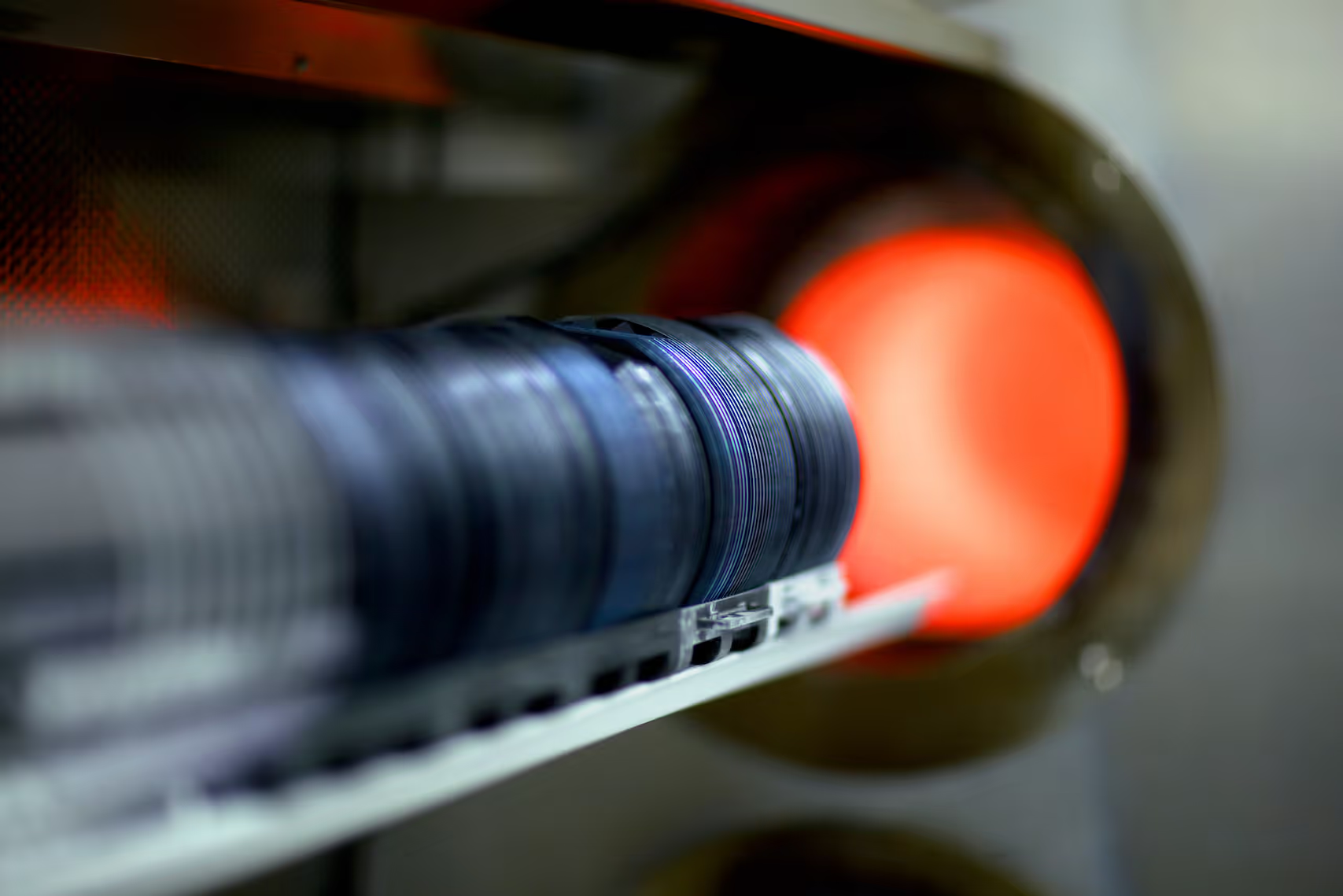

Simultaneous batching is when wafers are processed at the same time on the same machine. It is very much inherent to the entire industry, as most of the machines are designed for handling lots of 25 wafers. There are also process types – such as thermal processing (e.g. diffusion, oxidation & annealing), certain deposition processes, and wet processes (e.g. cleaning) – that benefit from running multiple lots in parallel. All of these processes get higher uniformity and machine efficiency from simultaneous batching.

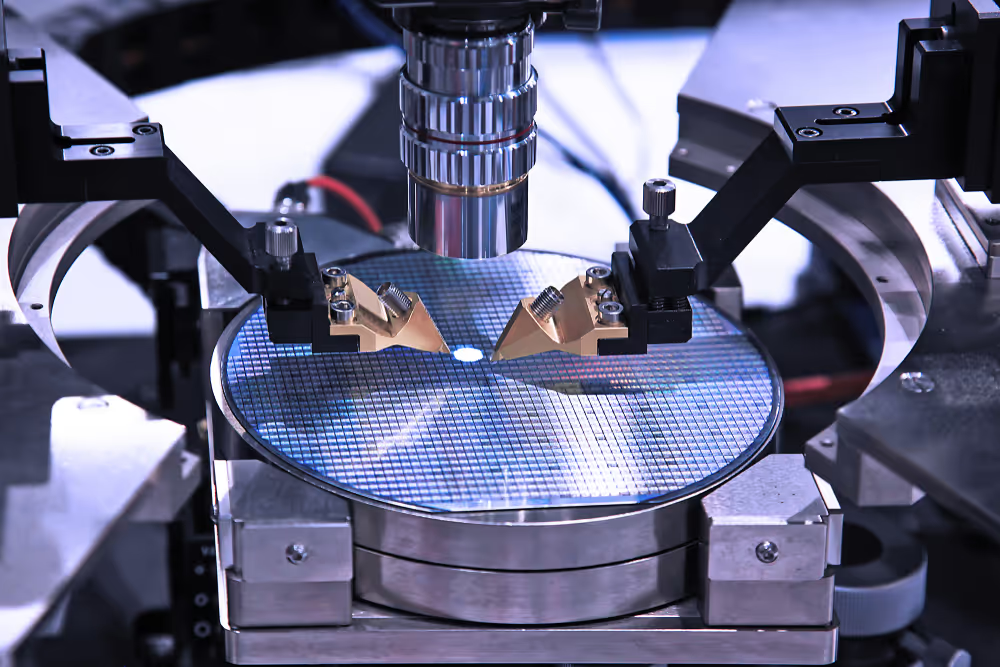

On the other hand, sequential batching refers to the practice of grouping lots or wafers for processing in a specific order to minimise setup changes on a machine. This method aims to maximise Overall Equipment Effectiveness (OEE) by reducing the frequency of setup adjustments needed when transitioning between different production runs. Examples in wafer fabrication include implant, photolithography (photo), and etch.
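To make the idea of sequential batching concrete, here is a minimal Python sketch that counts setup changes for a queue processed in arrival order and for the same queue regrouped into campaigns. The setup labels and the function names `count_setup_changes` and `group_by_setup` are hypothetical, chosen only for illustration; a real dispatcher would also weigh priorities and waiting times.

```python
def count_setup_changes(sequence):
    """Count how often the required setup differs between consecutive lots."""
    return sum(1 for prev, cur in zip(sequence, sequence[1:]) if prev != cur)


def group_by_setup(sequence):
    """Reorder lots into campaigns so lots sharing a setup run back to back.

    Setups keep the order in which they first appear in the queue.
    """
    first_seen = {}
    for position, setup in enumerate(sequence):
        first_seen.setdefault(setup, position)
    # sorted() is stable, so lots inside a campaign keep their arrival order.
    return sorted(sequence, key=lambda setup: first_seen[setup])


# Hypothetical queue: the setup each waiting lot needs, in arrival order.
fifo_queue = ["A", "B", "A", "C", "B", "A"]
campaigns = group_by_setup(fifo_queue)

print(count_setup_changes(fifo_queue))  # 5
print(campaigns)                        # ['A', 'A', 'A', 'B', 'B', 'C']
print(count_setup_changes(campaigns))   # 2
```

Grouping cuts the setup changes from five to two, but the single lot needing setup C now runs last, which is exactly the waiting cost that sequential batching has to balance.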

Essentially, the entire process flow in wafer manufacturing has to deal with batching processes. To give a rough idea: a typical complementary metal-oxide-semiconductor (CMOS) architecture in the front-end of the line involves batching in up to 70% of its value-added steps. In a recent FabTime poll on the top contributors to cycle time, the community placed batching at number 5[1], behind tool downs, tool utilisation, holds, and one-of-a-kind tools. Batching creates lot departures in bursts, and hence it inherently causes variability in arrivals downstream. Factory Physics states that:

“In a line where releases are independent of completions, variability early in a routing increases cycle time more than equivalent variability later in the routing.” [2]

Successfully controlling this source of variability will inevitably result in smoother running down the line. However, trying to reduce variability in arrival rates downstream can lead to smaller batch sizes or shorter campaign lengths, affecting the effectiveness of the batching machines themselves.

The many complexities of batching

In wafer fabs, and even more so in those with high product mix, batching is particularly complicated. As described in Factory Physics:

“In simultaneous batching, the basic trade-off is between effective capacity utilisation, for which we want large batches, and minimal wait to batch time, for which we want small batches.” [2]

For sequential batching, changing over to a different setup of the machine will cause the new arriving lots to wait until the required setup is available again.
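The simultaneous batching trade-off quoted above can be illustrated with a back-of-envelope calculation. The sketch below assumes that lots arrive evenly spaced at a fixed rate and that a batch takes the same time to process whatever its size; the function name and all numbers are illustrative assumptions, not data from a real fab.

```python
def simultaneous_batch_tradeoff(arrival_rate, process_time, batch_size):
    """Return (utilisation, average wait-to-batch time) for a fixed batch size.

    Assumptions (illustrative only): lots arrive evenly spaced at
    `arrival_rate` lots per hour, and every batch takes `process_time`
    hours regardless of how many lots it holds.
    """
    # Batches are needed arrival_rate / batch_size times per hour.
    utilisation = arrival_rate * process_time / batch_size
    # The first lot waits for (batch_size - 1) further arrivals and the last
    # lot waits for none, so the average is half of the first lot's wait.
    wait_to_batch = (batch_size - 1) / (2 * arrival_rate)
    return utilisation, wait_to_batch


for k in (2, 4, 6):
    u, w = simultaneous_batch_tradeoff(arrival_rate=2.0, process_time=1.5, batch_size=k)
    print(f"batch size {k}: utilisation {u:.2f}, wait to batch {w:.2f} h")
```

With these numbers, a batch size of 2 gives a utilisation above 1, meaning the machine cannot keep up with arrivals, while larger batches free up capacity at the price of a longer wait to form each batch.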

In both cases, we’re talking about a decision to wait or not to wait. The problem can easily be expressed mathematically if we’re dealing with single-product manufacturing and a small number of machines to schedule. However, the higher the product mix, the greater the number of possible setups and machines to consider. The problem complexity then increases, and the size of the solution space explodes. That’s not all: other factors can come into play and complicate things even more. Four examples are:

- Timelinks or queue time constraints: a maximum time in between processing steps

- High-priority lots: those that need to move faster through the line for any reason

- Downstream capacity constraints: machines that should not get starved at any cost

- Pattern matching: when the sequence of batching processes needs to match a predefined pattern, such as AABBB

Strategies to deal with batching

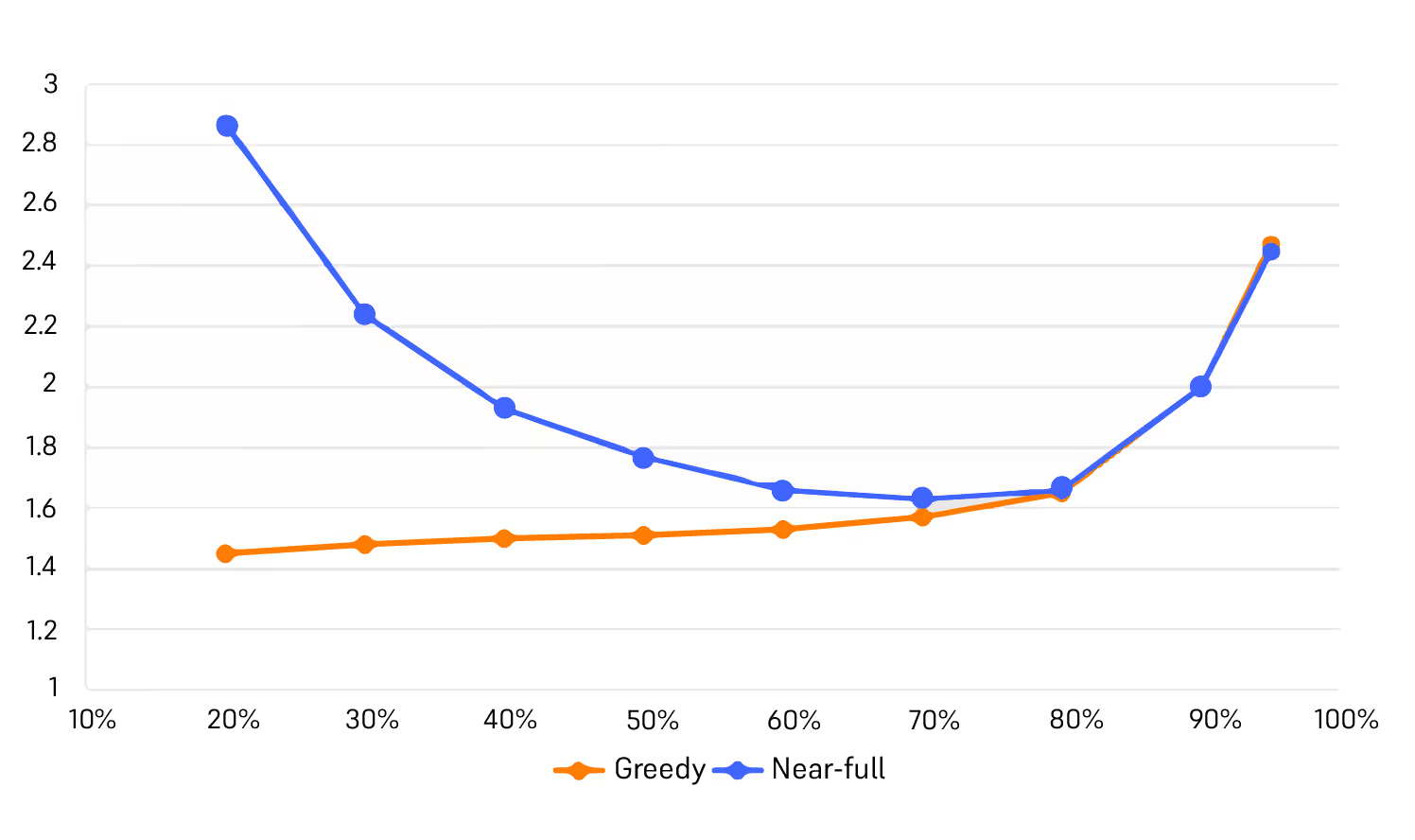

Historically, the industry has used policies for batching: common rules of thumb that can essentially be split into ‘greedy’ and ‘full batch’ policies[3]. Full batch policies require lots to wait until a full batch is available. They tend to favour effective capacity utilisation and cost factors, while they negatively impact cycle time and variability. Greedy policies don’t wait for full batches and favour cycle time. They assume that when utilisation levels are high, there will be enough WIP to make full batches anyway. For sequential batching on machines with setups, common rules include minimum and maximum campaign length, which have their own counterpart configurations for greedy vs full batching.[3]

The batch formation required in sequential or simultaneous batching involves far more complex decisions than loading a single lot into a tool, as it requires determining which lots can be grouped together. Compatibility between lots must be considered, and practitioners must also decide how long lots already on the rack should wait for new arrivals, all with the goal of maximising batch size. [4]

Industrial engineers face the challenge of deciding the best strategy to use for loading batch tools, such as those in the diffusion area. FabTime [4], [5] compares the impact of greedy versus full (or near-full) batch policies. The greedy heuristic reduces queuing time and variability but may not be cost-effective. Full batching is cost-effective but can be problematic when operational parameters change. For instance, if a tool's load decreases (it becomes less of a bottleneck), a full batch policy may increase cycle time and overall fab variability. On the other hand, a greedy approach might cause delays for individual lots arriving just after a batch is loaded, especially critical or hot lots with narrow timelink windows. Adapting these rules to changing fab conditions is essential.

In reality, these two policies are extreme settings in a spectrum of possible trade-offs between cost and cycle time (and sometimes quality). To address the limitations of both the greedy and full batch policies, a middle-ground approach exists. It involves establishing minimum batch size rules: waiting for a set duration, X minutes, until a minimum of Y lots are ready for batching. This solution usually lacks robustness because the right X and Y values depend on various operational parameters, the recipes involved, product mix, and WIP level. As this rule-based approach incorporates more parameters, it demands more manual adjustment whenever fab or tool settings change, inevitably leading to suboptimal tool performance.
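The greedy, full batch and middle-ground rules described above can be sketched as a single dispatch decision. This is a minimal Python illustration under our own assumptions: the function `should_start_batch` and its parameters are hypothetical, and the threshold rule is read here as "start once at least Y lots are waiting, or once the longest-waiting lot has waited X minutes".

```python
def should_start_batch(lots_waiting, longest_wait_min, capacity, policy,
                       min_lots=None, max_wait_min=None):
    """Decide whether to load the batch tool now under a simple rule.

    policy is "greedy", "full", or "threshold" (the X-minutes / Y-lots rule,
    with Y = min_lots and X = max_wait_min).
    """
    if lots_waiting == 0:
        return False  # nothing to process
    if policy == "greedy":
        return True  # never wait for more lots
    if policy == "full":
        return lots_waiting >= capacity  # wait until the batch is full
    if policy == "threshold":
        enough_lots = lots_waiting >= min_lots
        waited_long_enough = longest_wait_min >= max_wait_min
        return enough_lots or waited_long_enough
    raise ValueError(f"unknown policy: {policy}")


# Two lots have been on the rack for 10 minutes at a tool that holds four.
print(should_start_batch(2, 10, 4, "greedy"))                                 # True
print(should_start_batch(2, 10, 4, "full"))                                   # False
print(should_start_batch(2, 10, 4, "threshold", min_lots=3, max_wait_min=30)) # False
print(should_start_batch(2, 30, 4, "threshold", min_lots=3, max_wait_min=30)) # True
```

The sketch also shows where the fragility comes from: `min_lots` and `max_wait_min` are fixed numbers that someone has to retune whenever recipes, product mix or WIP levels shift.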

None of the above solutions takes timelink constraints into consideration. To address this, Sebastian Knopp[6] developed an advanced heuristic based on a disjunctive graph representation. The model's primary aim was to reduce the problem size while incorporating timelink constraints. The approach successfully tackled real-life industrial cases, although the size of those problems is unknown.

Over the years, the wafer manufacturing industry has come up with various methodologies to help deal with the situation above, but they give no guarantee that the eventual policy is anywhere near optimal and their rules tend to stay as-is without adjusting to new situations. At times, this rigidity has been addressed using simulation software, enabling factories to experiment with various batching policy configurations. However, this approach proved to be resource-intensive and repetitive, with no guarantee of achieving optimal results.

How optimization can help master the batching problem

Optimization is the key to avoiding the inherent rigidity and unresponsiveness of heuristic approaches, helping to effectively address the batching problem. An optimization-based solution takes into account all batching constraints, including timelinks, and determines the ideal balance between batching cost and cycle time, simultaneously optimizing both objectives.

It can decide how long to wait for the next lots, considering the accumulating queuing time of the current lots and the predicted time for new lots to arrive. No predetermined rules are in place; instead, the mathematical formulation encompasses all possible solutions. With a user-defined objective function featuring customised weights, an optimization solver autonomously identifies the optimal trade-off, eliminating the need for manual intervention.
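As a toy illustration of a weighted objective deciding how long to wait, the sketch below enumerates candidate start times for a single batch on a single tool and picks the cheapest one. It is a deliberately simplified stand-in, not a description of any production formulation: brute-force enumeration replaces a real solver, unused batch slots are a crude proxy for batching cost, and the function name, weights and arrival times are all hypothetical.

```python
def best_start_time(now, waiting_arrivals, predicted_arrivals, capacity,
                    weight_queue_time, weight_empty_slot):
    """Enumerate candidate start times for one batch and return the cheapest.

    cost = weight_queue_time * total queue time of the lots in the batch
         + weight_empty_slot * number of unused slots in the batch
    Returns a tuple (start_time, cost, lots_in_batch).
    """
    arrivals = sorted(waiting_arrivals + predicted_arrivals)
    # Only starting now or at a predicted arrival can change the batch content.
    candidates = [now] + sorted(t for t in predicted_arrivals if t > now)
    best = None
    for start in candidates:
        batch = [a for a in arrivals if a <= start][:capacity]
        queue_time = sum(start - a for a in batch)
        empty_slots = capacity - len(batch)
        cost = weight_queue_time * queue_time + weight_empty_slot * empty_slots
        if best is None or cost < best[1]:
            best = (start, cost, len(batch))
    return best


# Two lots arrived at t=0 and t=6; it is now t=10; more lots are predicted
# at t=14 and t=30. The tool holds four lots. Times are in minutes.
print(best_start_time(10, [0, 6], [14, 30], 4, 1, 10))  # (14, 32, 3)
print(best_start_time(10, [0, 6], [14, 30], 4, 1, 5))   # (10, 24, 2)
```

Changing only the weight on empty slots flips the decision from waiting four minutes for a third lot to starting immediately, which is the sense in which user-defined weights, not hand-written rules, set the trade-off.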

The challenge with traditional optimization-based solutions is the computational time when the size and complexity of the problem increase. In an article by Mason et al.[7], an optimization-based solution is compared to heuristics. While optimization outperforms heuristics in smaller-scale problems, its performance diminishes as problem size increases. Notably, these examples did not account for timelink constraints.

This tells us that the best practice is to break down the overall problem into smaller problems and use optimization to maximise the benefit. At Flexciton, advanced decomposition techniques are used to break down the problem, trading some loss of optimality relative to the original problem for tractability in the face of NP-hard complexity.[8]

Many practitioners aspire to attain optimal solutions for large-scale problems through traditional optimization techniques. However, our focus lies in achieving comprehensive solutions that blend heuristics, mathematical optimization, like mixed-integer linear programming (MILP), and data analytics. This innovative hybrid approach can vastly outperform existing scheduling methods reliant on basic heuristics and rule-based approaches.

Going deeper into the solution space

In a batching context, the solution space represents the numerous ways to create batches with given WIP. Even in a small wafer fab with a basic batching toolset, this space is immense, making it impossible for a human to find the best solution in a multi-product environment. Batching policies throughout history have been like different paths for exploring this space, helping us navigate complex batching mathematics. Just as the Hubble Space Telescope aided space exploration in the 20th century, cloud computing and artificial intelligence now provide unprecedented capabilities for exploring the mathematical world of the solution space, revealing possibilities beyond imagination.
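To get a feel for how quickly this space grows, the following Python sketch counts the ways to split a set of distinct lots into batches of limited size. It is a simplification under stated assumptions: it ignores lot compatibility (which shrinks the space) as well as batch sequencing, timing and machine assignment (which enlarge it), and the function name `count_batchings` is ours. It uses `math.comb`, available from Python 3.8.

```python
from functools import lru_cache
from math import comb


def count_batchings(num_lots, capacity):
    """Count ways to split `num_lots` distinct lots into unordered batches
    that each hold at most `capacity` lots."""

    @lru_cache(maxsize=None)
    def count(n):
        if n == 0:
            return 1
        # Fix one lot and choose its k - 1 batch-mates among the other
        # n - 1 lots, then split the remaining n - k lots recursively.
        return sum(comb(n - 1, k - 1) * count(n - k)
                   for k in range(1, min(capacity, n) + 1))

    return count(num_lots)


print(count_batchings(4, capacity=4))  # 15
print(count_batchings(5, capacity=4))  # 51

# Growth for larger WIP levels at a four-lot tool.
for n in (10, 20, 30):
    print(n, count_batchings(n, capacity=4))
```

Going from four lots to five already more than triples the count, and the numbers printed by the loop keep climbing steeply, which is why exhaustive manual reasoning stops being realistic long before typical WIP levels are reached.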

With the advent of these cutting-edge technologies, it is now a matter of finding a solution that satisfies the diverse needs of a fab, including cost, lead time, delivery, quality, flexibility, safety, and sustainability. These objectives often conflict, and ultimately, finding the optimal trade-off is a business decision, but the rise of cloud and AI will enable engineers to pinpoint a batching policy that is closest to the desired optimal trade-off point. Mathematical optimization is an example of a technique that historically hit its computational limits, and therefore the limits of its practical usefulness, in wafer manufacturing. However, mathematicians knew there was a whole world to explore, just like astronomers always knew there were exciting things beyond our galaxy. Now, with mathematicians having their own big telescope, wafer manufacturers are ready to set their new frontiers.

Authors

Ben Van Damme, Industrial Engineer and Business Consultant, Flexciton

Dennis Xenos, CTO and Cofounder, Flexciton

References

[1] FabTime Newsletter: Issue 24.03

[2] Wallace J. Hopp, Mark L. Spearman, Factory Physics: Third Edition. Waveland Press, 2011

[3] Lars Mönch, John W. Fowler, Scott J. Mason, 2013, Production Planning and Control for Semiconductor Wafer Fabrication Facilities, Modeling, Analysis, and Systems, Volume 52, Operations Research/Computer Science Interfaces Series

[5] FabTime Newsletter: Issue 9.03

[6] Sebastian Knopp, 2016, Complex Job-Shop Scheduling with Batching in Semiconductor Manufacturing, PhD thesis, l’École des Mines de Saint-Étienne

[7] S. J. Mason, J. W. Fowler, W. M. Carlyle & D. C. Montgomery, 2005, Heuristics for minimizing total weighted tardiness in complex job shops, International Journal of Production Research, Vol. 43, No. 10, 15 May 2005, 1943–1963

[8] S. Elaoud, R. Williamson, B. E. Sanli and D. Xenos, Multi-Objective Parallel Batch Scheduling In Wafer Fabs With Job Timelink Constraints, 2021 Winter Simulation Conference (WSC), 2021, pp. 1-11
