Could Reinforcement Learning Play a Part in the Future of Wafer Fab Scheduling? [Tech Paper Review]


A discipline of Machine Learning called Reinforcement Learning has received much attention recently as a novel way to design system controllers or to solve optimization problems. Today, Jannik Post – one of our optimization engineers – takes a look at the background of the methodology, before reviewing two recent publications which apply Reinforcement Learning to scheduling problems.

The exciting prospect of Reinforcement Learning

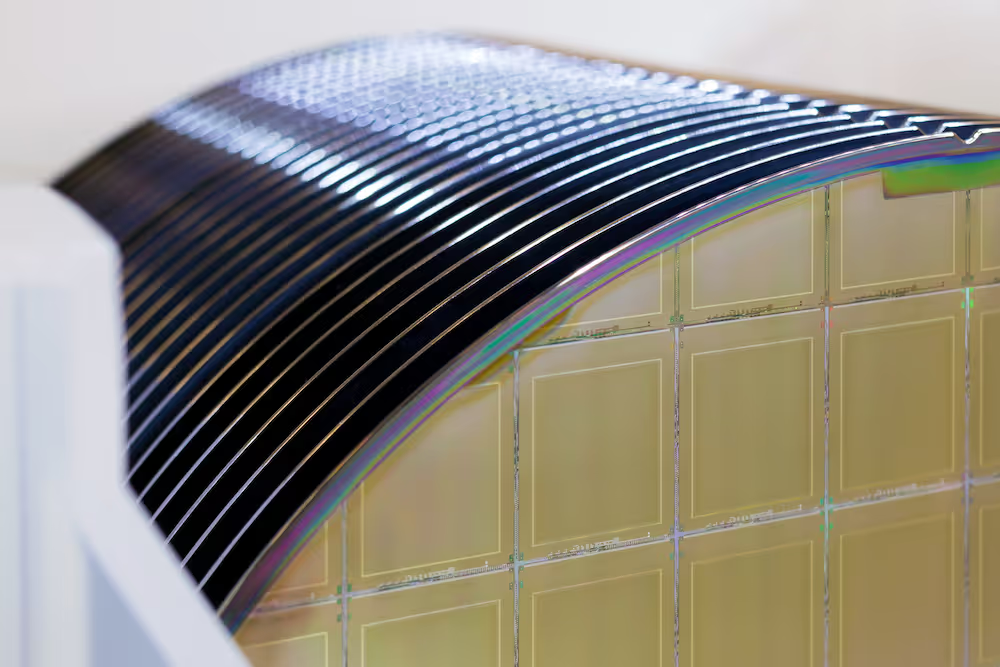

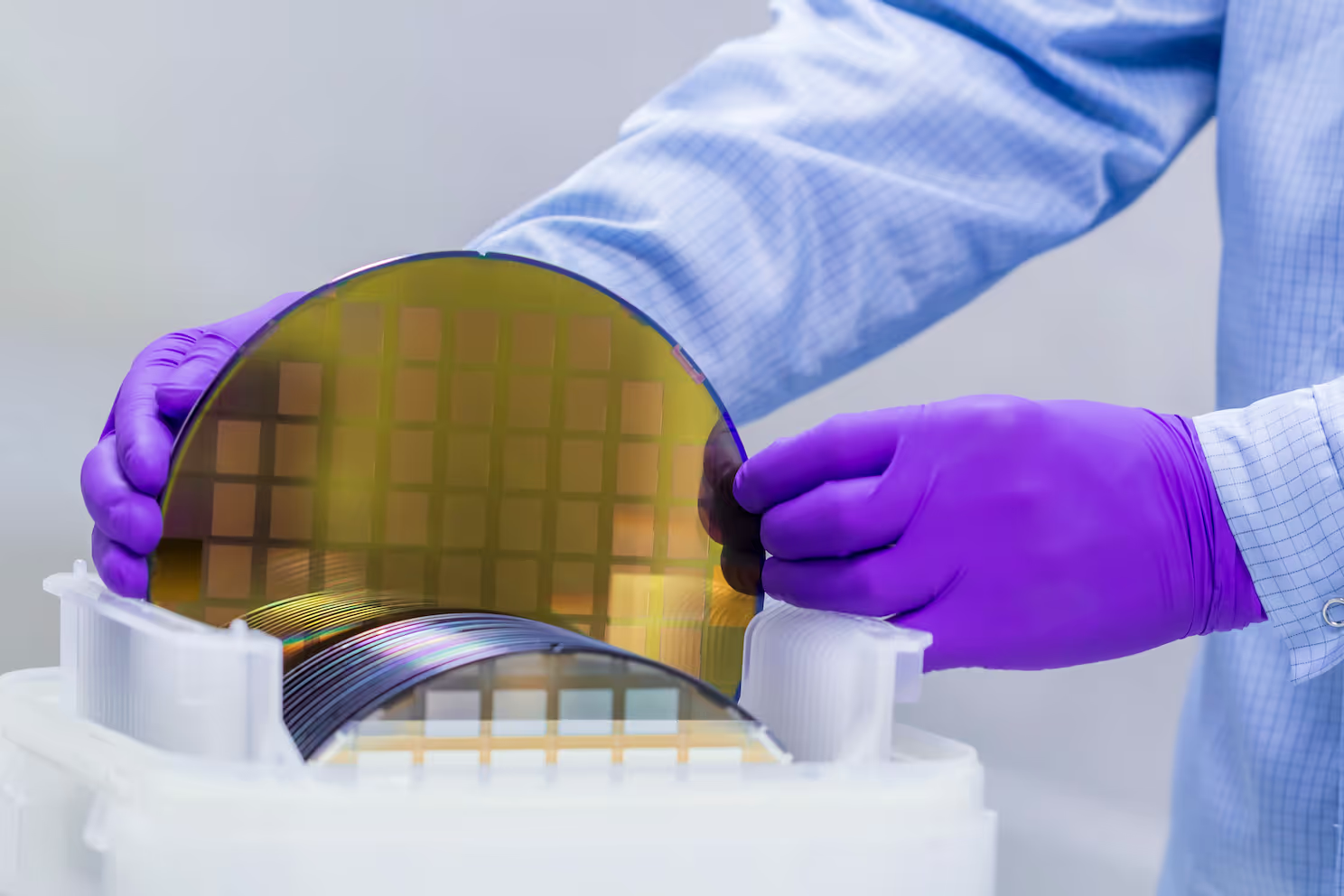

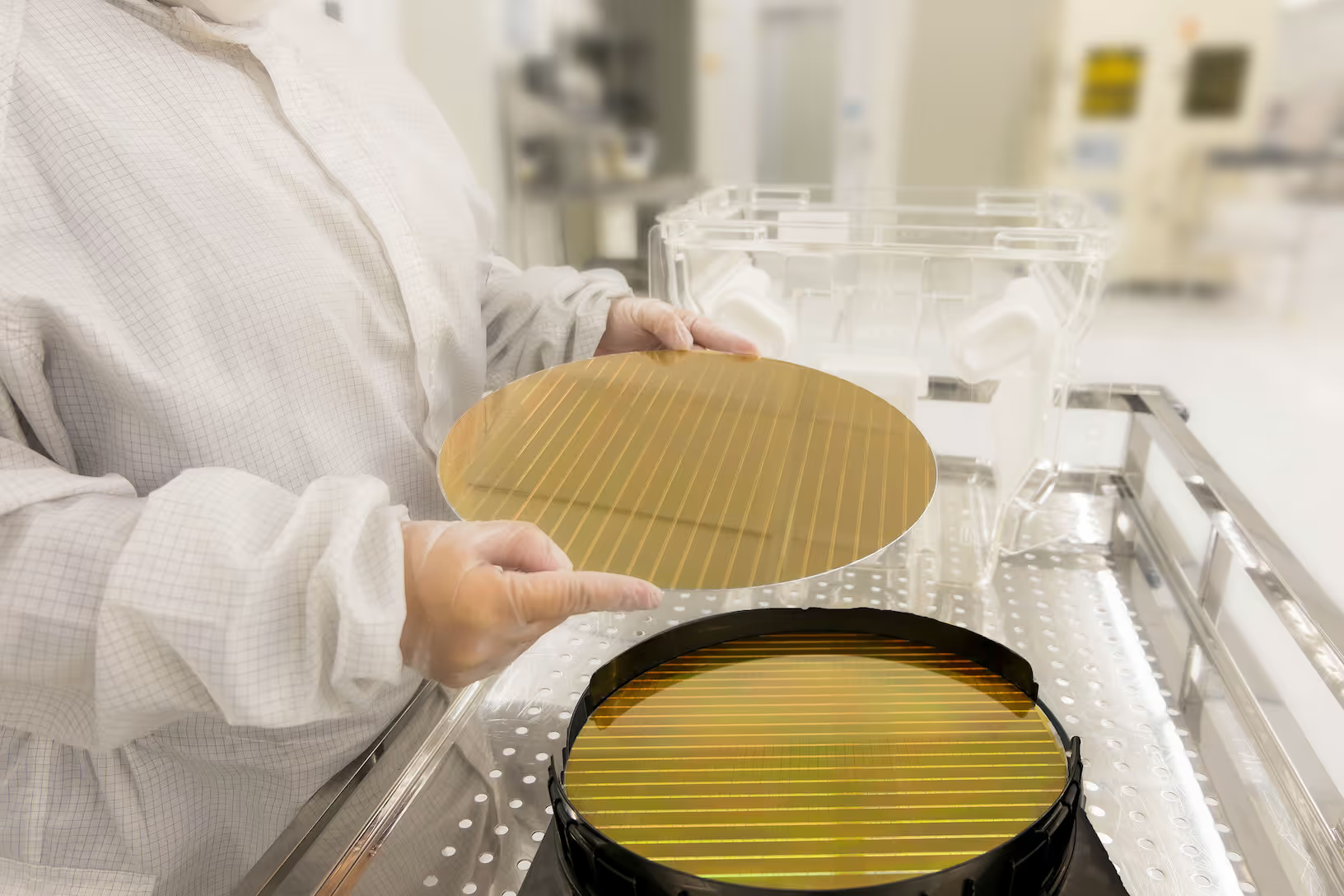

Traditionally, semiconductor fabs have relied on real-time dispatching systems to provide their operators with dispatch decisions. These systems can reflect the current state of the work in progress within seconds. Their rules may be based on heuristics or derived from domain knowledge, which makes designing them a lengthy process that requires deep knowledge of the fab processes. Maintaining this logic also requires continuous attention from subject matter experts. Moreover, these systems have very limited awareness of the global effects of decisions taken at toolset level, which makes them prone to suboptimal decisions.

More advanced approaches to wafer fab scheduling rely on optimization models, which can take many factors into account, e.g., the effect of dispatching decisions on bottleneck tools further downstream. In return, these approaches generally need somewhat longer computation times to reach high-quality schedules.

Reinforcement Learning (RL) promises to avoid the downsides of both conventional dispatching systems and optimization approaches. So, how does it work? At the heart of RL is an agent* that performs a task by making decisions or controlling a system. The goal is to teach this agent to make close-to-optimal decisions by letting it explore different options and giving it feedback on the quality of each decision: good decisions are rewarded, whilst suboptimal decisions are punished. Of course, this training is not performed in a live environment. Instead, the agent is trained on thousands of simulated scenarios so that it is prepared for as wide a range of situations as possible.
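To make this feedback loop concrete, here is a minimal sketch using tabular Q-learning, one classic RL algorithm chosen purely for brevity (it is not the method used in either paper reviewed below). The problem is hypothetical: a single tool, three lots with made-up processing times, and a reward equal to minus each lot's completion time, so the agent is punished for keeping lots waiting. The agent explores random options some of the time and otherwise exploits what it has learned.

```python
import random

# Hypothetical toy problem: one tool and three lots with made-up processing times.
PROC_TIMES = {"A": 5, "B": 2, "C": 8}

def step(remaining, clock, lot):
    """Process `lot` next. The reward is minus the lot's completion time,
    so the agent is punished for every unit of time lots spend waiting."""
    clock += PROC_TIMES[lot]
    return remaining - {lot}, clock, -clock

def train(episodes=5000, alpha=0.5, epsilon=0.2, seed=0):
    """Tabular Q-learning: q[(state, action)] estimates the total future reward."""
    rng = random.Random(seed)
    q = {}
    for _ in range(episodes):
        remaining, clock = frozenset(PROC_TIMES), 0    # state = lots still waiting
        while remaining:
            actions = sorted(remaining)
            if rng.random() < epsilon:                 # explore a random option
                lot = rng.choice(actions)
            else:                                      # exploit what was learned
                lot = max(actions, key=lambda a: q.get((remaining, a), 0.0))
            nxt, clock, reward = step(remaining, clock, lot)
            best_next = max((q.get((nxt, a), 0.0) for a in nxt), default=0.0)
            old = q.get((remaining, lot), 0.0)
            # Move the estimate towards the observed reward plus the best future value.
            q[(remaining, lot)] = old + alpha * (reward + best_next - old)
            remaining = nxt
    return q

def greedy_order(q):
    """Follow the learned policy without any exploration."""
    remaining, order = frozenset(PROC_TIMES), []
    while remaining:
        lot = max(sorted(remaining), key=lambda a: q.get((remaining, a), 0.0))
        order.append(lot)
        remaining = remaining - {lot}
    return order

print(greedy_order(train()))
```

After training, the greedy policy processes the shortest lot first (B, then A, then C). This is the known optimal rule for minimising total completion time on a single tool, and the agent arrives at it from rewards alone. Real fab problems have state spaces far too large for a lookup table, which is why the papers below use neural networks instead.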

Self-driving cars are a common example of Reinforcement Learning, but it is easy to see how RL could also be productive in other environments, such as dispatching in a wafer fab. In theory, it could be used to dispatch wafers to tools in a way that optimizes certain KPIs, such as throughput.

Reinforcement Learning for Job Shop Scheduling** problems

Numerous recent publications have explored the use of RL for production control. However, the approaches are still at an early stage and have so far been applied to problems much less complex than semiconductor scheduling. Nevertheless, they demonstrate the potential to play a part in future solution strategies. Two approaches stood out to us when reviewing the literature:

“Learning to Dispatch for Job Shop Scheduling via Deep Reinforcement Learning” (2020)

This paper by Zhang et al. describes how to design an agent that generalises its knowledge beyond the instances it was trained on, enabling it to handle unseen problem instances. This is achieved by training it on a large number of diverse scenarios. The model can flexibly handle instances of different sizes, e.g., with varying numbers of tools.
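One general way a policy can cope with instances of any size is to apply the same scoring function to every candidate operation and normalise the scores into probabilities, so the number of parameters never depends on the number of jobs or tools. The sketch below illustrates only that idea. The paper's policy is a trained neural network; here it is replaced by a hypothetical linear scorer, and the features (`proc_time`, `remaining_work`, `ops_left`) and weights are our own assumptions.

```python
import math
from dataclasses import dataclass

@dataclass
class Candidate:
    """Hypothetical features of one operation that could be dispatched now."""
    job: str
    proc_time: float       # processing time of this operation
    remaining_work: float  # processing time still left in its job
    ops_left: int          # operations still left in its job

# Hypothetical weights standing in for a trained policy network.
WEIGHTS = (-1.0, 0.5, 0.2)

def score(c, w=WEIGHTS):
    """The same scoring function is applied to every candidate."""
    return w[0] * c.proc_time + w[1] * c.remaining_work + w[2] * c.ops_left

def action_probabilities(candidates, w=WEIGHTS):
    """Softmax over however many candidates there are (any instance size)."""
    scores = [score(c, w) for c in candidates]
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]  # subtract max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]

def dispatch(candidates):
    """Greedy decision at deployment time: pick the most probable operation."""
    probs = action_probabilities(candidates)
    return candidates[probs.index(max(probs))].job

small = [Candidate("J1", 3, 10, 2), Candidate("J2", 1, 4, 1)]
large = [Candidate(f"J{i}", 1 + i % 7, 5 + i % 11, 1 + i % 4) for i in range(200)]
print(dispatch(small), len(action_probabilities(large)))
```

The same three weights score two candidates or two hundred; only the length of the probability vector changes.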

During training, the agent learns to exploit patterns common to many scenarios, so that it performs well on instances it has not encountered before. After training, the agent can be deployed to solve new instances. Because training is conducted separately from solving, a new instance can be solved in less than a minute. The authors compare performance on benchmark problems against optimization models and simple dispatching heuristics. The Reinforcement Learning approach yields a makespan (the total duration of the schedule from start to finish) 10% to 30% longer than that computed through optimization, but around 30% shorter than what simple heuristics achieve.
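To make the makespan comparison concrete, here is a minimal sketch of a simple dispatching heuristic on a hypothetical three-job, three-machine instance, together with the percentage gap to a reference schedule, computed as 100 × (C_heuristic − C_ref) / C_ref. The rule used (start the operation that can begin earliest, breaking ties by shortest processing time) is our own choice for illustration, not necessarily one of the heuristics benchmarked by Zhang et al.

```python
# Hypothetical 3-job, 3-machine instance: each job is an ordered list of
# (machine, processing_time) operations that must run in that order.
JOBS = [
    [(0, 3), (1, 2), (2, 2)],
    [(0, 2), (2, 1), (1, 4)],
    [(1, 4), (2, 3)],
]

def dispatch_schedule(jobs):
    """Greedy list scheduling: repeatedly start the operation that can begin
    earliest, breaking ties by shortest processing time. Returns the makespan."""
    next_op = [0] * len(jobs)      # index of each job's next operation
    job_ready = [0] * len(jobs)    # time each job's previous operation ends
    machine_ready = {}             # time each machine becomes free
    while any(i < len(job) for i, job in zip(next_op, jobs)):
        candidates = []
        for j, job in enumerate(jobs):
            if next_op[j] < len(job):
                m, p = job[next_op[j]]
                start = max(job_ready[j], machine_ready.get(m, 0))
                candidates.append((start, p, j, m))
        start, p, j, m = min(candidates)   # earliest start, then shortest time
        job_ready[j] = machine_ready[m] = start + p
        next_op[j] += 1
    # Makespan: completion time of the last operation of any job.
    return max(job_ready)

def gap_percent(makespan, reference):
    """How much longer a schedule is than a reference schedule, in percent."""
    return 100.0 * (makespan - reference) / reference

makespan = dispatch_schedule(JOBS)
print(makespan, round(gap_percent(makespan, 11), 1))  # 11 = optimal makespan here
```

On this small instance the heuristic produces a makespan of 12 against an optimum of 11, a gap of about 9%. The percentages quoted above are gaps of exactly this kind, averaged over benchmark instances.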

“A Reinforcement Learning Environment for Job-Shop Scheduling” (2021)

This paper by Tassel et al. sets out to design a reinforcement learning environment for job shop scheduling (JSS) problems as an alternative to optimization models. The objective in this approach is to reduce periods in the schedule where tools are idle, which the authors show correlates with minimising makespan. The agent acts as a dispatcher and is trained on one scenario at a time by simulating that same instance over and over. Since the goal is to generate an optimized solution for that instance, the best solution found during training is saved. Training time and solution time are therefore the same in this approach, and they are limited to 10 minutes to reflect production requirements. There is no intention to generalise the agent's behaviour to other instances.
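The structure of this approach can be sketched as follows, under several simplifying assumptions. The same instance is simulated repeatedly within a time budget (10 minutes, as in the paper), the reward of each dispatch decision is minus the idle time it leaves on its machine, and the best schedule seen so far is kept. A random dispatcher stands in for the learned policy and no learning update is shown; the paper's exact reward function and agent are not reproduced here, and the instance is hypothetical.

```python
import random
import time

# Hypothetical instance: each job is an ordered list of (machine, processing_time).
JOBS = [
    [(0, 3), (1, 2), (2, 2)],
    [(0, 2), (2, 1), (1, 4)],
    [(1, 4), (2, 3)],
]

def rollout(jobs, rng):
    """One episode on the single training instance. A random dispatcher stands
    in for the agent's policy. Returns (makespan, total_reward, schedule)."""
    n_machines = 1 + max(m for job in jobs for m, _ in job)
    next_op = [0] * len(jobs)
    job_ready = [0] * len(jobs)
    machine_ready = [0] * n_machines
    total_reward, schedule = 0, []
    while True:
        waiting = [j for j, job in enumerate(jobs) if next_op[j] < len(job)]
        if not waiting:
            break
        j = rng.choice(waiting)                   # the dispatching decision
        m, p = jobs[j][next_op[j]]
        start = max(job_ready[j], machine_ready[m])
        total_reward -= start - machine_ready[m]  # punish idle time left on machine m
        schedule.append((j, m, start, start + p))
        job_ready[j] = machine_ready[m] = start + p
        next_op[j] += 1
    return max(job_ready), total_reward, schedule

def solve(jobs, time_budget_s=600.0, max_episodes=2000, seed=0):
    """Repeatedly simulate the same instance and keep the best schedule found,
    so training time and solving time coincide."""
    rng = random.Random(seed)
    deadline = time.monotonic() + time_budget_s
    best, episodes = None, 0
    while episodes < max_episodes and time.monotonic() < deadline:
        result = rollout(jobs, rng)
        episodes += 1
        # A learning agent would update its policy from result[1] here.
        if best is None or result[0] < best[0]:
            best = result
    return best, episodes

(best_makespan, best_reward, best_schedule), n = solve(JOBS)
print(best_makespan, n)
```

The `max_episodes` cap is only there to keep the sketch quick; in the setting described above, the time budget alone would end training.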

The authors report makespans only 10% to 15% longer than the best-known solutions to job shop scheduling benchmarks, and only 6% to 7% longer than those of time-constrained optimization approaches.

Flexciton’s view

At Flexciton, we are excited about bringing cutting-edge optimized scheduling to wafer fabs worldwide. We are always exploring new ways to improve the service we provide our customers, so it is exciting to see emerging technologies that may help solve scheduling challenges in the semiconductor industry. The two publications reviewed in this article both present promising approaches that yield measurable improvements over simple dispatching heuristics, but still fall short of optimization.

Both approaches can cope with disruptions and stochasticity in the environment, such as machine downtimes. Both can also readily be applied to problems of different sizes. In each case the authors respected a practical requirement, frequent schedule updates (Tassel et al.) or quick decision support (Zhang et al.), and still achieved optimized solutions. It is conceivable that reinforcement learning could teach an agent to make smart decisions in the present that improve the future fab state and reduce bottlenecks.

However, as the use of RL for JSS problems is still novel, it has not yet reached the level of sophistication that the semiconductor industry requires. So far, the approaches can handle standard, small problem scenarios, but not flexible problems (where an operation can be processed on any of several eligible tools) or batching decisions. Many constraints must be obeyed in wafer fabs (e.g., timelinks and reticle availability), and there is no easy way to guarantee that the agent will adhere to them. The agent's objective must also be defined before training, so any later change to it requires retraining before new decisions can be obtained. This is less of a problem for Tassel et al., who train on each instance anyway, although their approach relies on a specially designed reward function that would not easily adapt to changing objectives.
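A common partial remedy in RL practice is action masking: before the agent chooses, infeasible actions are filtered out, so constraints that can be checked at decision time are guaranteed by construction. The sketch below masks lots whose reticle is unavailable. It does not solve the timelink problem, because whether a timelink is violated depends on decisions that have not been made yet. The `Lot` type, reticle names and agent scores are all hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Lot:
    name: str
    reticle: str  # hypothetical: reticle this lot needs at a lithography tool

def action_mask(lots, available_reticles):
    """True for lots that can legally be dispatched right now."""
    return [lot.reticle in available_reticles for lot in lots]

def masked_choice(lots, available_reticles, scores):
    """Pick the agent's highest-scoring lot among the feasible ones only;
    return None (keep the tool idle) if nothing is feasible."""
    mask = action_mask(lots, available_reticles)
    allowed = [lot for lot, ok in zip(lots, mask) if ok]
    if not allowed:
        return None
    return max(allowed, key=lambda lot: scores[lot.name])

lots = [Lot("L1", "R1"), Lot("L2", "R2")]
scores = {"L1": 0.9, "L2": 0.4}  # hypothetical agent preferences
print(masked_choice(lots, {"R2"}, scores))
```

Even though the agent prefers L1, only L2 can be dispatched while reticle R1 is unavailable, so the mask overrides the preference.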

Lastly, machine learning approaches can lead to situations where the rationale behind the agent's decisions is hidden in a black box. When insight into that rationale is limited, troubleshooting becomes difficult and trust in the solution is hard to establish.

Flexciton’s way

Using wafer fab scheduling to meet KPIs such as increased throughput and reduced cycle time is a challenge that requires a flexible, fast and robust solution. We have developed advanced hybrid optimization technology that combines the capabilities of optimization models with the speed of simple dispatching systems. When needed, the objective parameters and constraints can be adjusted without rewriting or redesigning large parts of the solution. It can therefore easily be adapted to optimize bottleneck toolsets, a whole fab or even multiple fabs.

Flexciton’s scheduling software produces an optimized schedule every five minutes and easily integrates with existing dispatching systems. The intuitive interface enables users to investigate decisions in a wider context, which helps during troubleshooting and increases trust in the dispatching decisions.

References

[1] Zhang, Song, Cao, Zhang, Tan, Xu (2020). “Learning to Dispatch for Job Shop Scheduling via Deep Reinforcement Learning.”

[2] Tassel, Gebser, Schekotihin (2021). “A Reinforcement Learning Environment for Job-Shop Scheduling.”

[3] Flexciton Blog. “Five reasons why your wafer fab should be using hybrid optimization scheduling.”

Notes

* – We use the term ‘agent’ to describe a piece of software that makes decisions and/or takes actions in its environment to achieve a given goal.

** – The job shop is a common scheduling problem in which multiple jobs are processed on several machines. Each job consists of a sequence of tasks, which must be performed in a given order, and each task must be processed on a specific machine.
