Blog & News

We post on our blog every couple of weeks, sharing insightful articles from our engineers as well as company news and our opinions on recent industry topics. Subscribe to our mailing list to get great content delivered straight to your inbox.

Meet Flexciton at the Smart Manufacturing Pavilion in Munich and Upcoming Webinars

Flexciton’s schedule for the next month features multiple opportunities to engage with our team and gather insights on manufacturing efficiency. We begin at SEMICON Europa 2025 in Munich, exhibiting at the Smart Manufacturing Pavilion and hosting two talks from CEO Jamie Potter. We then move online for two webinars, addressing backend scheduling challenges on November 27, and the path to frontend fab autonomy with Intel and KUKA on December 4.

SEMICON Europa 2025 | Munich, 18–21 November

Next week, our team heads to Munich for SEMICON Europa 2025, where Flexciton will exhibit within the Smart Manufacturing Pavilion (booth B1734) — a new space launched in collaboration with SEMI Europe and other industry leaders as part of the E2E Smart Manufacturing European Chapter.

Visitors can explore how AI, digital twins, predictive maintenance, and advanced optimisation are transforming semiconductor operations. Stop by for a conversation (and a hot drink) and see how Flexciton is helping fabs accelerate their journey to autonomy.

Our CEO Jamie Potter will also deliver two presentations during our time in Munich:

- Practical Path to Autonomy: How AI Planning & Scheduling Transforms Today’s Fabs

Wednesday 19 Nov | 15:50 | Fab Management Forum, Room 14c

- Transforming Production Planning with Autonomous Technology and Prescriptive Analytics – A Joint Case Study with Seagate Technology

Thursday 20 Nov | 15:40 | Smart Manufacturing Executive Forum, Hall C2

Upcoming Webinars

Following SEMICON Europa, we’ll host two webinars diving deeper into key manufacturing challenges and the role of advanced optimisation.

Mastering Backend Complexity: How APS Transforms Backend Scheduling

Thursday 27 Nov | 17:00 UTC+8 | 17:00 SGT | 17:00 MYT | 09:00 GMT

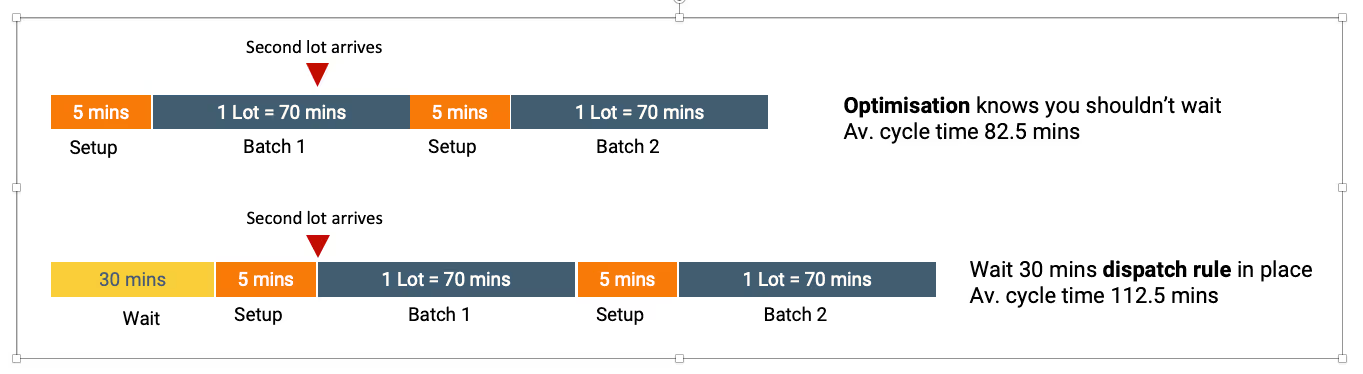

Backend manufacturing has become too complex for spreadsheets and simple dispatch rules. Join Sebastian Steele, Product Director at Flexciton, to explore how Advanced Planning & Scheduling (APS) can transform Assembly & Test and Advanced Packaging operations. The session will show how APS helps teams balance workloads across multiple lines, synchronise complex multi-die routes, and prevent bottlenecks before they occur. Participants will see how the solution improves on-time delivery, increases utilisation by reducing setup and changeover losses, maintains QTimer compliance automatically, and provides predictive visibility into potential production issues, all while eliminating the daily manual effort that traditional spreadsheets and rule-based dispatching require.

The Road to Fab Autonomy: Building on Automation, Optimisation, and Visibility

Thursday 4 Dec | 16:00 GMT | 17:00 CET | 08:00 PST | 10:00 CST

In partnership with Intel AFS and KUKA, this session examines how interoperable technologies can help fabs progress step by step along the SEMI Smart Manufacturing “Automation & Autonomy Maturity Framework.” Speakers Dennis Xenos (Flexciton), Paul Schneider (Intel AFS), and Christian Felkel (KUKA) will share practical examples demonstrating how fabs can advance their capabilities in material handling automation, smart scheduling and planning, and operational visibility. The webinar highlights how autonomy can be achieved through incremental, data-driven improvements rather than major rebuilds, showing how collaborative and interoperable solutions enable fabs to gain flow, throughput, and resilience as they move through the framework’s M0–M4 stages.

From Munich to our online webinars, Flexciton continues to demonstrate how intelligent optimisation is redefining frontend and backend operations, making autonomous manufacturing practical, scalable, and within reach.

If you’d like to connect with our team to discuss our products, implementations, sales, or any other inquiries, you can submit questions here.

Intel and Flexciton Announce Partnership

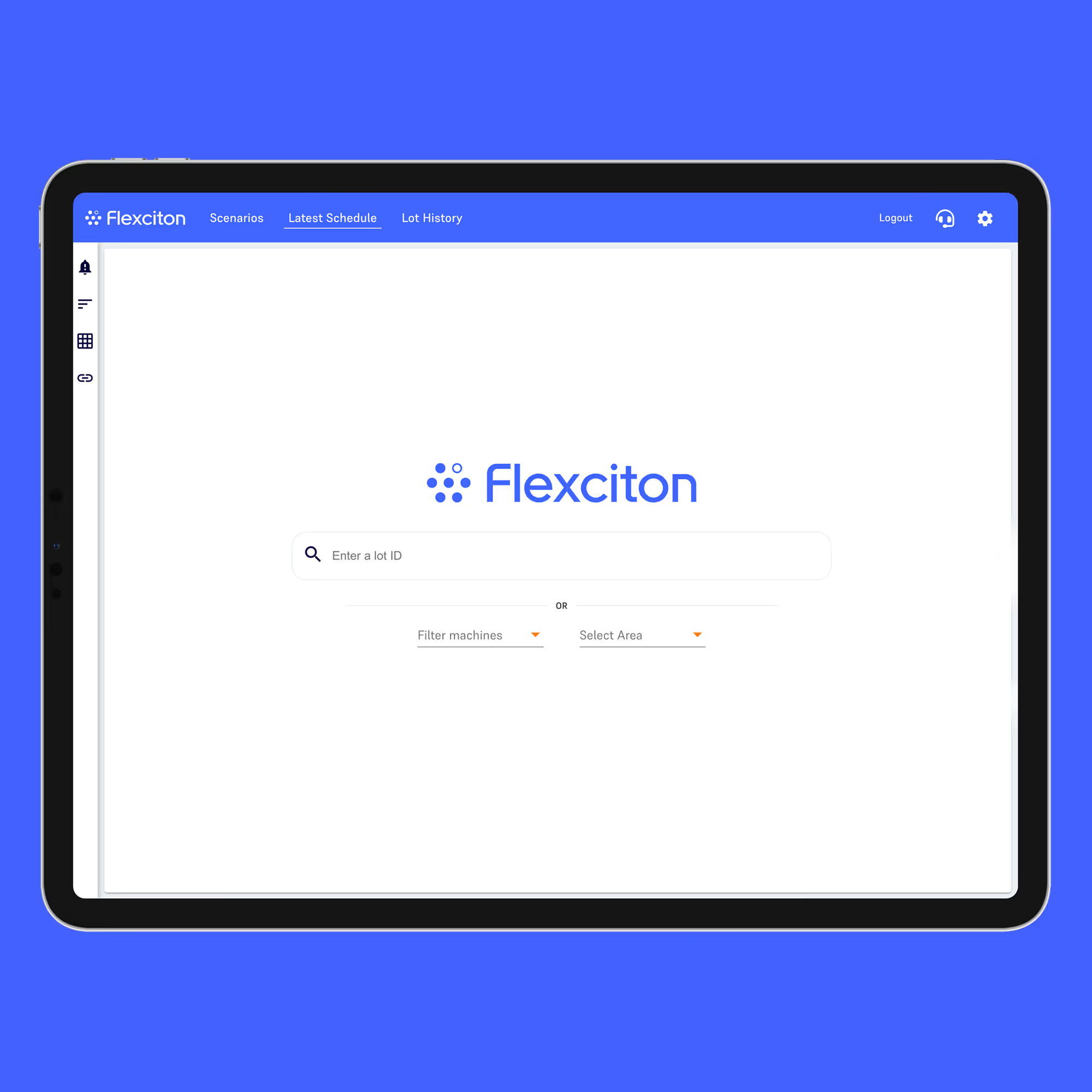

LONDON – September 25th, 2025 – Flexciton, a leader in autonomous planning and scheduling for semiconductor manufacturing, today announced a partnership with Intel. This collaboration will provide semiconductor manufacturers with a comprehensive, end-to-end set of software solutions to increase the level of automation and accelerate their transition to autonomous factory operations.

The partnership combines the power of the Intel® Automated Factory Solutions (Intel® AFS) software suite, including Intel® Operations Recon and Intel® Factory Pathfinder, with Flexciton’s autonomous technology suite of Advanced Production Planning and Scheduling. The two companies will offer a synergistic, holistic approach that provides complete visibility and control over complex semiconductor production workflows, enabling smart, autonomous decision-making and significant gains in key performance indicators.

"I am incredibly excited about this partnership. Intel® AFS solutions have been developed and tested within the most advanced fabs, and we see a great synergy with our cutting-edge planning and scheduling technologies,"

said Jamie Potter, CEO & Cofounder, Flexciton.

"With Intel® Factory Pathfinder and Intel® Operations Recon combined with our Flex Planner and Scheduler suite, we will be able to provide an end-to-end optimisation solution that empowers our customers to unlock new levels of automation and significantly increase factory efficiency."

The partnership is designed to meet the growing demand for greater operational efficiency in the semiconductor industry. By leveraging high-speed simulations, AI, and advanced optimisation, the combined approach will enhance factory automation and deliver tangible benefits, including complete visibility of shop-floor operations, optimised planning and scheduling, and a significant uplift in factory key metrics such as throughput and cycle times.

Paul Schneider, Principal Engineer and Director at Intel, said:

"By combining our deep factory automation expertise with Flexciton's innovative scheduling solutions, we are providing manufacturers with the critical tools they need to enhance their operational efficiency and maintain a competitive edge."

______

About Flexciton: Flexciton partners with semiconductor manufacturers to power their transition towards autonomous factories. Our suite of intelligent planning and scheduling applications combines advanced optimisation techniques with the power of AI to orchestrate complex fab workflows and achieve critical revenue-to-shop-floor alignment. Flexciton’s Autonomous Technology transforms fab operations by eliminating manual and reactive decision-making processes. This dramatically improves factory throughput and cycle times, enhances labour efficiency, and optimises overall costs and resource utilisation. Trusted by industry leaders including Seagate Technology, Renesas, and Microchip, Flexciton drives the next phase of digitalisation and transformation to an autonomous factory. Headquartered in London, UK, Flexciton operates globally with dedicated teams located in Europe and the US. www.flexciton.com

About Intel® Automated Factory Solutions: Intel® Automated Factory Solutions (Intel® AFS) is a comprehensive software suite that optimises industrial processes. It utilises advanced technologies such as Digital Twins, predictive analytics, high-speed simulation, and AI to improve efficiency and reduce downtime in factory operations and other complex operational processes with many interdependencies.

Intel® Factory Pathfinder: A high-speed discrete event simulator and digital twin designed for factory prediction and optimisation. It can function independently or integrate with production systems to streamline product assignments and reduce order fulfilment times.

Intel® Operations Recon: Provides a graphical digital twin of factory production equipment and automated systems, boosting operational visibility and enabling real-time troubleshooting and material movement simulations. www.intel.com/content/www/us/en/software/automated-factory-solutions.html

Accelerating the Future Panel Discussion: Key Takeaways from Industry Leaders

The semiconductor industry's journey toward fully autonomous manufacturing is underway, driven by advanced technologies and strategic investment. Staying ahead in smart manufacturing technologies has become paramount for global competitiveness. This topic was the focal point of a recent panel discussion webinar hosted by Jamie Potter, Flexciton CEO & Cofounder. The panel featured industry leaders representing fabs and suppliers: Matthew Johnson, VP of Wafer Fab Operations at Seagate; Patrick Sorenson, Industrial Engineer at Microchip Technology; Francisco Lobo, CEO of Critical Manufacturing; and Madhav Kidambi, Technical Marketing Director at Applied Materials.

Survey Insights: Where Are We Now?

The panel discussion was initiated with a presentation of the findings from Flexciton's inaugural Front End Manufacturing Insights survey, conducted among fabs in the US, Europe, and Asia. Key takeaways included:

- A majority of respondents see autonomous manufacturing as achievable within the next decade.

- Data standardization and integration remain major barriers, delaying scalable solutions.

- Cloud computing, IoT and mathematical optimization are the three most widely adopted advanced technologies in fabs so far.

These insights laid a strong foundation for a lively discussion, highlighting the shared vision while addressing divergent strategies and challenges.

Insights from Industry Experts

Pragmatism Over Perfection in Data Models

Francisco Lobo emphasized the importance of starting with what’s available when building scalable solutions.

“Instead of building a complete model from scratch, leverage existing standards and your MES infrastructure. Begin with a pragmatic approach and evolve as you learn.”

This iterative strategy ensures companies can start deriving value early, without waiting years for a perfect model to be developed.

Strategic Investments in Downturns

While many fabs postpone investments during downcycles, Matthew Johnson emphasized that smart manufacturing investments should be continuous rather than cyclical. He highlighted the strategic advantage of such an approach:

“In down cycles, you often need these solutions the most. For example, using smart manufacturing to scale metrology tools through sampling can significantly stretch your existing resources without capital-heavy investments.”

His insight underscores how downturns provide a window to refine processes for long-term gains.

Getting Leadership Buy-in

Securing leadership support for smart manufacturing investments remains challenging when benefits aren't immediately apparent. Patrick Sorenson shared that the ROI justification was easier during the recent upcycle:

"If we just get a few more lots out of the fab when we have more demand than capacity, that will pay for itself."

In other scenarios, the case rests on demonstrating benefits through yield improvements, capital avoidance, or labor efficiency.

Industry Alignment on the Vision

Madhav Kidambi observed a growing consensus around the end goal of autonomous manufacturing, even as companies differ in their pathways:

“The vision of Lights Out manufacturing is clear, but strategies are evolving as companies learn how to justify and sequence investments to sustain the journey.”

Ecosystem Collaboration and The Path Towards Autonomy

A key theme emerging from the discussion is the importance of collaboration between suppliers and fabs. This includes:

- Open platforms and integration capabilities

- Standardized data protocols

- Partner ecosystems for specialized solutions

- Shared innovation initiatives

As the industry progresses toward autonomous manufacturing, success will depend on:

- Maintaining continuous investment in smart technologies

- Taking pragmatic approaches to data integration

- Developing clear ROI frameworks

- Fostering collaboration across the ecosystem

- Building upon existing systems and standards

As Matthew Johnson of Seagate concluded:

"Fab operation is really a journey of continuous improvement, and the pursuit of smart technologies is a fundamental tenet of our strategy to ensure that we meet the objectives as an organization."

Watch the Full Webinar

The conversation is packed with actionable insights on overcoming barriers, achieving quick wins, and navigating the complexities of smart manufacturing adoption. Don’t miss out: click here to watch the full discussion recording.

Innovate UK invests in breakthrough technology developed by Flexciton and Seagate

London, UK – 1 Oct – Flexciton, a UK-based software company at the forefront of autonomous semiconductor manufacturing solutions, is excited to announce investment from Innovate UK in a strategic collaboration with Seagate Technology’s Northern Ireland facility. Innovate UK, the UK’s innovation agency, drives productivity and economic growth by supporting businesses to develop and realize the potential of new ideas. As part of their £11.5 million investment across 16 pioneering projects, this collaboration will help develop and demonstrate cutting-edge technology to boost semiconductor manufacturing efficiency and enhance the UK’s role in the global semiconductor supply chain.

Jamie Potter, CEO and Cofounder of Flexciton, commented:

"We are thrilled to partner with Seagate Technology to bring yet another Flexciton innovation to market. By combining our autonomous scheduling system with Flex Planner, we are enhancing productivity in semiconductor wafer facilities and driving greater adoption of autonomous manufacturing."

The partnership aligns directly with the UK government’s National Semiconductor Strategy, which seeks to secure the UK’s position as a key player in the global semiconductor industry. Flexciton’s contribution to this strategy is not just a testament to its cutting-edge technology but also highlights the company’s role in reinforcing supply chain resilience and scaling up manufacturing capabilities within the UK.

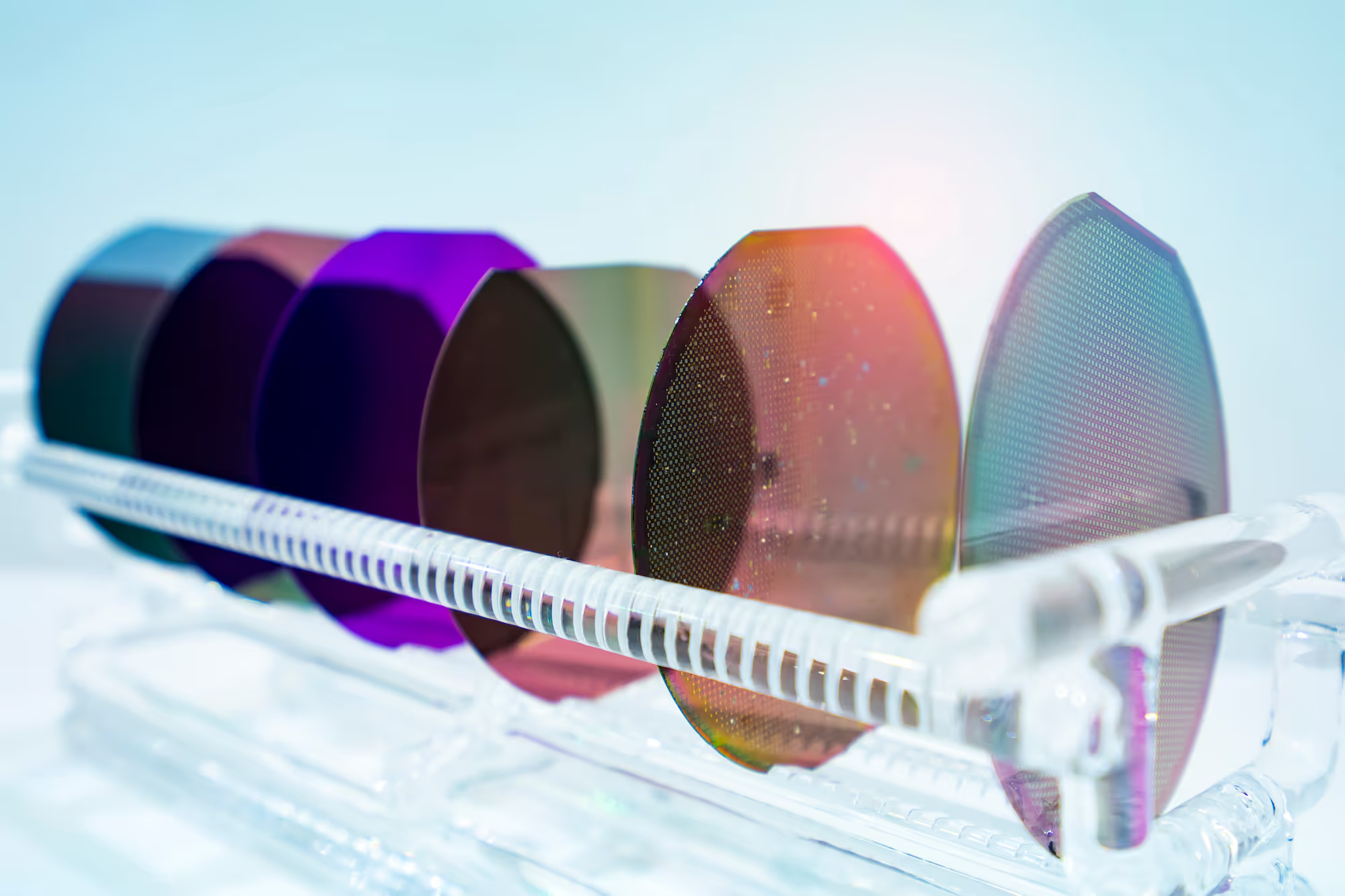

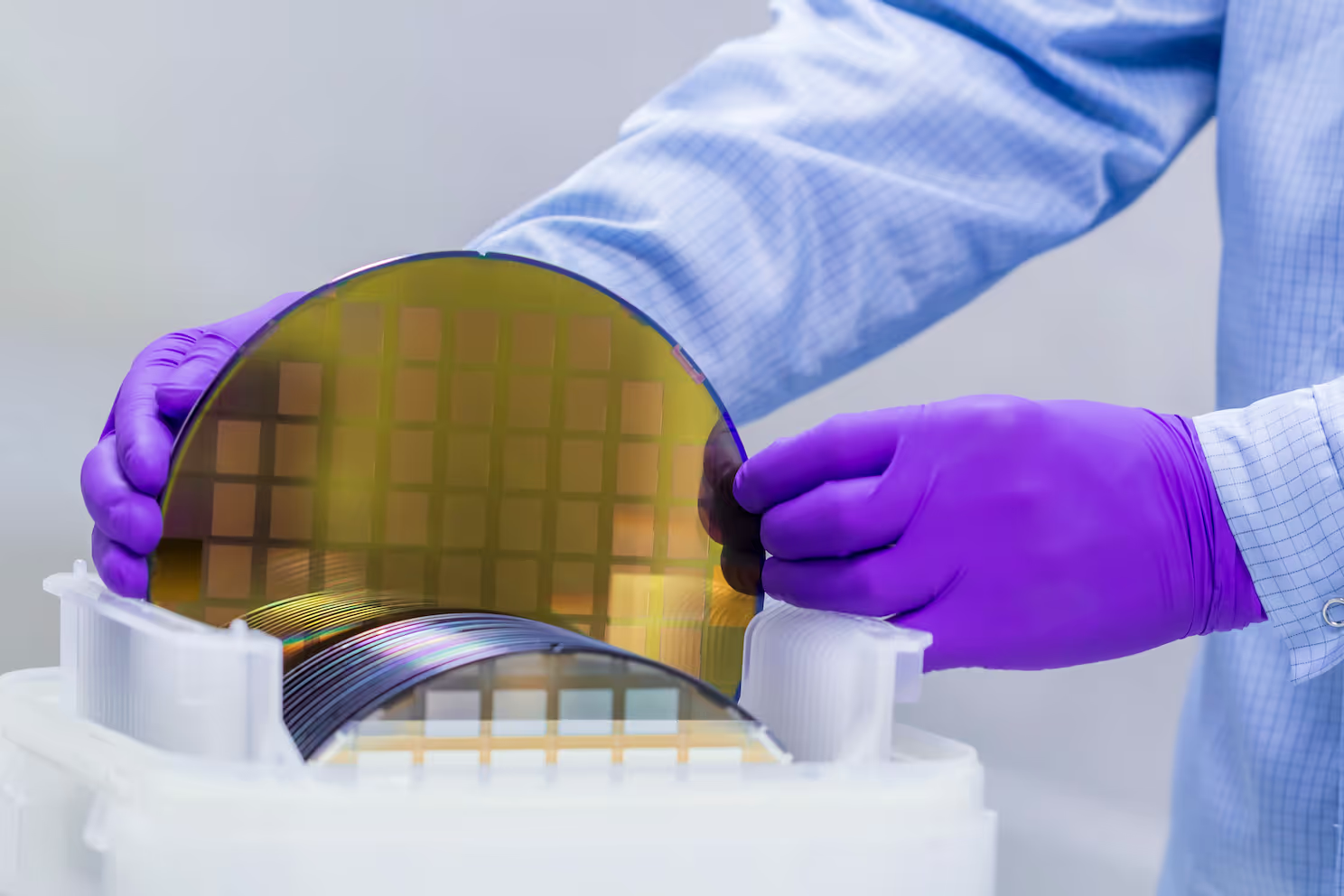

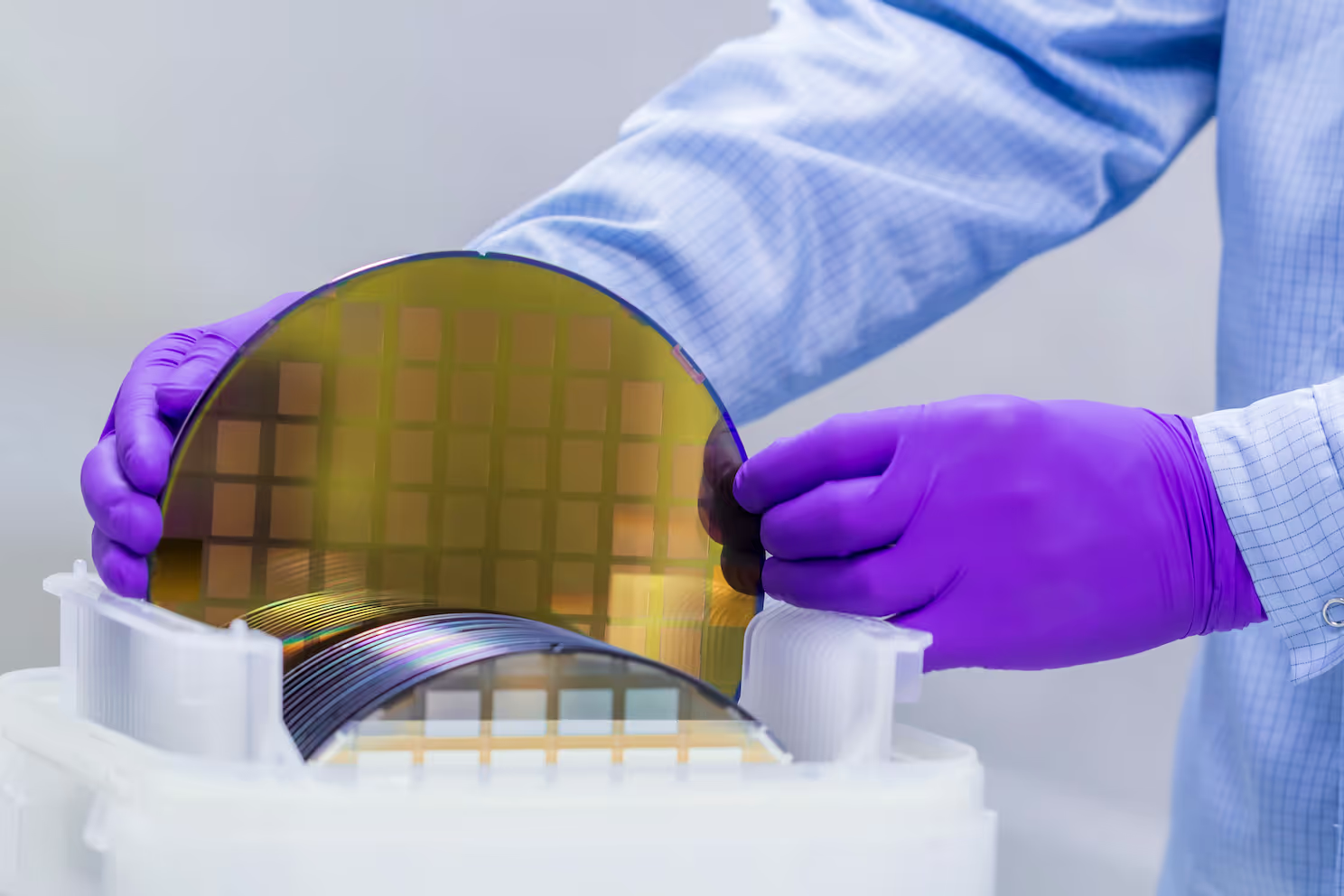

Flex Planner: A breakthrough solution for chip manufacturing

At the heart of this project is Flex Planner, the first closed-loop production planning solution for semiconductor manufacturing with the ability to control the flow of WIP in a fab over the next 2-4 weeks, autonomously avoiding dynamic bottlenecks, reducing cycle times, and improving on-time delivery performance.

Supporting the UK's semiconductor growth

The UK government’s investment in semiconductor innovation underlines its commitment to fostering cutting-edge solutions that bolster the sector’s growth. The UK semiconductor sector is projected to grow from £10 billion to £17 billion by 2030, with initiatives like this collaboration driving the innovation necessary to achieve these goals.

Flexciton’s partnership with Seagate exemplifies how collaboration between technology innovators and manufacturers can lead to transformative advances in the industry. The funding from Innovate UK enables both companies to develop and test solutions that not only enhance productivity but also position the UK as a critical link in the global semiconductor ecosystem.

About Flexciton

Flexciton is pioneering autonomous technology for production scheduling and planning in semiconductor manufacturing. Leveraging advanced AI and optimization technology, we tackle the increasing complexity of chipmaking processes. By simplifying and streamlining wafer fabrication with our next-generation solutions, we enable semiconductor fabs to significantly enhance efficiency, boost productivity, and reduce costs. Empowering manufacturers with unmatched precision and agility, Flexciton is revolutionizing wafer fabrication to meet the demands of modern semiconductor production.

For media inquiries, please contact: media@flexciton.com

The Pathway to the Autonomous Wafer Fab

Over the next 6 years, the semiconductor industry is set to receive around $1tn in investment. The opportunities for growth – driven by the rapid rise of AI, autonomous and electric vehicles, and high-performance computing – are enormous. To support this anticipated growth, over 100 new wafer fabs are expected to emerge worldwide in the coming years (Ajit Manocha, SEMI 2024).

However, a significant challenge looms: labor. In the US, one-third of semiconductor workers are now aged 55 or older. Younger generations are increasingly drawn to giants like Google, Apple and Meta for their exciting technological innovation and brand prestige, making it difficult for semiconductor employers to compete. In recent years, the likelihood of employees leaving their jobs in the semiconductor sector has risen by 13% (McKinsey, 2024).

To operate these new fabs effectively, the industry must find a solution. The Autonomous Wafer Fab, a self-optimizing facility with minimal human intervention and seamless production, is looking increasingly likely to be the solution chipmakers need. This vision, long held by the industry, now needs to be accelerated due to current labor pressures.

Thankfully, rapid advancements in artificial intelligence (AI) and Internet of Things (IoT) mean that the Autonomous Wafer Fab is no longer a distant dream but an attainable goal. In this blog, we will explore what an Autonomous Wafer Fab will look like, how we can achieve this milestone, the expected outcomes, and the timeline for reaching this transformative state.

What will an Autonomous Wafer Fab look like?

Imagine a wafer fab where the entire production process is seamlessly interconnected and self-regulating, free to make decisions on its own. In this autonomous environment, advanced algorithms, IoT, AI and optimization technologies work in harmony to optimize every aspect of the manufacturing process. From daily manufacturing decisions to product quality control and fault prediction, every step is meticulously coordinated without the need for human intervention.

Key features of an Autonomous Wafer Fab:

Intelligent Scheduling and Planning: The heart of the autonomous fab lies in its scheduling and planning capabilities. By leveraging advancements such as Autonomous Scheduling Technology (AST), the fab has the power to exhaustively evaluate billions of potential scenarios and guarantee the optimal course for production. This ensures that all constraints and variables are considered, leading to superior outcomes in terms of throughput, cycle time, and on-time delivery.

Real-Time Adaptability: An autonomous fab is equipped with sensors and IoT devices that continuously monitor the production environment. These devices can feed real-time data into the scheduling system, allowing it to dynamically adjust schedules and production plans in response to any changes or disruptions.

Digital Twin: Digital Twin technology mirrors real-time operations by storing large volumes of data from sensors and IoT devices. Because this data follows a standardized schema, new technologies can be introduced rapidly and the system scales more easily. Moreover, by simulating production processes, the digital twin helps to model possible scenarios, such as KPI adjustments, within the specific constraints of the fab.

Predictive maintenance: Predictive maintenance systems will anticipate equipment failures before they occur, reducing downtime and extending the lifespan of critical machinery. This proactive approach ensures that the fab operates at peak efficiency with minimal interruptions. Robotics will carry out the physical maintenance tasks identified by these systems, and when human intervention is necessary, remote maintenance capabilities will allow technicians to diagnose and address issues without being on-site.

The Control Room: In an autonomous fab, decision-making is driven by data and algorithms. The interconnected system can balance trade-offs between competing objectives, such as maximizing throughput while minimizing cycle time, with unparalleled precision. That said, critical decisions such as overall fab objectives may still be left to humans in the “control room”, who could be on the fab site or 9000 km away…

How can we get there?

Achieving the vision of an Autonomous Wafer Fab requires a multi-faceted approach that integrates technological innovation, strategic investments, and a cultural shift towards embracing automation. Here are the key steps to pave the way:

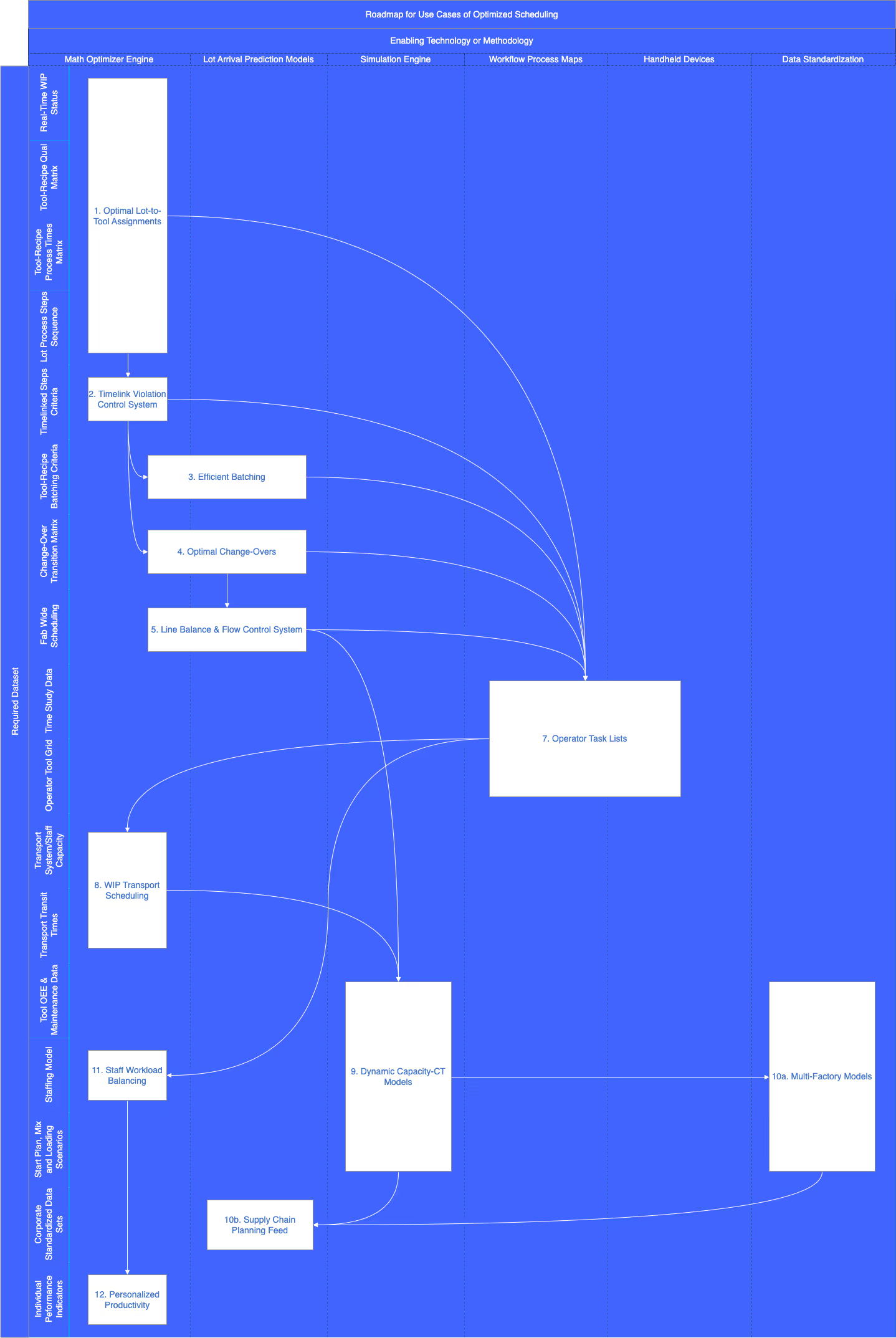

A Robust Roadmap: All fabs within an organization need to have a common vision. Key milestones need to be laid out to help navigate each fab through the transition with clear actions at each stage. SEMI’s smart manufacturing roadmap offers insight into what this could look like.

Investing in Novel Technologies: The pivotal step towards autonomy is investing in the latest technologies, including AI, machine learning, AST, and IoT. These technologies form the backbone of the autonomous fab, enabling intelligent planning and scheduling, real-time monitoring, and adaptive control.

Data Integration and Analytics: A crucial aspect of autonomy is the seamless integration of data from various sources within the fab. By harnessing big data analytics, fabs can not only gain deep insights into their operations but also put the correct data in place to support autonomous systems further down the line.

Developing a Skilled Workforce: While the goal is to minimize human intervention, the semiconductor industry will still require skilled professionals who can manage and maintain advanced systems. Investing in workforce training and development to fill the current void is essential to ensure a smooth transition.

Collaborative Ecosystem: Even the biggest chipmaker is unlikely to reach the autonomous fab on its own. Collaboration with technology providers, research institutions, and industry partners will be key. Sharing knowledge and best practices can accelerate the development and deployment of autonomous solutions.

Pilot Programs and Gradual Implementation: Transitioning to an autonomous fab should be approached incrementally. Starting with pilot programs to test and refine technologies in a controlled environment will help identify challenges and demonstrate the benefits. Gradual implementation allows for continuous improvement and adaptation.

How will fabs benefit?

The transition to an Autonomous Wafer Fab promises a multitude of benefits that will revolutionize semiconductor manufacturing:

Enhanced Efficiency: By optimizing production schedules and processes, autonomous fabs will achieve higher throughput and better resource utilization. This translates to increased production capacity and reduced operational costs.

Better Quality: Advanced process control and real-time adaptability ensure consistent product quality, minimizing defects and rework. This leads to higher yields and greater customer satisfaction.

Reduced Downtime: Predictive maintenance and automated decision-making reduce equipment failures and production interruptions. This results in higher uptime and more reliable operations.

Improved Flexibility: Autonomous fabs can quickly adapt to changing market demands and production requirements. This flexibility enables manufacturers to respond rapidly to customer needs and stay competitive in a dynamic industry.

Cost Savings: The efficiencies gained from autonomous operations lead to significant cost savings. Reduced labor intensity, lower material waste, and optimized energy consumption contribute to a more cost-effective production process.

Sounds great, but when will it become a reality?

The journey towards an Autonomous Wafer Fab is well underway, but the timeline for full realization varies depending on several factors, including technological advancements, industry adoption, and investment levels. However, significant progress is expected within the next decade.

Short-Term (1-3 Years):

- Implementation of pilot programs and continual adoption of AI, IoT, AST and other advanced technologies.

- Incremental improvements in scheduling, process control, and maintenance practices.

Medium-Term (3-7 Years):

- Broader adoption of autonomous solutions across the industry.

- Enhanced data integration and analytics capabilities.

- Development of a skilled workforce to support autonomous operations.

Long-Term (7-10 Years and Beyond):

- Full realization of the Autonomous Wafer Fab with minimal human intervention.

- Industry-wide standards and best practices for autonomous manufacturing.

- Continuous innovation and refinement of autonomous technologies.

Conclusion

The pathway to the Autonomous Wafer Fab is a transformative journey that holds immense potential for the semiconductor industry. By embracing advanced technologies, fostering collaboration, and investing in the future workforce, fabs can unlock unprecedented levels of efficiency, quality, and flexibility. Autonomous Scheduling Technology, as a key pillar, will play a crucial role in this evolution, driving the industry towards a future where production is seamless, self-optimizing, and truly autonomous. The vision of an Autonomous Wafer Fab is not just a distant possibility but an imminent reality, poised to redefine the landscape of semiconductor manufacturing.

Now available to download: our new Autonomous Scheduling Technology White Paper

We have just released a new White Paper on Autonomous Scheduling Technology (AST) with insights into the latest advancements and benefits.

Click here to read it.

Switching to Autonomous Scheduling: What is the Impact on Your Fab?

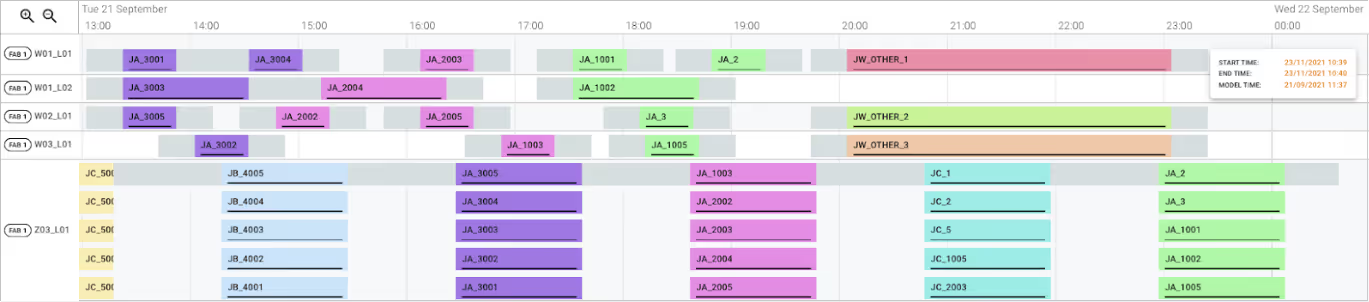

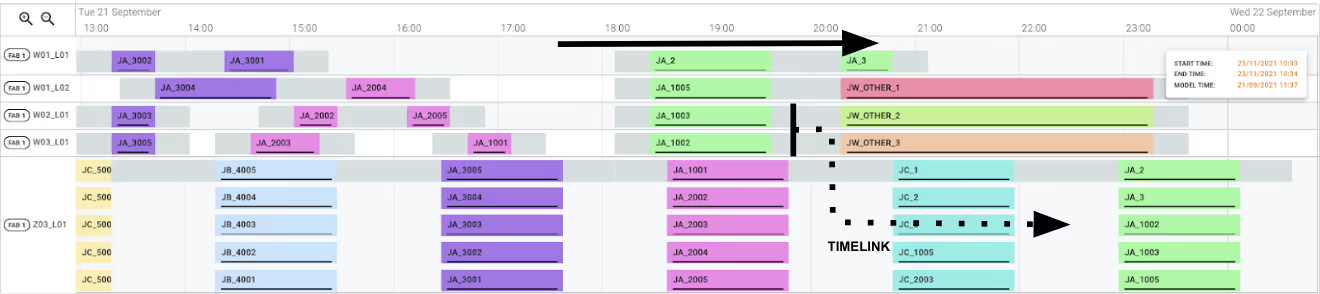

In the fast-paced world of semiconductor manufacturing, efficient production scheduling is crucial for chipmakers to maintain competitiveness and profitability. The scheduling methods used in wafer fabs can be classified into two main categories: heuristics and mathematical optimization. Both methods aim to achieve the same goal: to provide the best schedules within their capabilities. However, because they use different problem-solving methodologies, the outcomes differ dramatically. Simply put, heuristics generate solutions by making decisions based on if-then rules predefined by a human, while optimization algorithms search through billions of possible scenarios to automatically select the optimal one.
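To make the distinction concrete, here is a toy Python sketch with hypothetical data: three lots on a single tool, scored by total tardiness (how late each lot finishes against its due time). The "heuristic" is a simple first-in-first-out rule; the "optimization" brute-forces every possible sequence. Enumeration only works for a handful of lots; practical optimization schedulers rely on mathematical programming solvers and decomposition rather than listing every sequence, and nothing here represents how AST itself is implemented.

```python
from itertools import permutations

# Hypothetical toy data: (lot name, processing time in hours, due time in hours),
# listed in arrival order.
LOTS = [("A", 6, 10), ("B", 2, 3), ("C", 1, 2)]

def total_tardiness(sequence):
    """Sum of how late each lot finishes when run back to back on one tool."""
    clock, tardiness = 0, 0
    for _name, proc_time, due in sequence:
        clock += proc_time
        tardiness += max(0, clock - due)
    return tardiness

def fifo_heuristic(lots):
    """A fixed, human-defined rule: always dispatch the lot that arrived first."""
    return list(lots)

def exhaustive_optimum(lots):
    """Evaluate every possible sequence and keep the one with the least tardiness.
    (Brute force stands in for a real optimization solver in this sketch.)"""
    return min((list(p) for p in permutations(lots)), key=total_tardiness)

heuristic_seq = fifo_heuristic(LOTS)
optimal_seq = exhaustive_optimum(LOTS)
print([n for n, _, _ in heuristic_seq], total_tardiness(heuristic_seq))  # ['A', 'B', 'C'] 12
print([n for n, _, _ in optimal_seq], total_tardiness(optimal_seq))      # ['C', 'B', 'A'] 0
```

Here the FIFO rule runs the long lot A first and makes B and C late, while the search finds the order C, B, A with no tardiness at all. The rule is cheap but blind to consequences; the search pays for evaluating alternatives and is rewarded with a better schedule.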

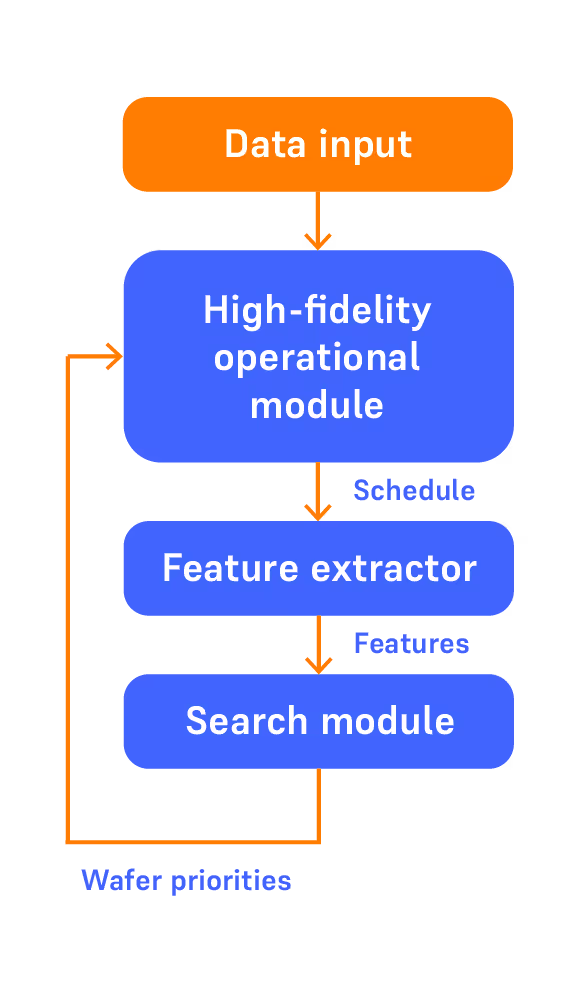

Autonomous Scheduling Technology (AST) features mathematical optimization combined with smart decomposition, allowing the quick delivery of optimal production schedules. Whether you are a fab manager or industrial engineer, the experience and results of applying Autonomous Scheduling in your fab are fundamentally different compared to a heuristic scheduler.

Here's how switching to AST can impact your fab.

Consistent and Superior KPIs Guaranteed

Autonomous Scheduling Technology (AST) evaluates all constraints and variables in the production process simultaneously, ensuring optimal decision-making. Unlike heuristic schedulers, which rely on ongoing trial and error with if-then rules to solve the problem, AST allows the user to balance trade-offs between high-level fab objectives. With its forward-looking capability, it can assess the consequences of scheduling decisions across the entire production horizon and generate schedules that guarantee that the fab's global objectives are met. The tests we have conducted against a heuristic-based scheduler showed that Autonomous Scheduling delivered superior results. Book a demo to find out more.

Never miss a shipment

One of the most critical aspects of fab operations is meeting On-Time-Delivery deadlines. With AST, schedules are optimized towards specific fab objectives, ensuring that production targets align with business goals. Mark Patton, Director of Manufacturing at Seagate Springtown, confirmed that adopting Autonomous Scheduling in his fab allowed him to:

"improve our predictability of delivery by meeting weekly customer commits. With a lengthy cycle time build, this predictability and linearity has been key to enabling the successful delivery and execution of meeting commits consistently."

Reduced workload (by at least 50%)

The reactive nature of heuristic-based schedulers places a significant burden on industrial engineers, who must constantly – and manually – tune rules and adjust parameters. To ensure these systems run optimally, fab managers must dedicate at least one industrial engineer to working full-time on maintaining them. With AST, the workload is significantly reduced due to the system's ability to optimize schedules autonomously (without human intervention). This means there will be no more firefighting when the WIP profile changes. This reduction in labor intensity frees up engineers to engage in value-added activities.

Reduced rework, improved yield

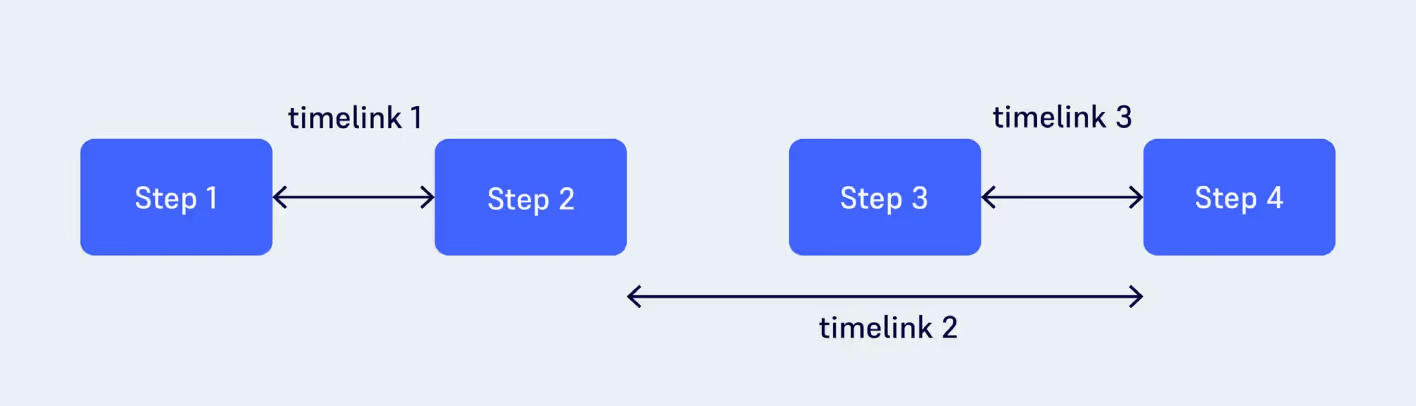

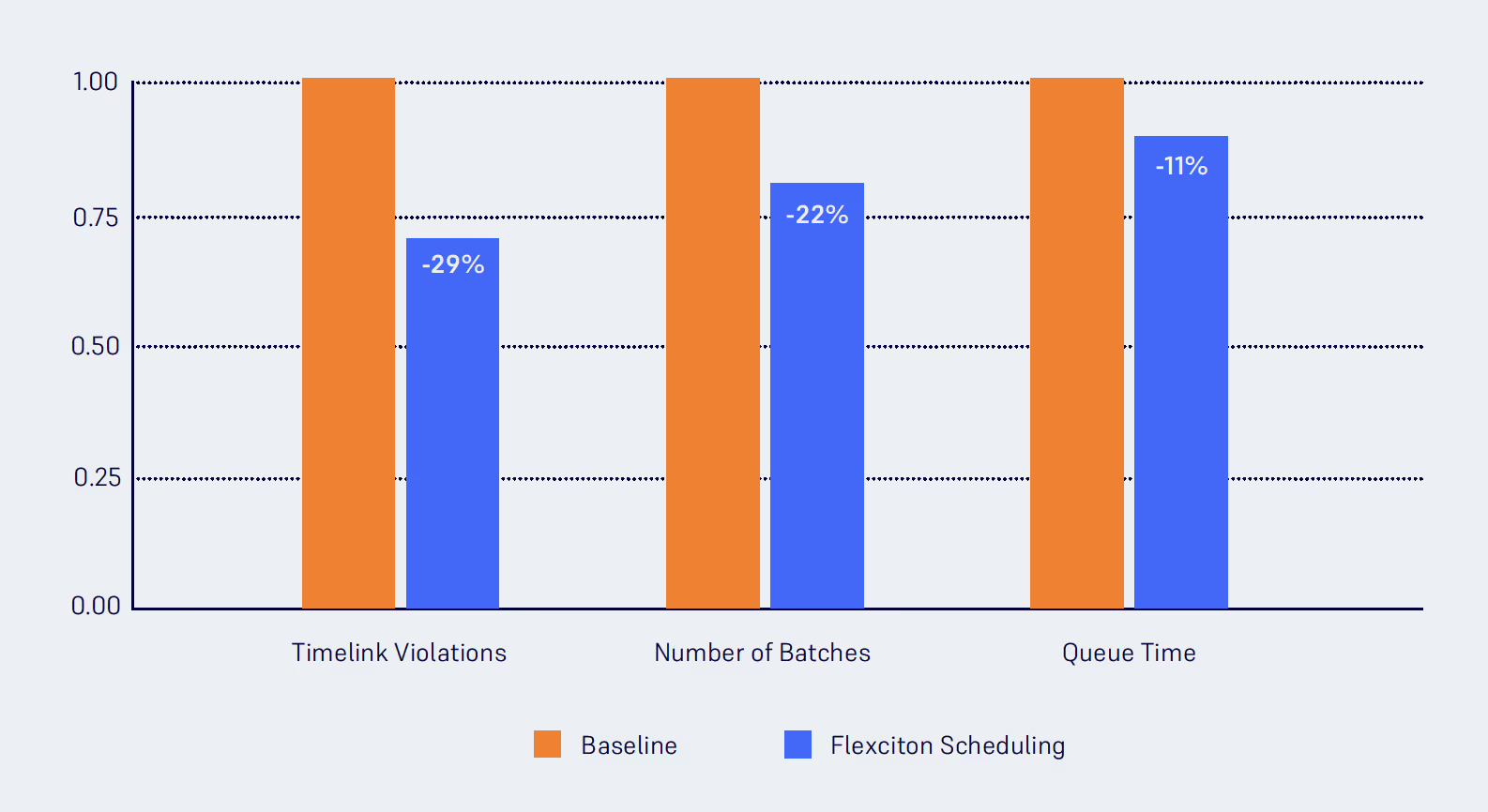

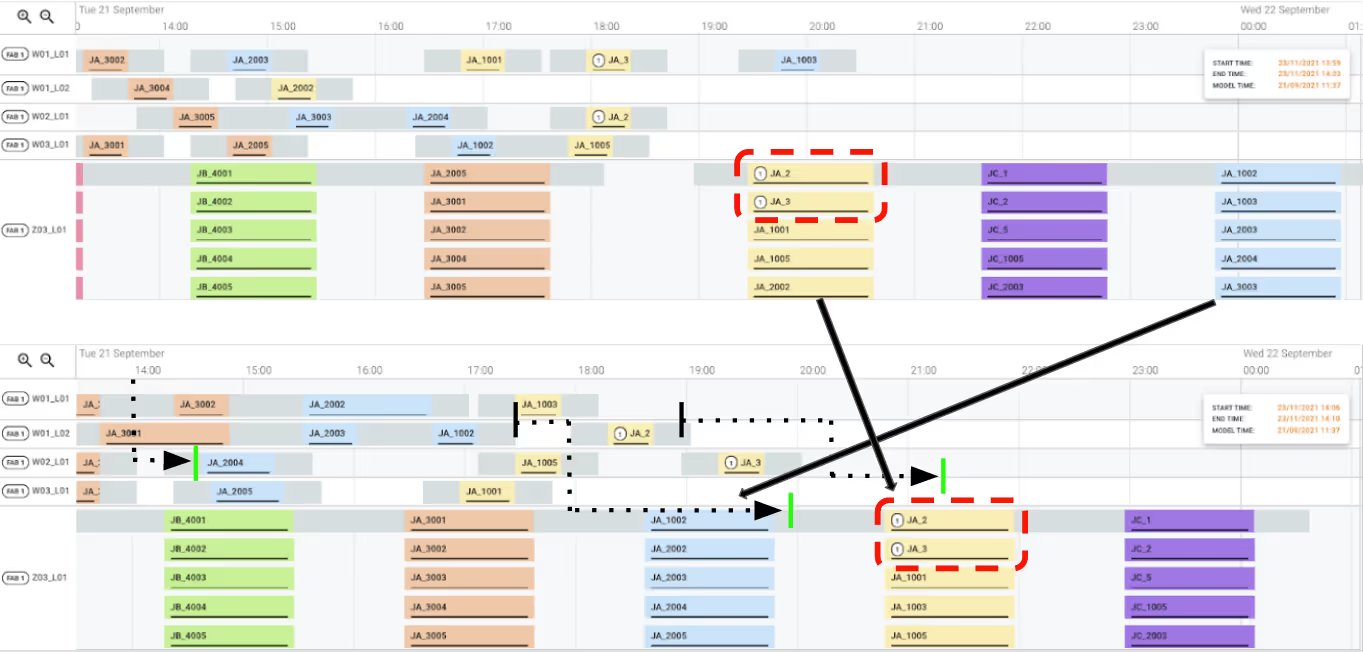

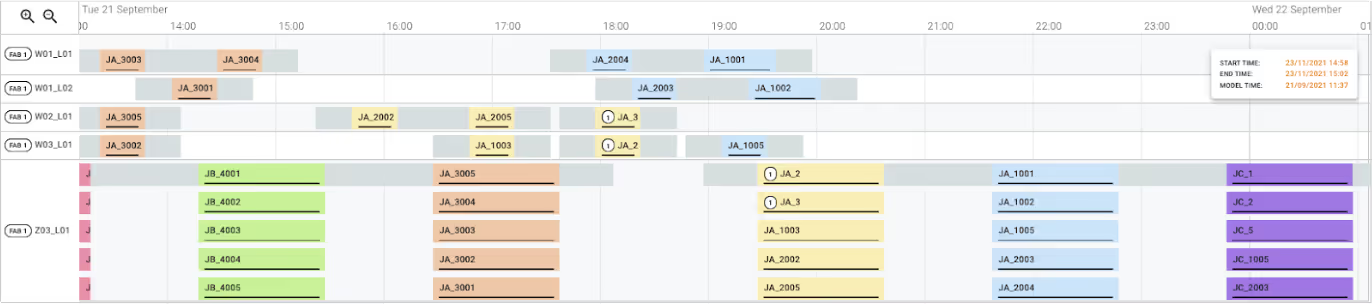

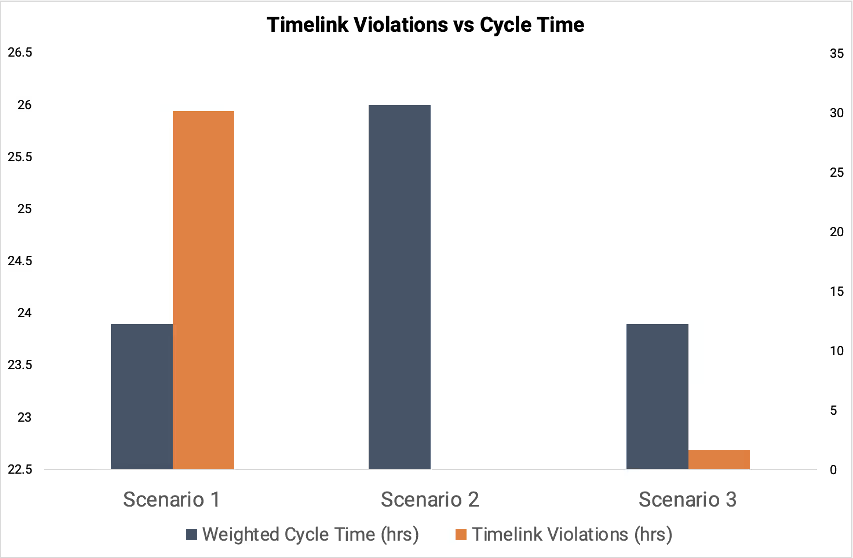

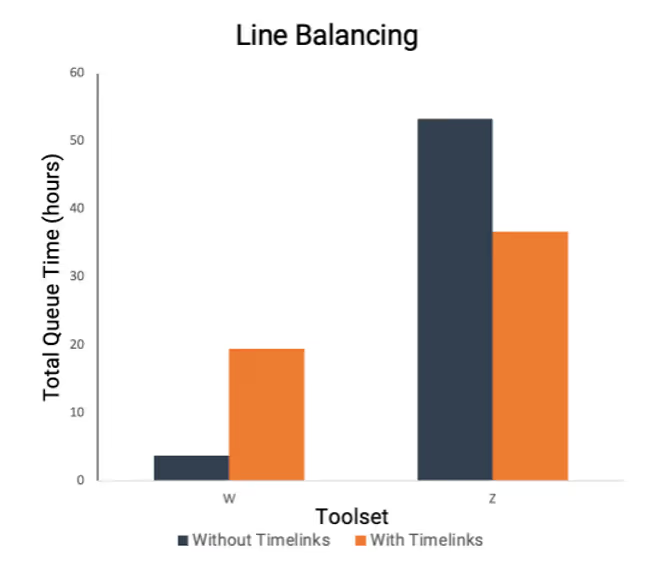

Some areas of a fab are notoriously challenging to optimize. For example, the diffusion and clean area is home to very complex time constraints, also known as timelinks. When timelinks are violated, wafers either require rework or must be scrapped. Either way, it's a considerable cost for a fab. Autonomous Scheduling Technology is highly effective at managing conflicting KPIs with its multi-objective optimization capabilities. AST dynamically adjusts to changes in the fabrication process to consistently eliminate timelink violations whilst maximizing throughput.
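A timelink can be expressed as a maximum waiting time between the end of one step and the start of the next. The minimal sketch below uses hypothetical step names, windows and times, and only checks a finished schedule for violations; an optimization scheduler would instead treat the same condition as a hard constraint while building the schedule, so that violating sequences are never produced.

```python
from dataclasses import dataclass

@dataclass
class Timelink:
    """Hypothetical time constraint: to_step must start within max_wait minutes
    after from_step finishes, otherwise the lot needs rework or is scrapped."""
    from_step: str
    to_step: str
    max_wait: int  # minutes

def timelink_violations(step_times, links):
    """step_times maps (lot, step) -> (start, end) in minutes.
    Returns (lot, from_step, to_step, overrun_minutes) for every violated link."""
    violations = []
    lots = {lot for lot, _step in step_times}
    for lot in sorted(lots):
        for link in links:
            src = step_times.get((lot, link.from_step))
            dst = step_times.get((lot, link.to_step))
            if src is None or dst is None:
                continue  # lot has not reached both steps in this schedule
            wait = dst[0] - src[1]  # start of next step minus end of previous step
            if wait > link.max_wait:
                violations.append((lot, link.from_step, link.to_step, wait - link.max_wait))
    return violations

# Hypothetical schedule: lot2 waits 340 minutes for diffusion against a 120-minute window.
LINKS = [Timelink("clean", "diffusion", max_wait=120)]
SCHEDULE = {
    ("lot1", "clean"): (0, 30), ("lot1", "diffusion"): (100, 400),
    ("lot2", "clean"): (30, 60), ("lot2", "diffusion"): (400, 700),
}
print(timelink_violations(SCHEDULE, LINKS))  # [('lot2', 'clean', 'diffusion', 220)]
```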

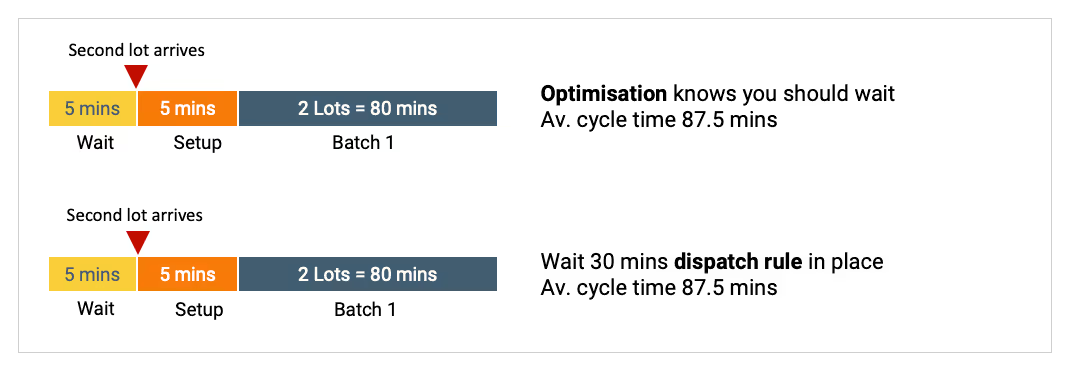

Confidence in Balancing Trade-offs

With its ability to look ahead, Autonomous Scheduling Technology can predict the consequences of different trade-off settings. This capability is particularly valuable when balancing competing objectives like throughput and cycle time. Users of legacy schedulers would typically move sliders to adjust the settings and wait a considerable amount of time to assess whether the adjustments generate the desired scheduling behavior. If not, further iterations are required, and the process repeats. In contrast, AST can evaluate billions of potential scenarios and determine the optimal balance between conflicting goals. For example, it can predict the exact impact of prioritizing larger batches over shorter cycle times, allowing fab managers to make informed decisions with confidence. This strategic foresight ensures that the best possible trade-offs are made, optimizing the whole fab to meet overarching objectives.
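The kind of what-if evaluation described above can be illustrated with a toy model. The sketch below assumes a hypothetical batch tool with a single tunable setting: the minimum number of lots to wait for before starting a batch. It simulates each setting, then scores it with user-chosen weights on the number of runs (a proxy for tool capacity consumed, and hence throughput) and the average queue wait (a proxy for cycle time). The policy, weights and data are illustrative assumptions, not a description of how AST models trade-offs.

```python
# Hypothetical arrival times (minutes) of lots at a batch tool.
ARRIVALS = [0, 10, 20, 30, 40, 50]
CAPACITY = 3    # max lots per batch (assumed)
PROC_TIME = 5   # minutes per batch run (assumed)

def simulate(arrivals, min_batch, capacity, proc_time):
    """Toy policy: wait until min_batch lots are queued (or no more lots will come),
    then run one batch with every queued lot, up to capacity.
    Returns (number_of_runs, average_wait_per_lot)."""
    arrivals = sorted(arrivals)
    n = len(arrivals)
    i, tool_free, runs, total_wait = 0, 0, 0, 0
    while i < n:
        need = min(min_batch, n - i)  # never wait for lots that will not arrive
        start = max(tool_free, arrivals[i + need - 1])
        j = i
        while j < n and j - i < capacity and arrivals[j] <= start:
            j += 1  # load every lot already waiting, up to capacity
        total_wait += sum(start - a for a in arrivals[i:j])
        runs += 1
        tool_free = start + proc_time
        i = j
    return runs, total_wait / n

def choose_min_batch(arrivals, capacity, proc_time, w_runs, w_wait):
    """Score each setting with a weighted sum (lower is better) and return the best."""
    scores = {}
    for k in range(1, capacity + 1):
        runs, avg_wait = simulate(arrivals, k, capacity, proc_time)
        scores[k] = w_runs * runs + w_wait * avg_wait
    return min(scores, key=scores.get)

print(choose_min_batch(ARRIVALS, CAPACITY, PROC_TIME, w_runs=10, w_wait=1))  # 3
print(choose_min_batch(ARRIVALS, CAPACITY, PROC_TIME, w_runs=1, w_wait=10))  # 1
```

With a heavy weight on runs, the sketch picks full batches of three (2 runs, 10 minutes average wait); with a heavy weight on waiting, it starts every lot immediately (6 runs, no wait). The consequences of each setting are computed before any setting is applied to the shop floor, rather than discovered by moving a slider and waiting.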

Conclusion

In an industry where efficiency and precision are paramount, Autonomous Scheduling Technology provides a distinct competitive advantage. It equips fabs with the tools to consistently outperform legacy systems, streamline operations, and ultimately drive greater profitability. By investing today in upgrading their legacy scheduling systems to Autonomous Scheduling Technology, wafer fabs are not only optimizing their current operations but also taking an important step toward the autonomous fab of the future.

Now available to download: our new Autonomous Scheduling Technology White Paper

We have just released a new White Paper on Autonomous Scheduling Technology (AST) with insights into the latest advancements and benefits.


The Flex Factor with... Lio


Meet Lio, a driving force behind client success as Flexciton's Technical Customer Lead. Discover more about her keen eye for collaboration and passion for innovation in this edition of The Flex Factor.

Tell us what you do at Flexciton?

I’m a Technical Customer Lead.

What does a typical day look like for you at Flexciton?

My days are incredibly busy and pass quickly as I collaborate with the customer team and other teams at Flexciton, making rapid progress day by day. My focus revolves around ongoing customer work, such as our work at Renesas (analyzing their adherence, checking the Flex Global heat map, and listening to feedback from the client). Additionally, I often work on live demos and PoC projects. The nature of my tasks varies with the project stage, ranging from initial data analysis and integration to the final stages, where I collaborate with sales on deliverables and the story of the final report. While consistently moving projects forward and meeting weekly targets, we also establish our working methods and standardize processes to make future projects more efficient. For lunch, I usually go to Atis, my go-to place for fresh and nutritious meals. People in the office call it a salad, but I consider it the healthy lunch with the highest ROI.

What do you enjoy most about your role?

I find the most enjoyment in witnessing the impact our product has on customers who need it. It's fulfilling to see their reactions when they share challenges, and I appreciate understanding how Flexciton can collaborate with them, providing that extra element for improvement.

If you could summarize working at Flexciton in 3 words, what would they be?

Creative, Fast, Collaborative.

Given the fast-paced evolution of technology, what strategies do you recommend for continuous learning and skill development in the tech field?

Stay closely connected to the client side. Understanding the technology they're developing and their current tech level (MES and other systems) provides insights into their readiness for Flexciton.

In the world of technology and innovation, what emerging trend or development excites you the most, and how do you see it shaping our industry?

The semiconductor industry's rapid evolution and diversity are fascinating. The competition between TSMC and Samsung Foundry in advanced GAA (gate-all-around) technology is particularly intriguing. While Samsung claims to be ahead, some industry voices suggest this is a bluff, pointing to poor yields. The competition is ongoing, and I wonder whether TSMC will maintain its lead or whether there will be a paradigm shift in the industry.

Tell us about your best memory at Flexciton?

Meeting the Renesas team at their fab in Palm Bay and witnessing the reaction of one of their operators to our app was a memorable experience. Kodi, a talented young manufacturing specialist, was genuinely impressed by our technology, which was amazing to see in person. After we returned home, Amar even named a piece of code after him.

Do you think you have what it takes to work at Flexciton? Visit our careers page to browse our current openings.

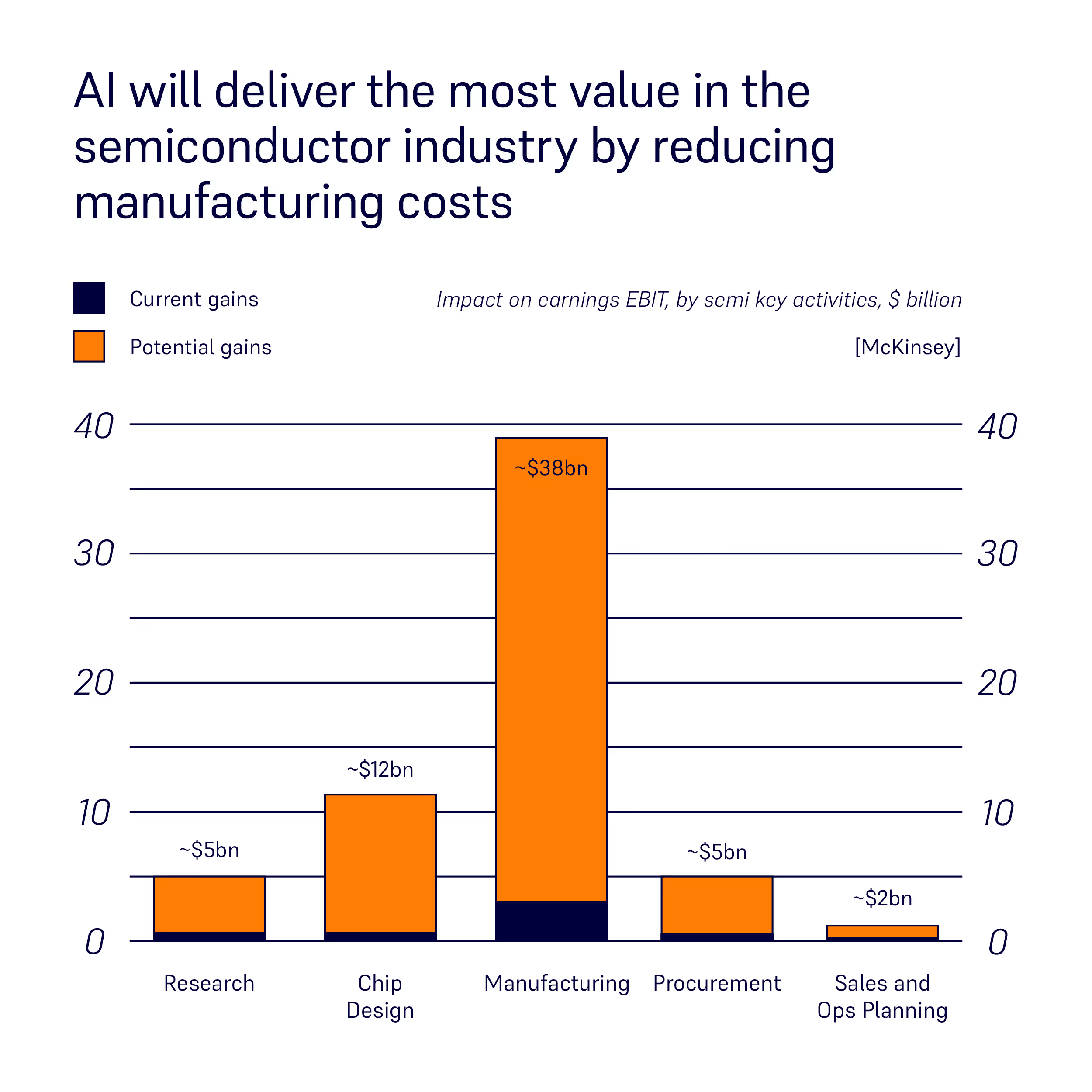

Harnessing AI's Potential: Revolutionizing Semiconductor Manufacturing


The dominant technological theme of the year is unmistakably clear: artificial intelligence (AI) is no longer a distant future, but a transformative present. From the startling capabilities of conversational ChatGPT to the seamless navigation of autonomous vehicles, AI is demonstrating an unprecedented ability to manage complexity and enhance decision-making processes. This wave of innovation raises the question: how can the semiconductor industry, which stands at the heart of technological progress, leverage AI to navigate its own intricate challenges?

Complexity-driven Challenges

Semiconductor wafer fabs are marvels of modern engineering, embodying a complexity that rivals any known man-made system. These intricate networks of toolsets and wafer pathways require precision and adaptability far beyond conventional management methods. The difficulty of this task is compounded by current challenges that threaten the fab's dynamic pace: a protracted shortage of skilled labor, increasingly advanced product designs, and the ever-present volatility of the supply chain.

The latest generation of products is the pinnacle of complexity, with production processes that involve thousands of steps and incredibly intricate constraints. This complexity is not just a byproduct of design; it is an inherent challenge in scaling up production while keeping costs within reasonable limits.

The semiconductor supply chain is equally complicated and often susceptible to disruptions that are becoming all too common. In this context, the requirement for skilled labor is more pronounced than ever. Running fab operations effectively demands a workforce that is not just technically skilled but also capable of innovative thinking to resolve competing objectives, improve processes, and extract more value. That is no small task in an environment already brimming with complexity.

The Need for AI in Semiconductor Manufacturing

As we delve into Industry 4.0, we find ourselves at a crossroads. The software solutions of today, while advanced, are not the panacea we once hoped for. The status quo has simply reshuffled the problems we face; we've transitioned from relying on shop floor veterans' tacit knowledge and intuition to a dependency on people who oversee and maintain the data in digital systems. These experts manning the screens are armed with MES, reporting, and legacy scheduling software, all purporting to streamline operations. Yet, the core issue remains: these systems still hinge on human intelligence to steer the intricate workings of the fabs.

At the core of these challenges lies a common denominator: the need for smarter, more efficient, and autonomous systems that can keep pace with the industry's rapid evolution. This is precisely where AI enters the frame, poised to address the shortcomings of current Industry 4.0 implementations. AI is not just an upgrade; it is a paradigm shift. It has the capability to assimilate the nuanced knowledge of experienced engineers and operators working in a fab and translate it into sophisticated, data-driven decisions. By integrating AI, we aim to break the cycle of displacement and truly solve the complex problems inherent in wafer fab management. The potential of AI is vast, ready to ignite a revolution in efficiency and strategy that could reshape the very fabric of manufacturing.

Building AI for the Semiconductor Industry

Flexciton is the first company to build an AI-driven scheduling solution on the back of many years of scientific research and successfully implement it in a semiconductor production environment. So how did we do it?

Accessing the Data

The foundation lies in data – clean, accessible, and comprehensive data. Much like the skilled engineers who intuitively navigate the fab's labyrinth, AI requires a map – a dataset that captures the myriad variables and unpredictable nature of semiconductor manufacturing.

Despite the availability of necessary data within fabs, it often remains locked in silos or relegated to external data warehouses, making it difficult to access. Yet, partnerships with existing vendors can unlock these valuable data reserves for AI applications.

Finding People Who Can Build AI

The chips that enable AI are designed and produced by the semiconductor industry, but AI-driven applications are developed by people who are not typically found within the sector. Those developers tend to gravitate toward powerhouses like Google and Amazon or deep-tech companies working on future-proof technologies. This reveals a broader trend: the allure of semiconductors has diminished for the emerging STEM talent pool, overshadowed by the glow of places where state-of-the-art tech is being built. Embracing this drift, Flexciton planted its roots in London, a nexus of technological evolution akin to Silicon Valley. This strategic choice has enabled us to assemble a diverse and exceptional team of optimization and software engineers representing 22 nationalities among just 43 members. It is a testament to our commitment to recruiting premier global talent to lead the charge in tech development, aiming to revolutionize semiconductor manufacturing.

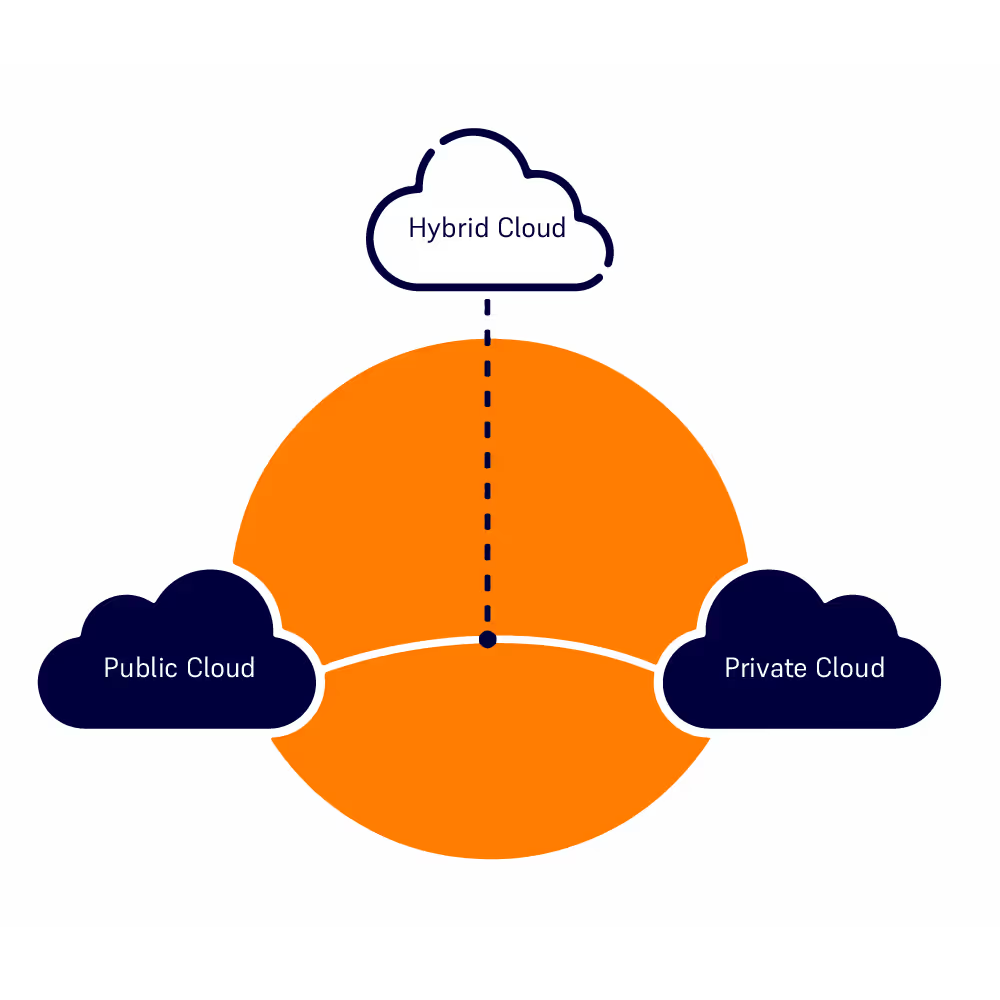

AI Needs Cloud

The advent of cloud computing marks a significant milestone in technological evolution, enabling the development and democratization of technology based on artificial intelligence. At the core of AI development lies the need for vast computing power and extensive data storage capabilities. The cloud environment offers the ability to rapidly provision resources at a relatively low cost. With just a few clicks, a new server can be initialized, bypassing the traditional complexities of hardware installation and maintenance typically handled by IT personnel.

Furthermore, the inherent scalability of the cloud means that not only can we meet our current computing needs but we can also seamlessly expand our resources as new technologies emerge. This flexibility provides collaborating fabs with the latest technology while avoiding the pitfalls of significant initial investment in equipment that requires regular maintenance and eventually becomes obsolete.

Security within the cloud is an area where misconceptions abound. As a cloud-first company, we often address queries about data security. It is crucial to understand that being cloud-first does not mean we possess your data. In fact, your data is securely stored in Microsoft Azure data centers, which are bastions of security. Microsoft's commitment to cyber security is reflected in its employment of more than 3,500 professionals whose job is to ensure that its data centers are robust fortresses for data, offering peace of mind that often surpasses the security capabilities of private data centers.

Effective Deployment of AI in Fabs

The introduction of AI-driven solutions within a fab environment entails a significant change in existing processes and workflows and often results in decision-making that diverges from the traditional. This can unsettle teams and requires a comprehensive change management strategy. Therefore, the implementation process must be planned as a multifaceted endeavor that is deeply rooted in human collaboration.

A successful deployment begins with assembling the right team—a blend of industrial engineers with intimate knowledge of fab operations, and technology specialists who underpin the AI infrastructure. This collective must not only include fab management and engineers but also those who are the lifeblood of the shop floor—individuals who intimately understand the fab's heartbeat.

When it comes to actual deployment, the process is iterative and data-centric. Setting clear objectives is pivotal. The AI must be attuned to the fab's goals, be it enhancing throughput or minimizing cycle times. Often, the first output may not align with operational realities, a clear illustration of the AI adage that the quality of input data dictates the quality of output. It is at this juncture that the expertise of fab professionals becomes crucial: scrutinizing and correcting the data, and refining the schedules until they align with practical fab dynamics. With objectives in place and a live scheduler operational, the system undergoes rigorous in-fab testing.

Change management is the lynchpin in this transformative phase. The core of successful AI adoption is rooted in the project team's ability to communicate the 'why' and 'how'—to educate, validate, and elucidate the benefits of AI decisions that, while novel, better align with overarching business goals and drive performance metrics forward.

Making AI Understandable and Manageable

The aversion to the enigmatic 'black box' is universal. In the world of fabs, it can be a barrier to trust and adoption: operational teams must feel empowered to both grasp and guide the underlying mechanisms of AI models.

We made a considerable effort to refine our AI scheduler by incorporating a feature that lets users both influence the objectives the scheduler is tasked to achieve and understand its decisions. Once a schedule is created, engineers can inspect and interrogate its decisions to understand why the scheduler made them.

Case Studies: Success Stories of AI Deployment

I firmly believe that we are on the cusp of a transformative era in semiconductor manufacturing, one where AI-driven solutions will yield unprecedented benefits. To illustrate this, let's delve into some practical case studies.

The first involves implementing Flexciton's AI scheduler within the complex diffusion area of a wafer fab—a zone notorious for its intricate processes. We aimed to achieve a trifecta of goals: maximize batch sizes, minimize rework, and significantly reduce reliance on shop floor decision-making. The challenge was magnified by the fab's limited IT and IE resources at the time of deployment. Partnering with an existing vendor whose systems were already integrated and had immediate access to essential data facilitated a rapid and efficient implementation with minimal engagement of the fab's IT team. This deployment led to remarkable improvements: clean tools saw 25% bigger batches, and rework in the diffusion area was slashed by 36%.

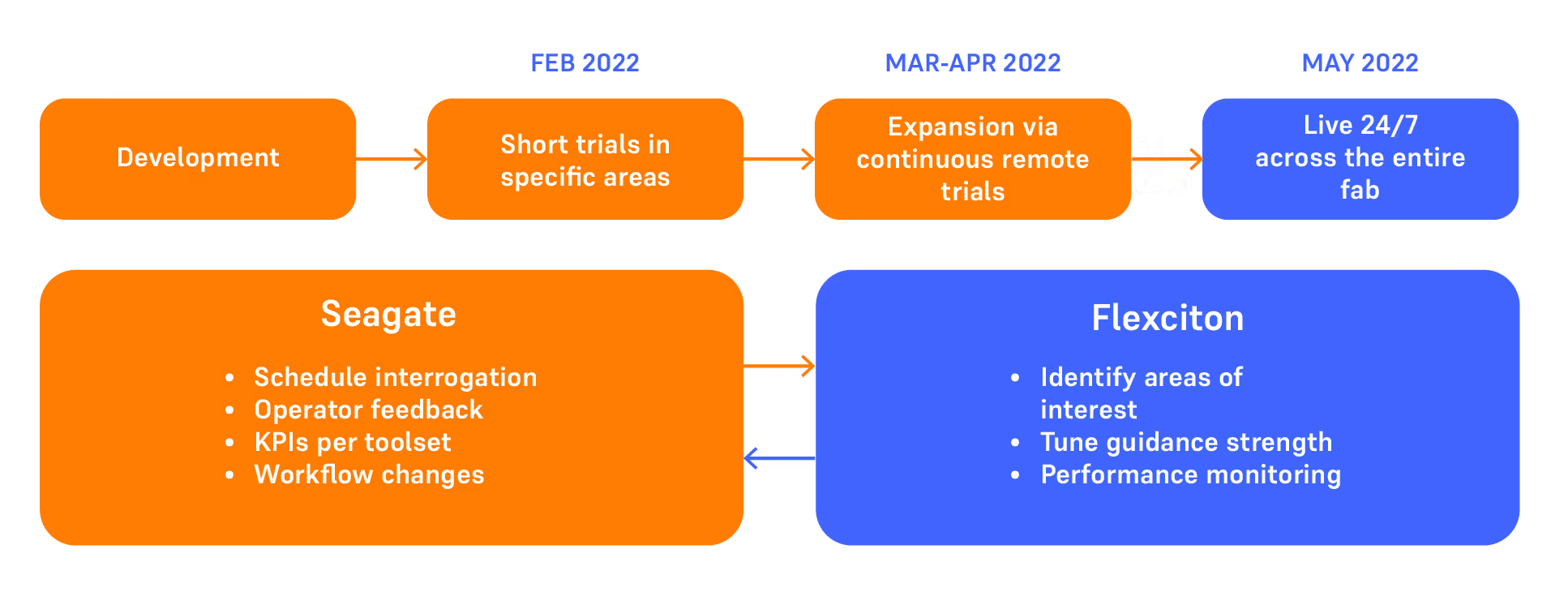

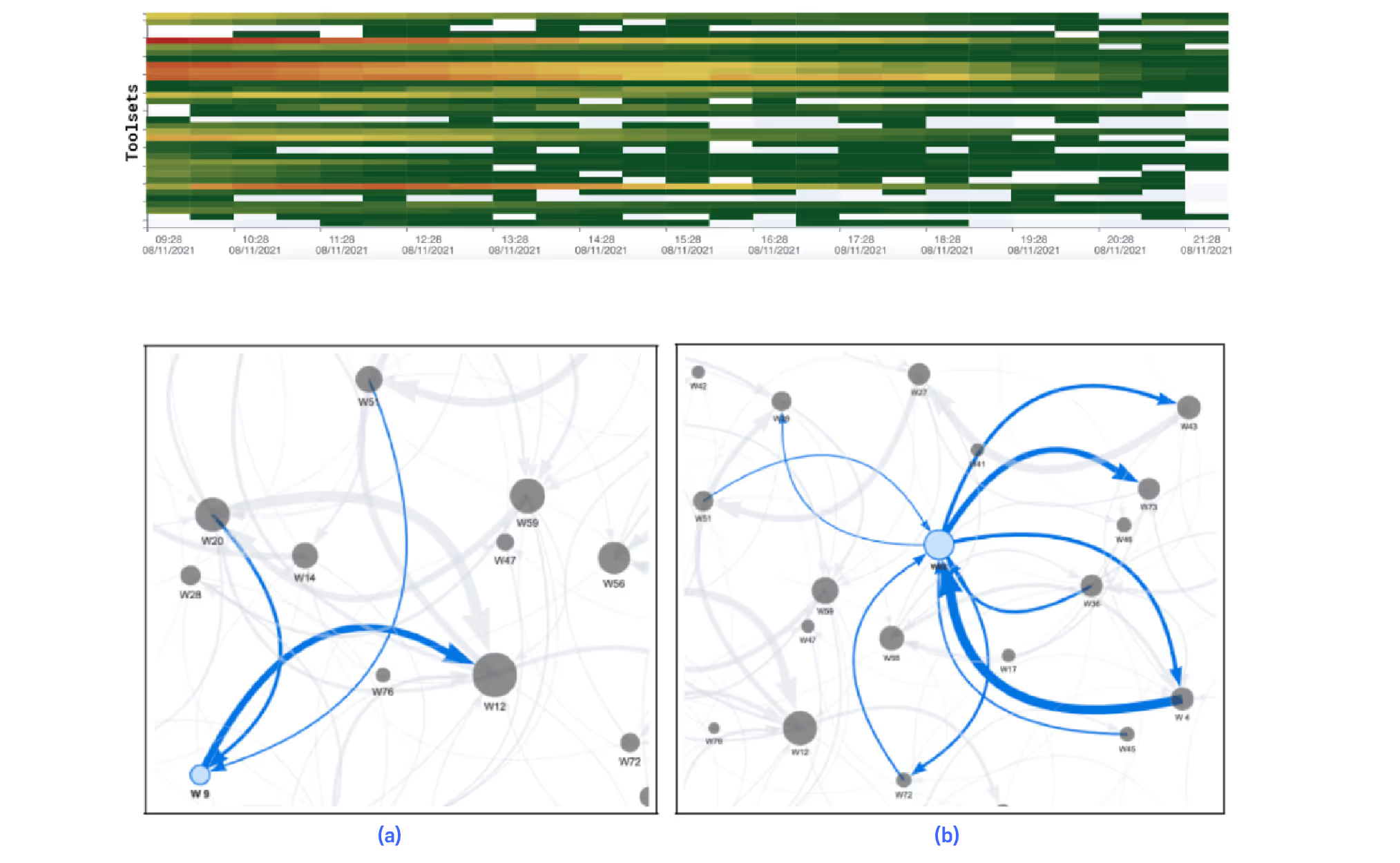

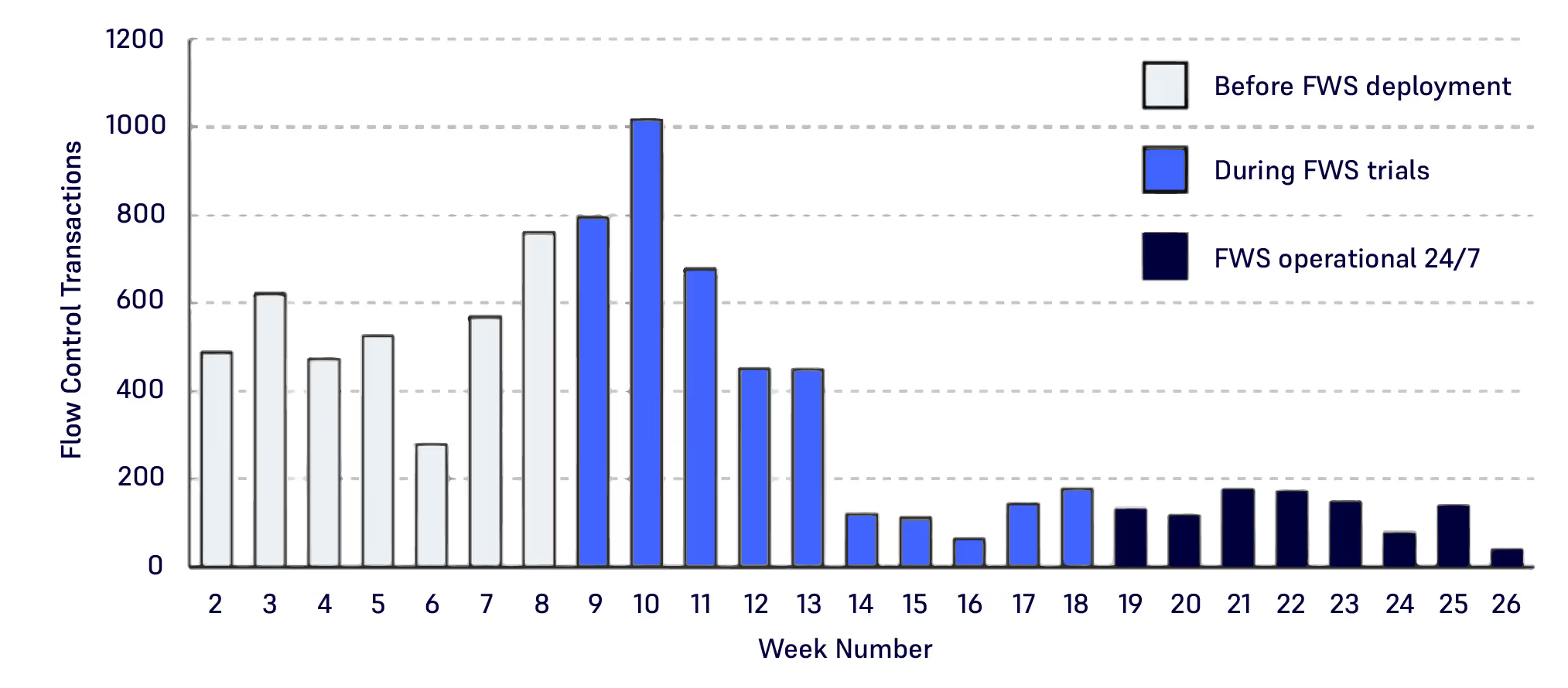

Another case study details a full fab deployment, where the existing rules-based scheduling system was replaced with Flexciton's AI scheduler. The goal was to enhance capacity and reduce cycle times. The deployment was staged, beginning with simpler areas such as metrology tools, progressing through the photolithography area, and eventually scaling to the entire fab, yielding a global optimization of work-in-process (WIP) flow. The result was a significant increase in throughput and a staggering 75% reduction in manual flow control transactions, a testament to the AI's ability to autonomously optimize WIP flow and streamline operations.

The Autonomous Future of Semiconductor Manufacturing

In closing, the semiconductor industry stands on the precipice of a new era marked by autonomy. AI technology, with its capacity to make informed decisions without human input, has demonstrated not only the potential for improved KPIs but also a significant reduction in the need for human decision-making. The future of semiconductor manufacturing is one where AI-driven solutions consistently deliver superior production results, alleviating the human workload and steering fabs towards their objectives with unprecedented precision and efficiency.

As we embrace this autonomous future, it becomes clear that the integration of AI in semiconductor manufacturing is not just an enhancement of the status quo but a reinvention of it. With each fab that turns to AI, the industry moves closer to realizing a vision where technology and human ingenuity converge to create a landscape of limitless potential.

Author: Jamie Potter, CEO and Cofounder, Flexciton

The Flex Factor with... Will


Introducing Will, Lead Backend Engineer at Flexciton. Explore his daily tasks, ranging from crafting backend architecture to overseeing the codebase and managing technical debt in this month's edition of The Flex Factor.

Tell us what you do at Flexciton?

I am a lead backend engineer and the software development practice lead. My work involves designing the backend architecture, managing the codebase structure and technical debt, pushing for best practices across the wider engineering team and contributing features to my delivery team.

What does a typical day look like for you at Flexciton?

I usually start my morning by scanning through the production logs from our deployments to see if anything looks suspect and needs investigating. From there, it depends on what I am focused on that week, which varies a fair amount. The majority of my time is spent coding features or doing large-scale design work. Some days I spend refactoring and restructuring our codebase, and occasionally I get to work in the devops or optimisation space, which I always look forward to. In any given week there will be a handful of ongoing projects at various stages, from architectural designs to software development practice work that needs to be structured and prioritised. No day goes by without me writing at least some code, but there is a fair amount of admin work to do as well.

What do you enjoy most about your role?

The diversity of the work I get to do. My work often overlaps with optimisation and devops, so I can find myself speaking to lots of different people throughout the day. There are many opportunities to dive deeper into a topic, with various team members willing to support you. Since joining, I have worked with Terraform, CI pipelines, infrastructure, hardware configuration, optimisation, frontend, customer deployments, database optimisation and management, the application backend and much more.

If you could summarise working at Flexciton in 3 words, what would they be?

Collaborative, Challenging, Diverse.

What emerging technology do you believe will have the biggest impact on our lives in the next decade?

I think the next decade is going to be made great by lots of smaller contributions across technology, in both hardware and software. I don’t have much hope for AGI / useful AGI this decade, but there is a lot going on to be excited about. From a hardware perspective, we have companies making huge progress in designing chips specifically for model training, and at the other end of the spectrum, more companies are putting satellites into orbit to enable global access to high-speed internet. AI has fuelled the search for stable protein and crystal structures, pushing the frontiers of new medicines and treatments, as well as materials science. Memory safety in programming languages has started to draw attention from governments too, with languages like Rust (and potentially Hylo in the future) likely to lead for memory-safe applications. It will be interesting to see how the landscape changes over the next few years and to see companies start to shift their codebases over.

What’s the best piece of advice you’d give to someone starting a career in the tech industry today?

I think the best piece of advice would be to throw away any notion of imposter syndrome from the start. Programming, and tech in general, is massive, and it's certainly true that the more you know, the more you realise you do not know. Everyone will take a different path throughout their career and find themselves expert in one topic and (momentarily) hopeless in another. When topics you know nothing about come along, it's best to embrace that and start finding opportunities to learn. It is important to convince yourself that while you may not be able to learn everything, you could learn anything, and to find joy in accruing that knowledge as you progress in your career. Bearing this in mind, I would say come into tech because you love it and because you want to learn. There is such a good community across programming languages and industries that anyone who wants to learn can easily find help.

Tell us about your best memory at Flexciton?

I can’t think of one great memory that stands out, but what makes Flexciton great is all the little things that happen week after week such that by Sunday evening, I am looking forward to speaking with my team in Monday standup.

C is for Cycle Time [Part 2]



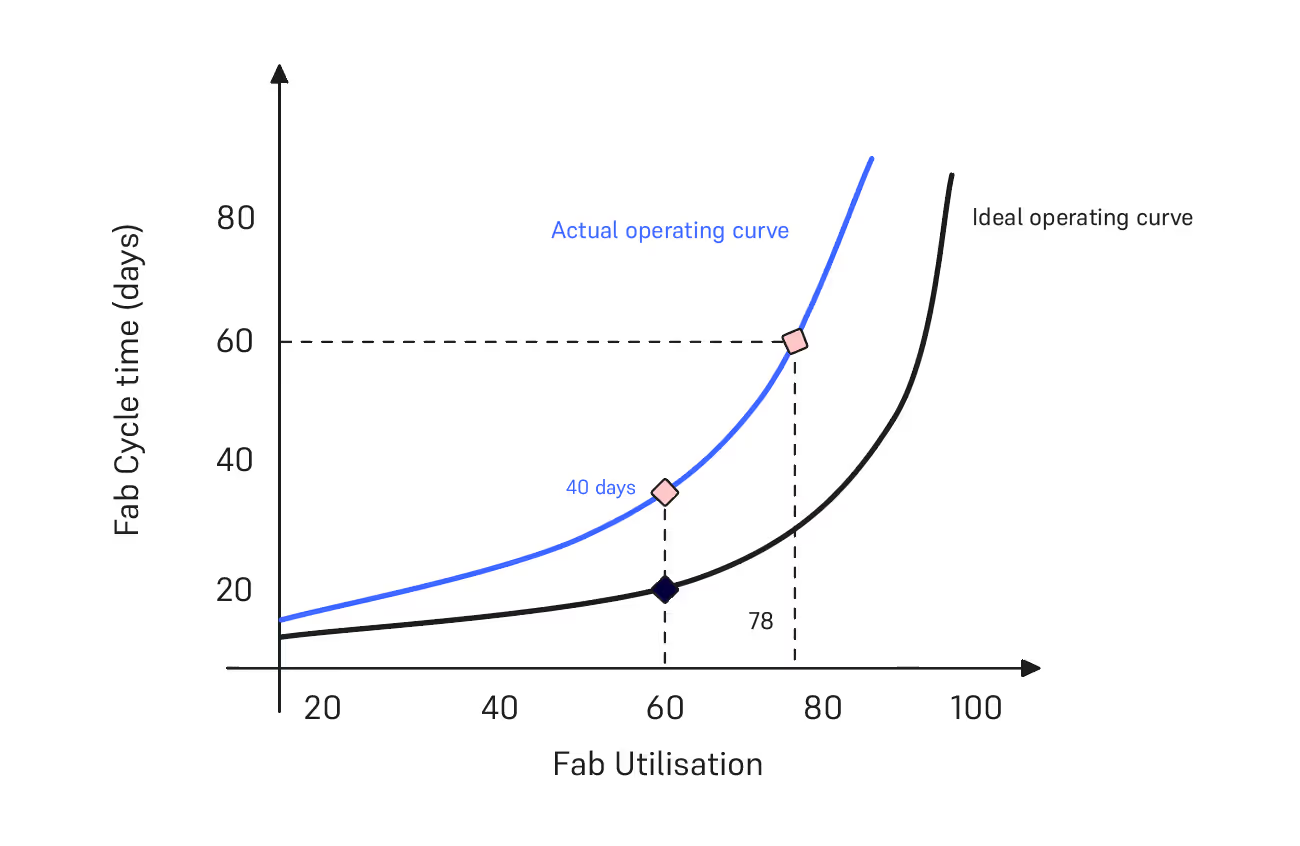

In the first part of 'C is for Cycle Time', we explored the essence of cycle time in front-end wafer fabs and its significance for semiconductor companies. We introduced the operating curve, which illustrates the relationship between fab cycle time and factory utilization, as well as the power of predictability and the ripple effects cycle time can have across the supply chain.

In part 2, we will explore strategies to enhance cycle time through advanced scheduling solutions, contrasting them with traditional methods. We will use the operating curve, this time to demonstrate how advanced scheduling and operational factors, such as product mix and factory load, can significantly impact fab cycle time.

How wafer fabs can improve cycle time

By embracing the principles of traditional Lean Manufacturing, essentially focused on reducing waste in production, cycle time can be effectively reduced [1]. Here are a few strategies that can help improve fab cycle time:

- Improving maintenance strategies: for example, moving from reactive to more proactive maintenance can improve cycle time through fewer breakdowns and more predictable tool availability [2].

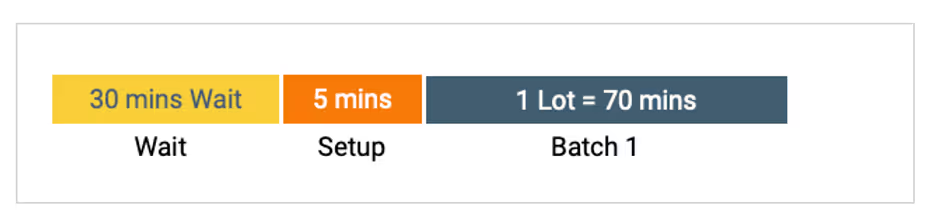

- As noted in part 1, minimizing wasted time in batch formation and reducing the frequency of rework due to defects improves cycle time.

- Purchasing faster tools, although this can be a time-consuming and costly undertaking: an in-facility expansion may take up to a year, while bringing a new facility online could take up to three years [3].

- Establishing optimal batching in diffusion poses a considerable challenge, given the intricate process constraints within the diffusion area, such as timelinks, as we’ve explained in a recent blog.

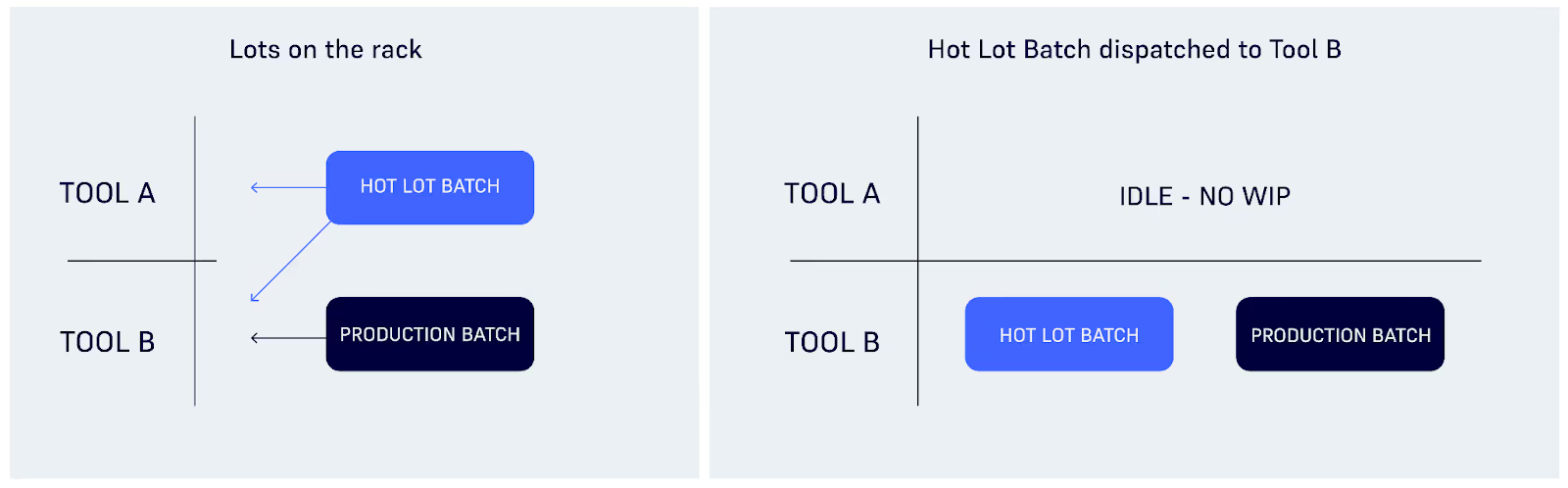

- Balancing cycle time of hot lots with average fab cycle time. Fabs often assign higher priority to hot lots, which can negatively impact the average cycle time of production lots [4].

- Developing the skills of existing operators and expediting the onboarding process for new operators could be another means of reducing variability in production, thus impacting cycle time.

The implementation of an advanced AI scheduler can facilitate most of the strategies noted above, leading to an improvement in cycle time with significantly less effort demanded from a wafer fab compared to alternatives such as acquiring new tools. In the next sections we are going to see how this technology can make your existing tools move wafers faster without changing any hardware!

Applying an advanced AI scheduler to improve cycle time

In this section, we delve into how an advanced AI scheduler (AI Scheduler) can maintain factory utilization while reducing cycle time.

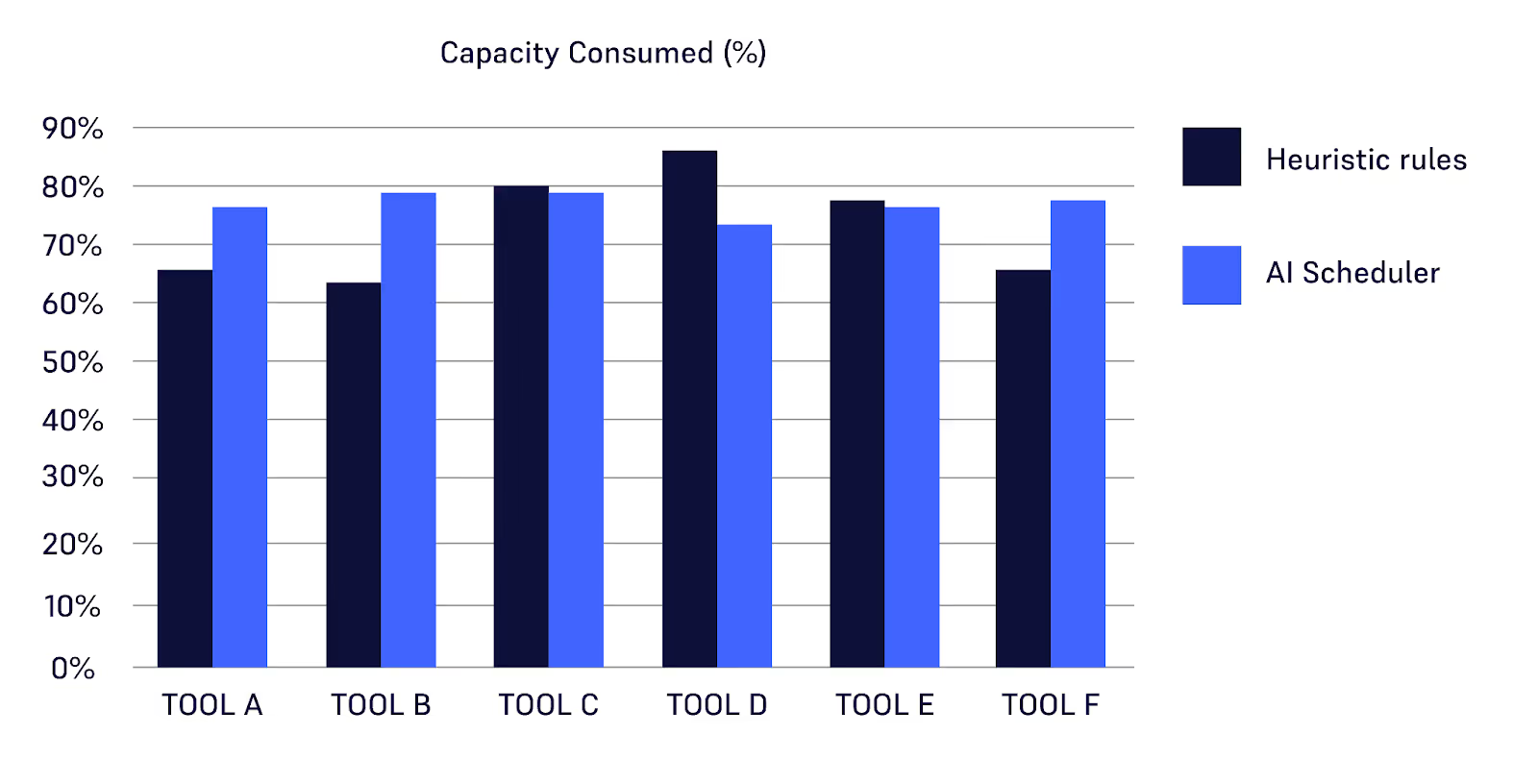

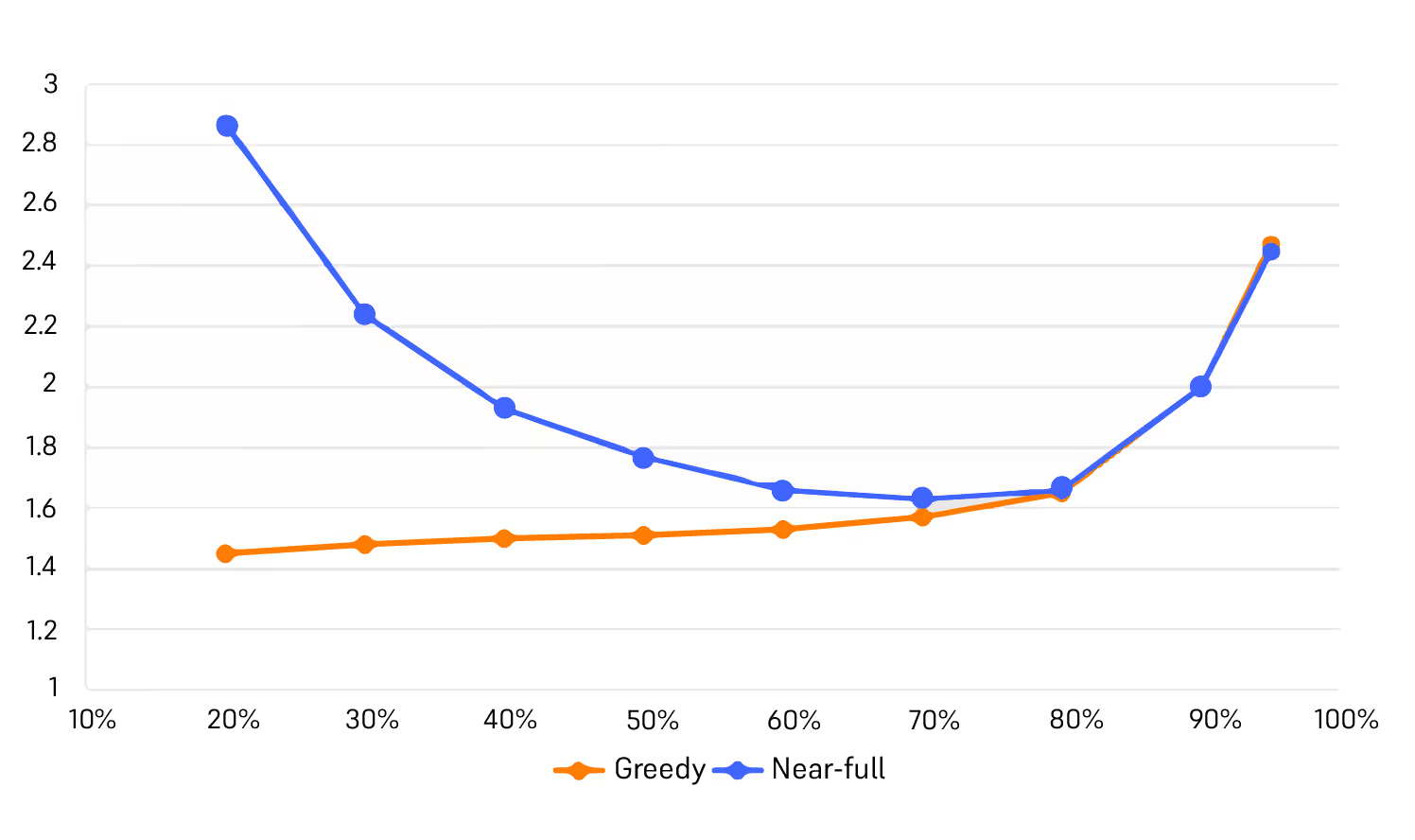

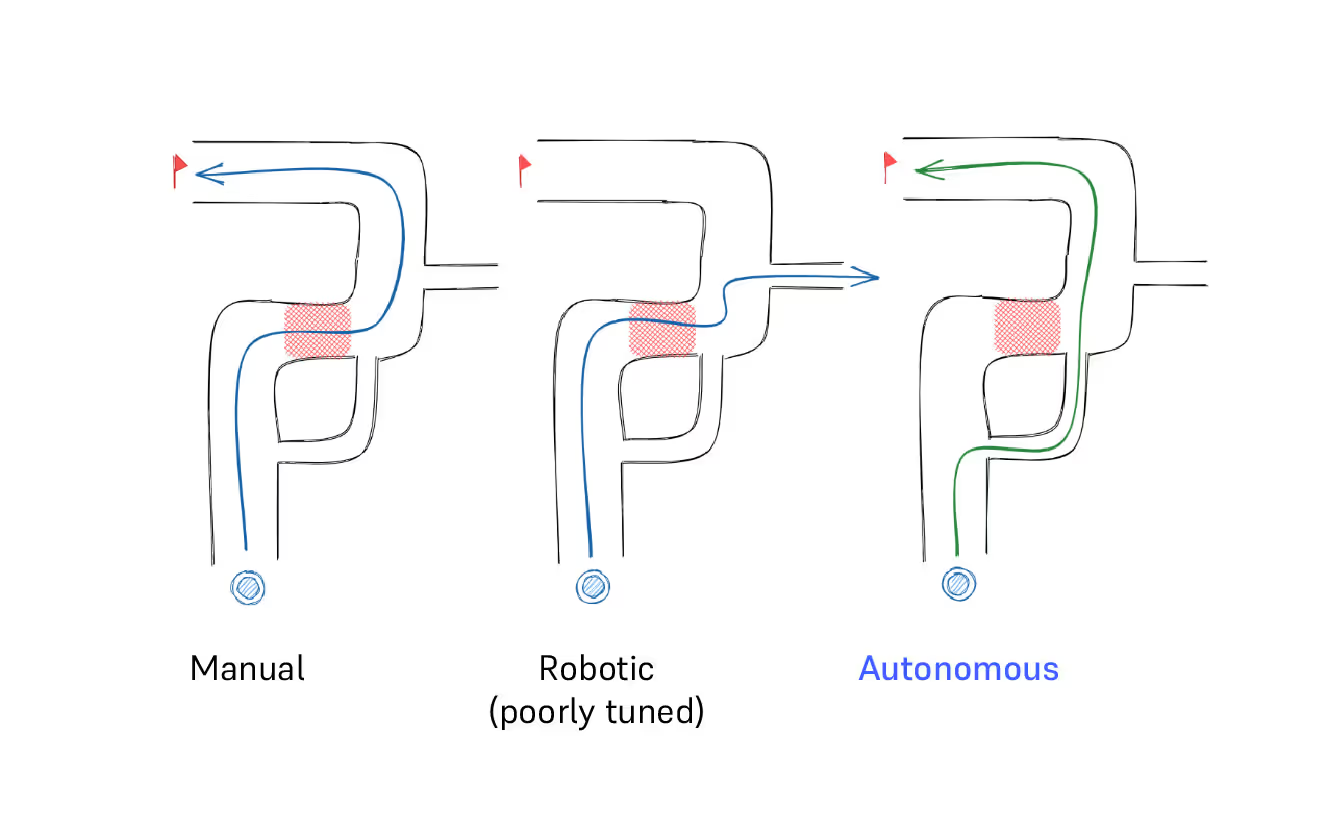

First, let’s define what an AI Scheduler is. It is a piece of fab software whose core engine is powered by AI techniques such as mathematical optimization. It can adapt to ongoing real-time changes in fab conditions, including variations in product mix, tool downtimes, and processing times. Its decisions can achieve better fab objectives, such as improved cycle time, than heuristic-based legacy scheduling systems. More aspects of an advanced AI scheduler can be found in our previous article, A is for AI. The AI Scheduler schedules fab production in alignment with lean manufacturing principles by optimally sequencing lots and strategically batching and assigning them to tools.

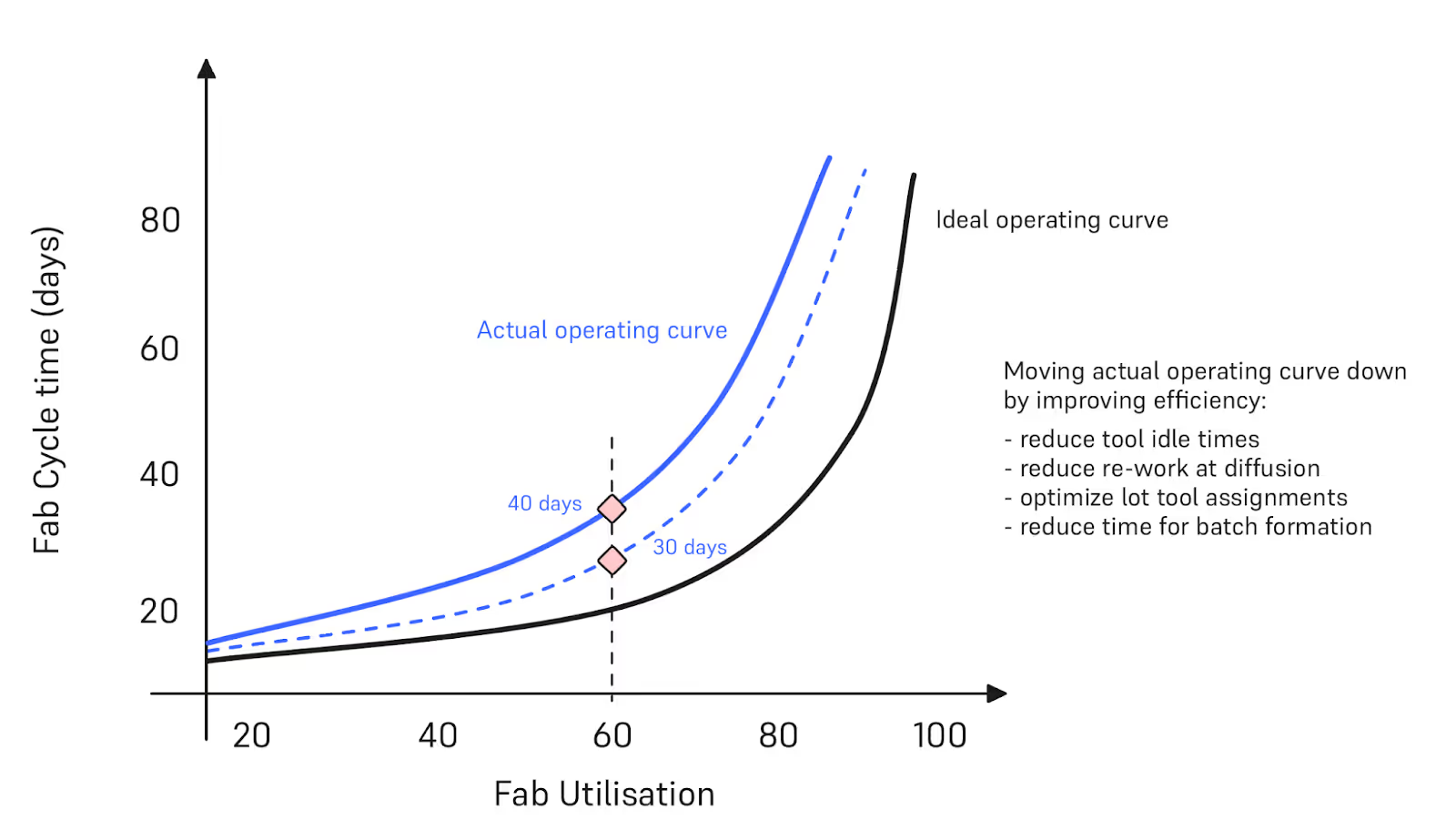

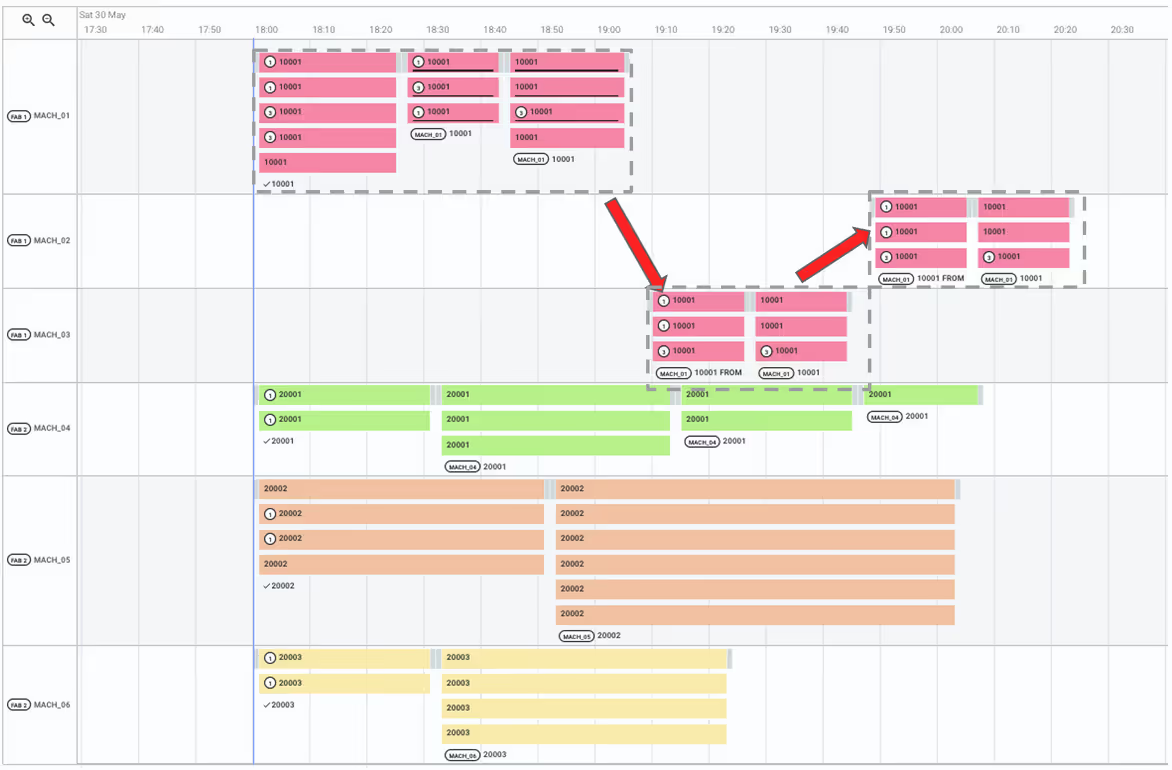

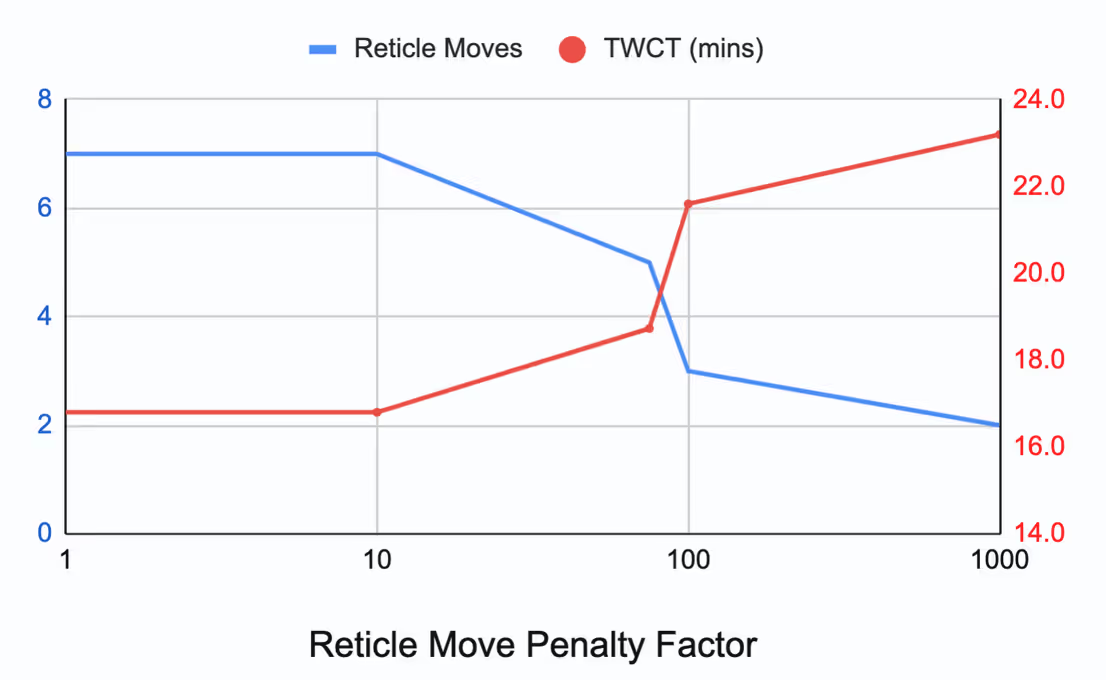

Figure 5 shows an example of how an AI Scheduler can shift cycle time from the original operating curve closer to the theoretical operating curve. As a result, cycle time is now 30 days at 60% factory utilization. This can be accomplished by enhancing fab efficiency through measures such as minimizing idle times, reducing rework, and mitigating variability in operations, among other strategies. In the next sections, we will use two examples, from metrology and diffusion, to show how optimal scheduling improves cycle time.
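The shift in Figure 5 can be illustrated with a common queueing approximation for the operating curve, CT = RPT × (1 + α·u/(1 − u)), where RPT is the raw process time, u is utilization and α lumps together arrival and process variability. This single-server, Kingman-style formula and every parameter below are assumptions, chosen so the improved case lands on the 30 days quoted above; they are not derived from Figure 5. In this toy model, less idle time, less rework and less variability are all represented simply as a lower α, which pulls the curve down toward the theoretical one.

```python
def operating_curve(utilization, raw_process_time, alpha):
    """Approximate cycle time at a given utilization.

    Uses CT = RPT * (1 + alpha * u / (1 - u)); alpha lumps together arrival
    and process variability (lower alpha = less variability).
    """
    if not 0.0 <= utilization < 1.0:
        raise ValueError("utilization must be in [0, 1)")
    return raw_process_time * (1.0 + alpha * utilization / (1.0 - utilization))


RPT_DAYS = 10.0  # hypothetical raw process time for the whole route
for label, alpha in [("legacy", 3.0), ("optimized", 4.0 / 3.0)]:
    ct = operating_curve(0.60, RPT_DAYS, alpha)
    print(f"{label}: {ct:.1f} days at 60% utilization")
# legacy: 55.0 days at 60% utilization
# optimized: 30.0 days at 60% utilization
```

At zero utilization both curves collapse to the raw process time; the gap between them widens rapidly as utilization approaches 100%, which is why variability reduction matters most in heavily loaded fabs.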

Reducing queuing times and tool utilization variability in metrology

Many wafer fabs employ a tool pull system for dispatching. In this approach, operators typically decide which idle tool to attend to, based either on their experience or, at times, at random. Once at the tool, they select the highest-priority lots from those available for processing. A drawback of this system is that operators don't have a comprehensive view of the compatibility between the lots awaiting processing, the lots in transit to the rack, and the available tools. This limited perspective can lead to longer queuing times and underutilized tools, as evident in Figure 6.
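The limitation of the local pull view can be shown with a deliberately tiny, hypothetical example: two idle tools and two waiting lots, one of which is qualified only on tool T1. The `pull_dispatch` function mimics an operator who visits tools in a fixed order and takes the highest-priority qualified lot, while `global_dispatch` looks at all lots and tools together. All names, priorities and qualifications are made up, and brute-force enumeration is only viable at toy scale; a production scheduler would use mathematical optimization instead.

```python
from itertools import permutations

# Hypothetical qualification matrix: which tools each waiting lot may run on.
qualified = {
    "LOT-1": {"T1", "T2"},  # highest priority, can run on either tool
    "LOT-2": {"T1"},        # only qualified on T1
}
priority = {"LOT-1": 2, "LOT-2": 1}  # higher number = more urgent
tools = ["T1", "T2"]


def pull_dispatch(tool_order):
    """Operator visits idle tools in the given order and takes the
    highest-priority qualified lot still waiting (local view only)."""
    waiting = set(qualified)
    assignment = {}
    for tool in tool_order:
        options = [lot for lot in waiting if tool in qualified[lot]]
        if options:
            lot = max(options, key=priority.get)
            assignment[tool] = lot
            waiting.remove(lot)
    return assignment


def global_dispatch():
    """Try every lot-to-tool pairing and keep the one that starts the most
    lots, breaking ties by total priority (fine for a toy-sized problem)."""
    lots = list(qualified)
    best, best_score = {}, (-1, -1)
    slots = lots + [None] * len(tools)  # None lets a tool stay idle
    for combo in permutations(slots, len(tools)):
        assignment = {tool: lot for tool, lot in zip(tools, combo)
                      if lot is not None and tool in qualified[lot]}
        score = (len(assignment), sum(priority[lot] for lot in assignment.values()))
        if score > best_score:
            best, best_score = assignment, score
    return best


print(pull_dispatch(["T1", "T2"]))  # {'T1': 'LOT-1'}
print(global_dispatch())            # {'T1': 'LOT-2', 'T2': 'LOT-1'}
```

The operator's choice at T1 is locally sensible, but it leaves T2 idle while LOT-2 keeps queuing; the global view starts both lots at once.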

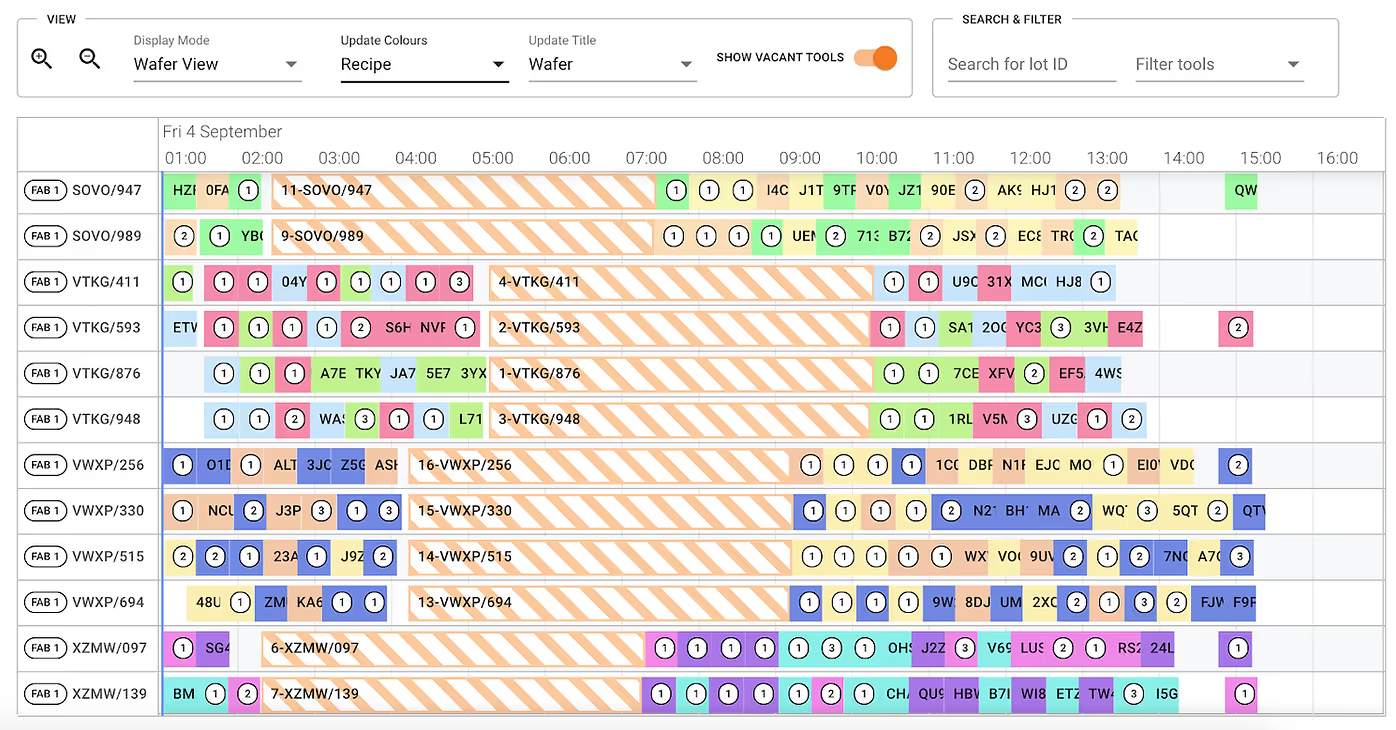

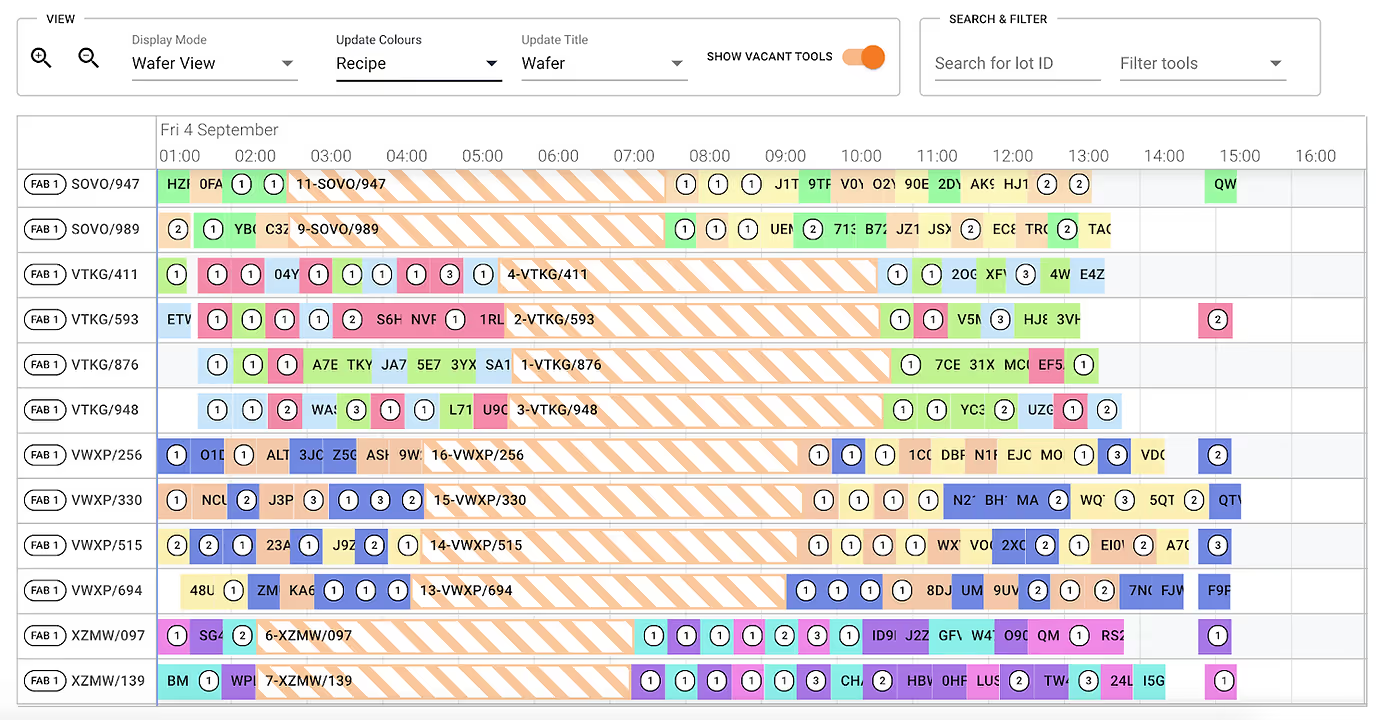

An AI Scheduler addresses these inefficiencies. By offering an optimized workflow, it not only shortens the total cycle time but also minimizes variability in tool utilization. This in turn indirectly improves the cycle time of the toolset and overall fab efficiency. For example, Seagate deployed an AI Scheduler to photolithography and metrology bottleneck toolsets that were impacting cycle time. The scheduler reduced queue time by 4.3% and improved throughput by 9.4% at the photolithography toolset [5]. In the metrology toolset, the AI Scheduler reduced variability in tool utilization by 75% which resulted in reduced cycle time too, see Figure 7 [6].
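Variability in tool utilization can be quantified in several ways. The sketch below assumes the population standard deviation of per-tool utilization as the metric, with made-up figures for four tools carrying the same total load. The cited Seagate work may define the metric differently, so the 80% computed here is purely illustrative and unrelated to the 75% reported above.

```python
from statistics import mean, pstdev


def utilization_spread(utilizations):
    """Population standard deviation of per-tool utilization (0-1 scale)."""
    return pstdev(utilizations)


# Hypothetical utilizations of four metrology tools carrying the same total load
before = [0.95, 0.40, 0.85, 0.30]
after = [0.70, 0.55, 0.65, 0.60]

reduction = 1 - utilization_spread(after) / utilization_spread(before)
print(f"mean before {mean(before):.3f}, after {mean(after):.3f}")
print(f"spread reduced by {reduction:.0%}")
# mean before 0.625, after 0.625
# spread reduced by 80%
```

The average utilization is identical in both cases; only the distribution of work across tools changes, which is what shortens queues at the overloaded tools.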

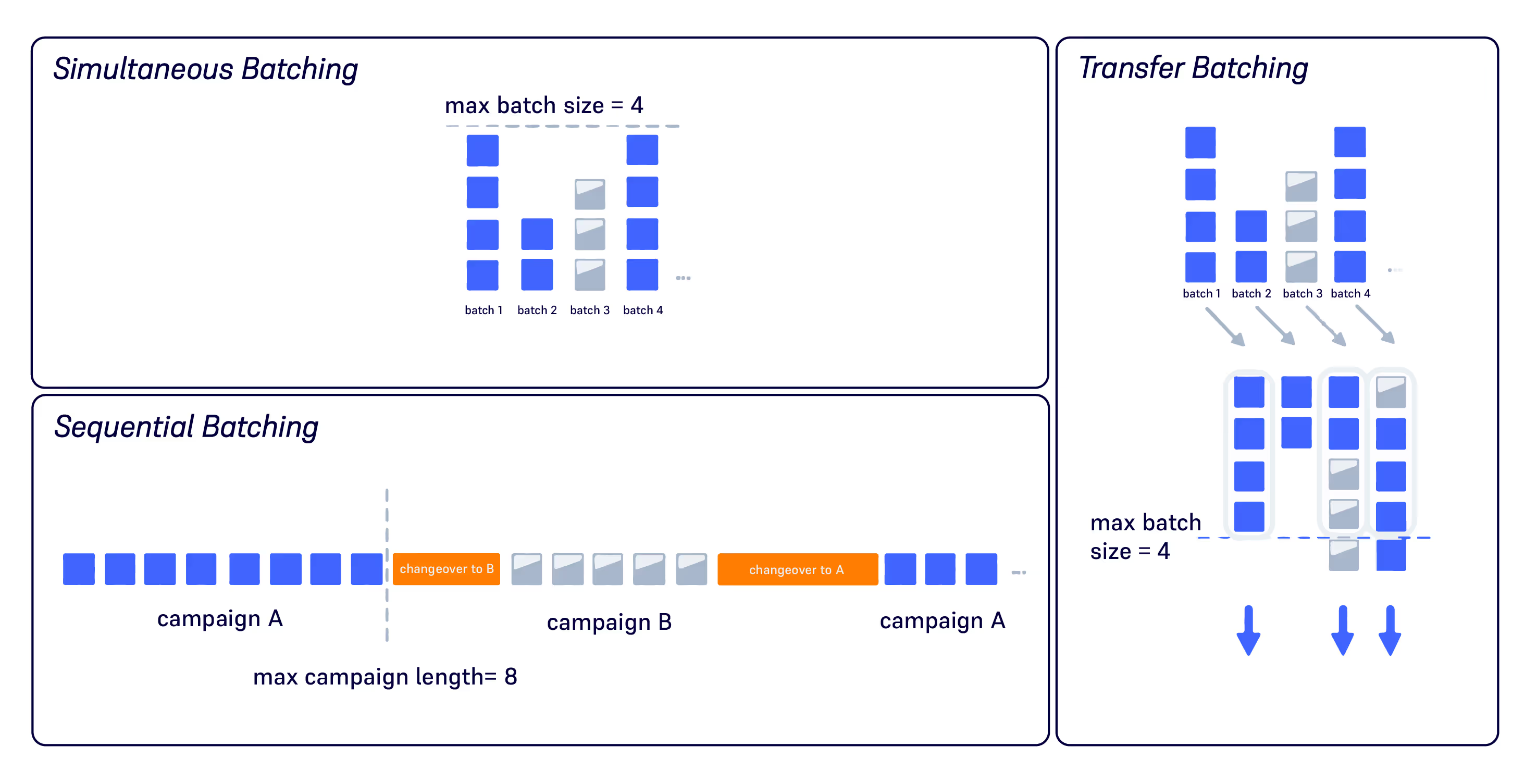

Improving cycle time and optimal batching in diffusion

Diffusion is a toolset that poses operational complexities due to its intricate batching options and several coupled process steps between cleaning and various furnace operations [7]. Implementing an AI Scheduler can mitigate many of these challenges, leading to reduced cycle time:

- Strategic Batching: Forming batches strategically can reduce total cycle time in diffusion. To maximize the benefit of an AI Scheduler, the fab should provide good-quality data.

- Automated Furnace Loading: Typically, diffusion loading is accomplished via a pull-system from the furnace. This means that operators would revisit the cleaning area to manually pick the best batches, based on upcoming furnace availability. This approach often demands substantial resources and time, thereby increasing cost or cycle time. The AI Scheduler curtails this time considerably, freeing up operators for other essential tasks, which indirectly may reduce cycle time elsewhere.

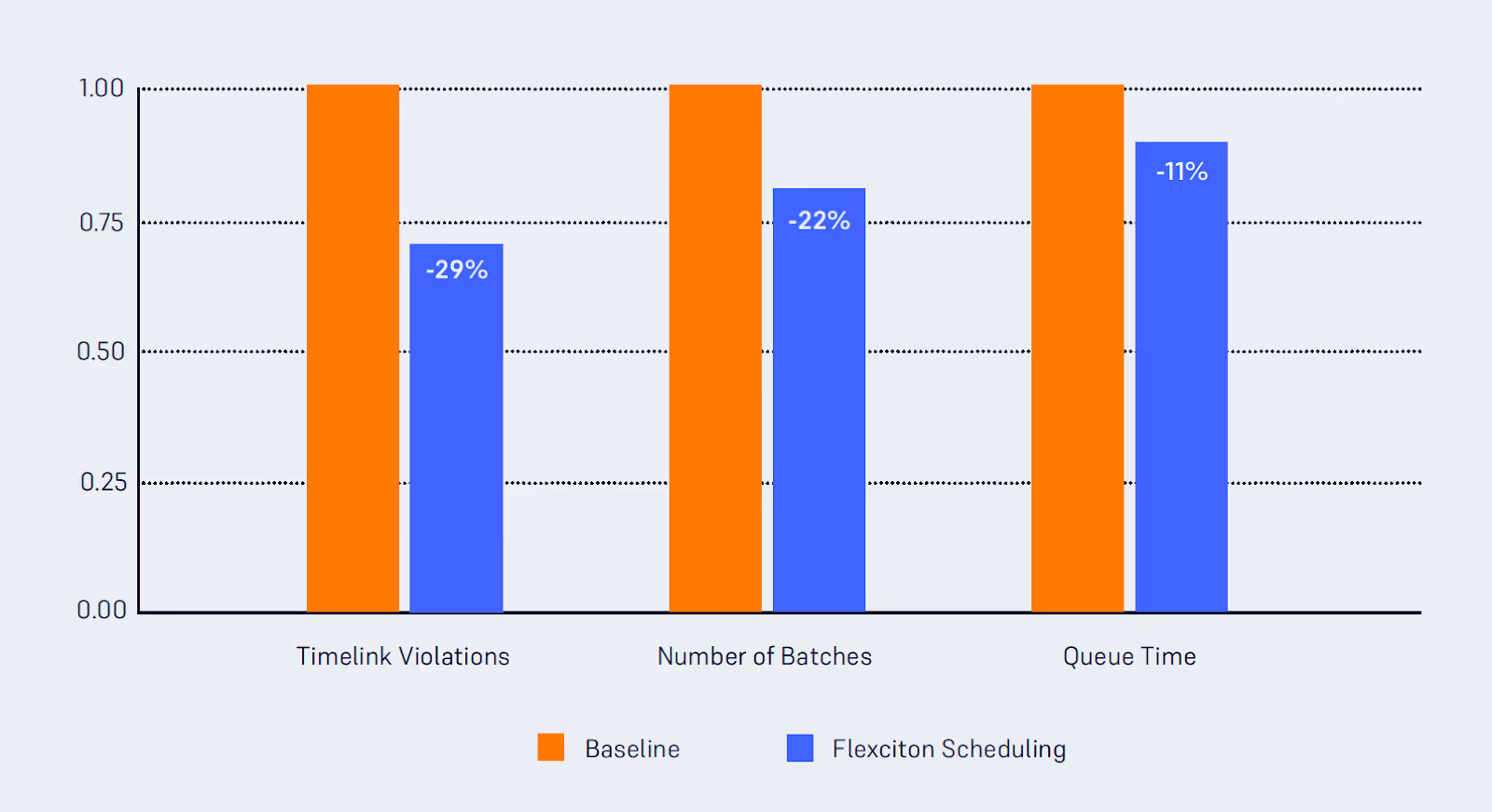

- Reduction of Timelink Violations: A recent pilot implementation of an AI Scheduler in diffusion at a Renesas fab underscored its effectiveness. As displayed in Figure 8, timelink violations were significantly reduced. This minimizes the need for rework, further cutting down cycle time, as explained earlier in the article.
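The batching and loading decisions described above can be sketched as a simple greedy heuristic: group waiting lots by furnace recipe, fill each batch with the lots closest to their timelink deadline, and order the batches by urgency. The `WaitingLot` record, the recipe names and the tiny capacity are hypothetical, and this heuristic is an illustrative stand-in, not the optimization an AI Scheduler performs. In particular, it ignores furnace availability and never considers waiting for more lots to fill a partial batch.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class WaitingLot:
    lot_id: str
    recipe: str       # furnace recipe; only lots with the same recipe can share a batch
    deadline: float   # hours: latest furnace start that still meets the timelink


def form_batches(lots, capacity):
    """Greedy sketch: group lots by recipe, fill each batch with the most
    urgent lots first, then order batches by their most urgent lot."""
    by_recipe = defaultdict(list)
    for lot in lots:
        by_recipe[lot.recipe].append(lot)
    batches = []
    for recipe_lots in by_recipe.values():
        recipe_lots.sort(key=lambda lot: lot.deadline)
        for i in range(0, len(recipe_lots), capacity):
            batches.append(recipe_lots[i:i + capacity])
    batches.sort(key=lambda batch: batch[0].deadline)
    return batches


waiting = [
    WaitingLot("A", "OX1", 5.0),
    WaitingLot("B", "OX1", 3.0),
    WaitingLot("C", "NIT", 4.0),
    WaitingLot("D", "OX1", 8.0),
]
batches = form_batches(waiting, capacity=2)  # capacity kept tiny for readability
print([[lot.lot_id for lot in batch] for batch in batches])
# [['B', 'A'], ['C'], ['D']]
```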

Maximizing the value of the AI Scheduler by integrating with other applications

In the above examples of photolithography, metrology and diffusion toolsets, the AI Scheduler can support operators to achieve consistently high performance. To enhance the efficiency of the scheduling system in fabs predominantly run by operators with minimal AMHS (Automated Material Handling Systems) presence, pairing the scheduler with an operator guidance application, as detailed in one of our recent blogs on user-focused digitalisation, can be a valuable approach. Such an application suggests the next task an operator should execute.

The deployment of an AI Scheduler should focus on bottleneck toolsets - specifically, those that determine the fab's cycle time. Reducing the cycle time of a toolset will be inconsequential if that toolset is not a bottleneck. Consequently, fabs should consider the following two approaches:

- Ensure the deployment of the AI Scheduler on the most critical toolsets to effectively address dynamic bottlenecks. This ensures that as bottlenecks shift, the AI Scheduler can promptly reduce the cycle time of the newly identified bottlenecked toolset. By doing so, fabs can consistently maintain a low cycle time.

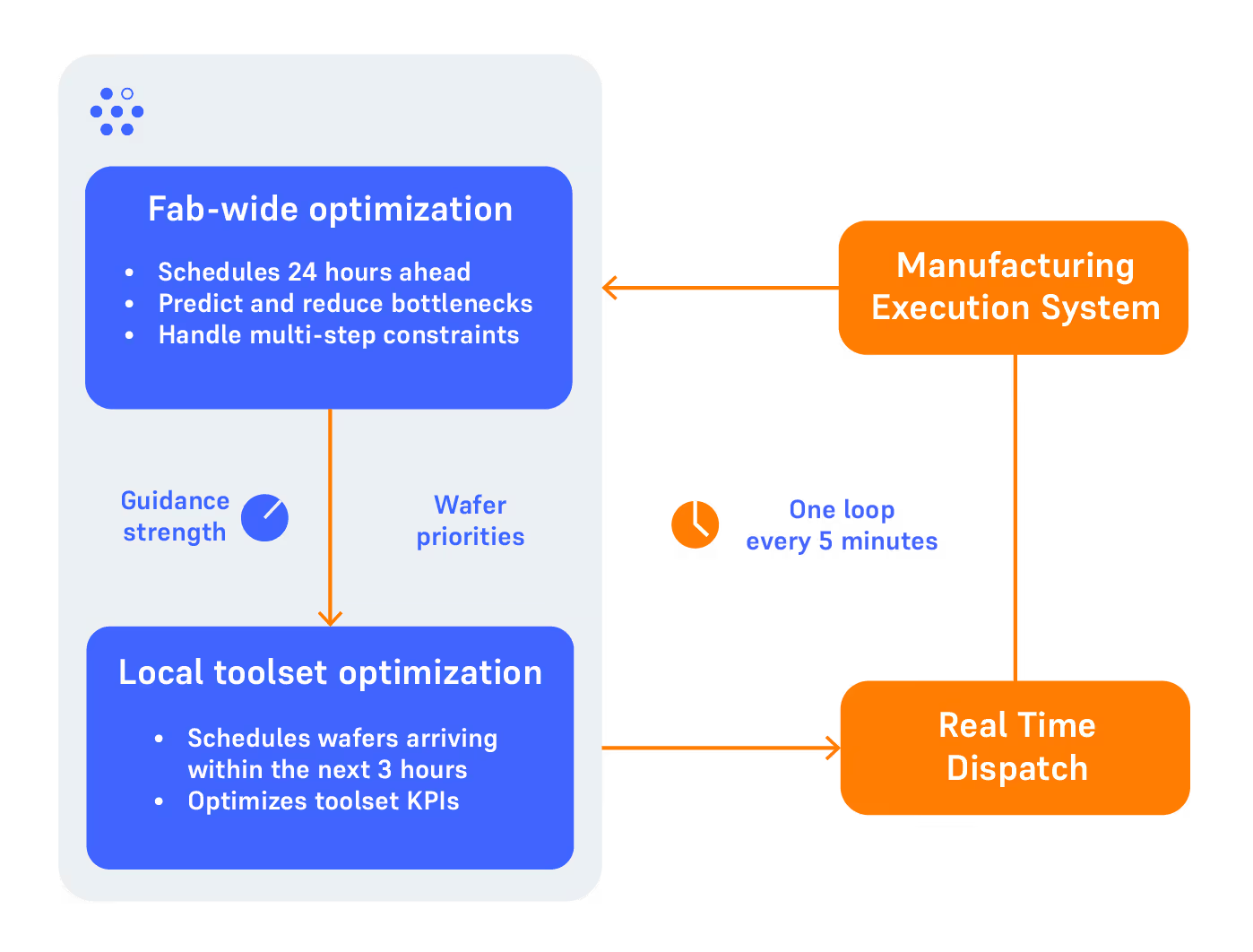

- The introduction of a global (fab-wide) application layer, such as a solution that looks across all toolsets and all lots in the line, can help coordinate all deployed AI Schedulers. This application should indicate which toolsets are bottlenecks, and it should also adjust lot priorities or production targets per toolset to ensure a smooth flow across the line. The interaction between global and local scheduling applications is discussed in recent papers [9] [10].
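One simple way to flag bottleneck toolsets is to compare required processing hours with available tool hours over a planning horizon. The sketch below assumes that utilization is a good-enough proxy for a bottleneck (queue length or WIP could also be used), and the 0.85 threshold and weekly figures are hypothetical. Re-running it whenever demand or tool availability changes is one way to track bottlenecks as they move.

```python
def toolset_utilization(demand_hours, available_hours):
    """Planned utilization per toolset: required processing hours divided by
    available tool hours over the same horizon."""
    return {ts: demand_hours[ts] / available_hours[ts] for ts in demand_hours}


def bottlenecks(demand_hours, available_hours, threshold=0.85):
    """Toolsets at or above the utilization threshold, most loaded first."""
    util = toolset_utilization(demand_hours, available_hours)
    flagged = [ts for ts, u in util.items() if u >= threshold]
    return sorted(flagged, key=util.get, reverse=True)


# Hypothetical weekly figures, in hours
demand = {"litho": 620.0, "metrology": 300.0, "diffusion": 540.0, "etch": 410.0}
available = {"litho": 672.0, "metrology": 504.0, "diffusion": 588.0, "etch": 504.0}
print(bottlenecks(demand, available))  # ['litho', 'diffusion']
```

Here litho (about 92%) and diffusion (about 92%, slightly lower) are flagged, while etch (about 81%) is not; a modest shift in product mix toward etch-heavy routes would be enough to change that list.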

Dealing with dynamic changes in the fab and understanding trade-offs between competing objectives

Another factor to consider is that the actual operating curve of the fab is moving constantly based on changes in the operating conditions of the fab. For example, if the product mix changes substantially, this may impact the recipe distribution enabled in each tool and subsequently, the fab cycle time vs factory utilization curve would shift. The operating curve can also change if the fab layout changes, for example when new tools are added.

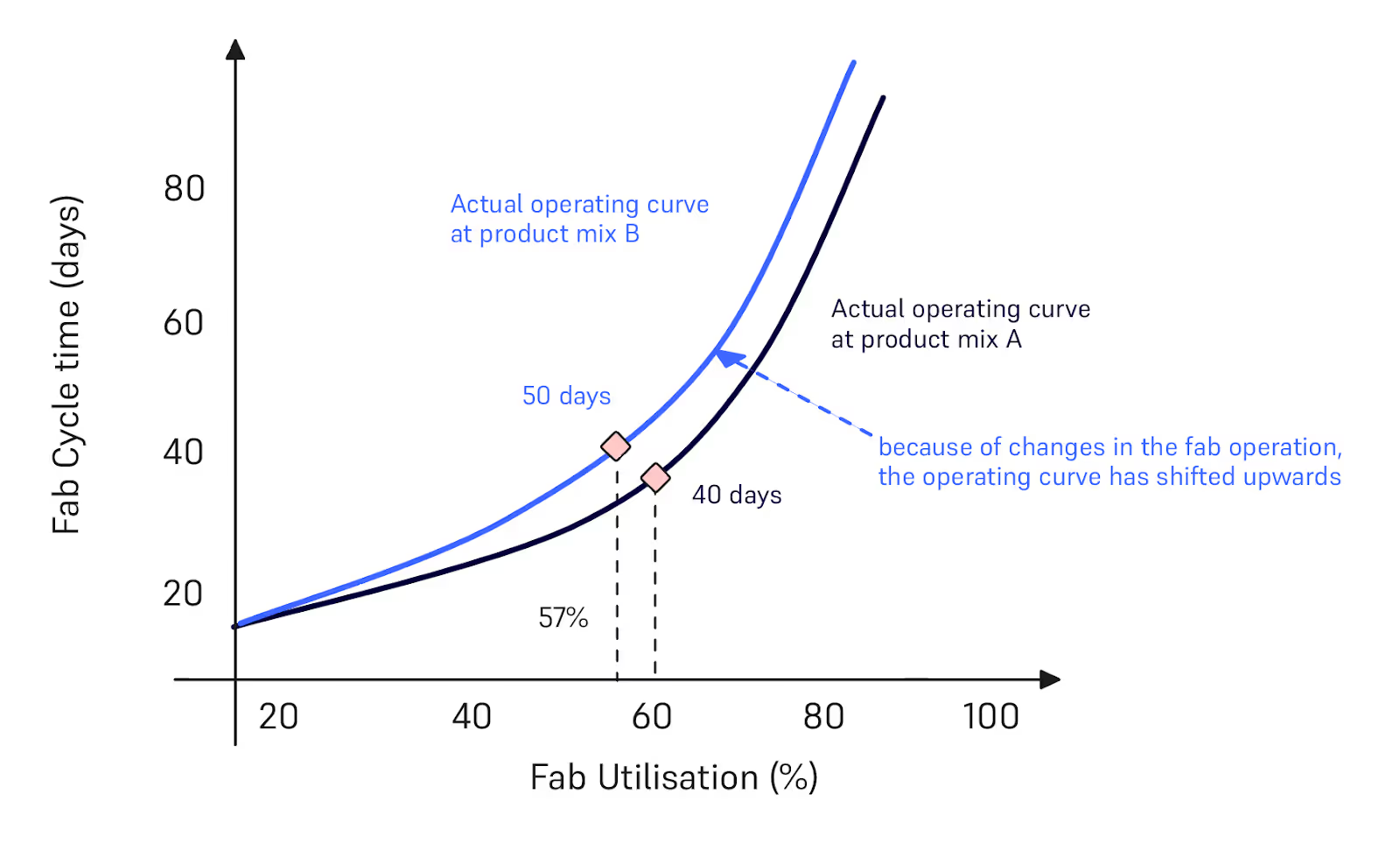

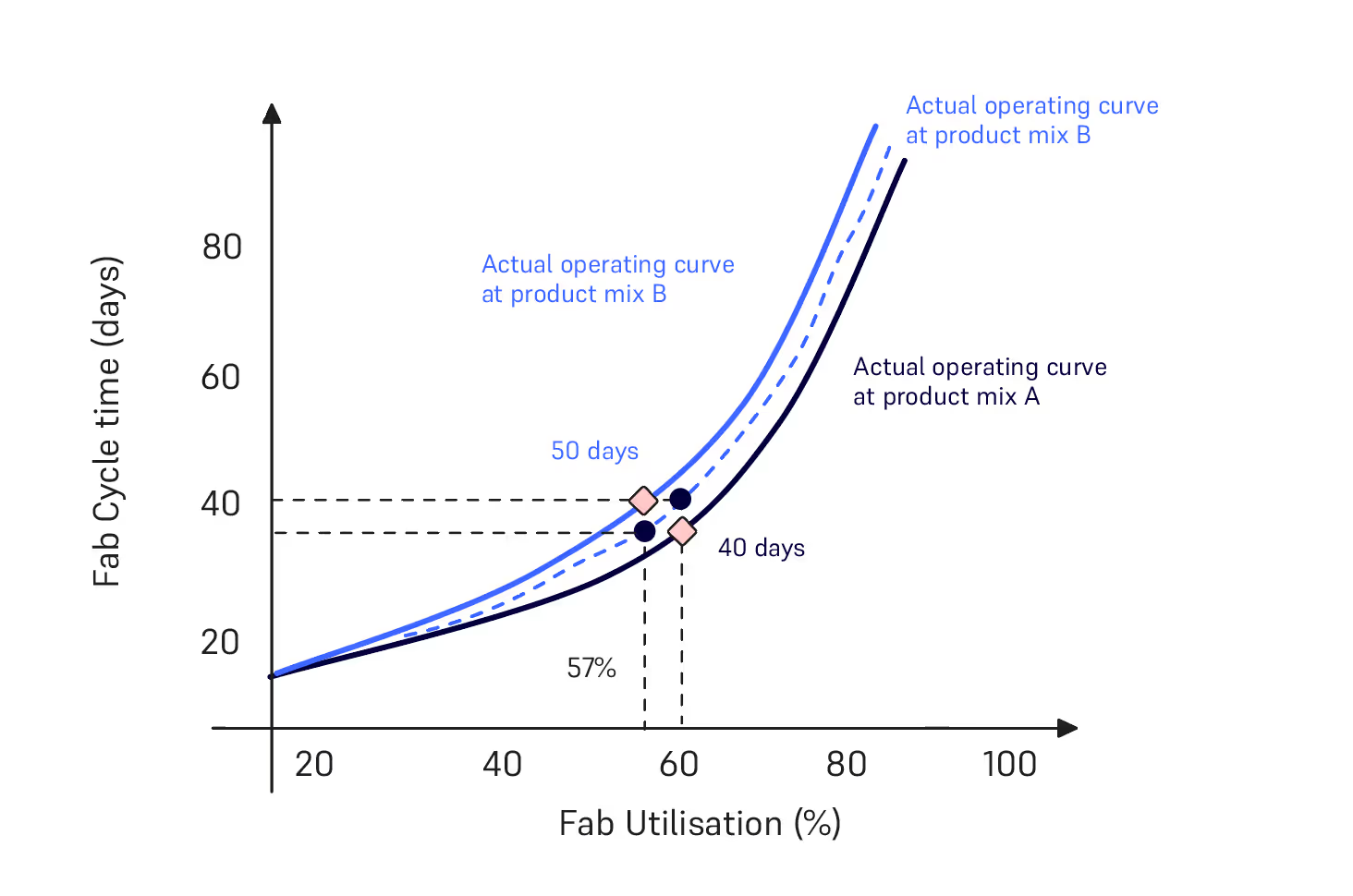

In Figure 9, we show an example in which a change from product mix A to product mix B shifts the cycle time versus factory utilization curve upward. This signifies an increased cycle time in the fab due to the change in product mix (and factory utilization was slightly reduced under these new conditions). An autonomous AI Scheduler, as described by Sebastian Steele in a recent blog, should be able to understand the different trade-offs. For example, in Figure 10, the AI Scheduler could sustain the same utilization as before (60%) with product mix B, but cycle time would then rise to 50 days (10 days more than with product mix A). Alternatively, the user can customize this trade-off so that the fab returns to the previous cycle time of 40 days with product mix B, at the cost of a lower utilization of 57%.
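The trade-off in Figure 10 can be explored by inverting a toy operating curve. Assuming CT = RPT × (1 + α·u/(1 − u)), solving for u gives u = (x − 1)/(α + x − 1) with x = CT/RPT: the highest utilization that still meets a cycle-time target. The parameters below are hypothetical and do not reproduce the figures quoted above; they only show the shape of the calculation.

```python
def cycle_time(u, rpt, alpha):
    """Toy operating curve: CT = RPT * (1 + alpha * u / (1 - u))."""
    return rpt * (1.0 + alpha * u / (1.0 - u))


def utilization_for_cycle_time(target_ct, rpt, alpha):
    """Invert the toy curve: highest utilization that still meets target_ct."""
    x = target_ct / rpt  # target x-factor
    if x <= 1.0:
        raise ValueError("target cycle time must exceed the raw process time")
    return (x - 1.0) / (alpha + x - 1.0)


RPT, ALPHA = 8.0, 2.0  # hypothetical curve for the new product mix
print(f"CT at 60% utilization: {cycle_time(0.60, RPT, ALPHA):.1f} days")
print(f"utilization for a 24-day target: {utilization_for_cycle_time(24.0, RPT, ALPHA):.0%}")
# CT at 60% utilization: 32.0 days
# utilization for a 24-day target: 50%
```

Both options lie on the same curve; choosing between them is a business decision about whether lost output or longer lead times cost more.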

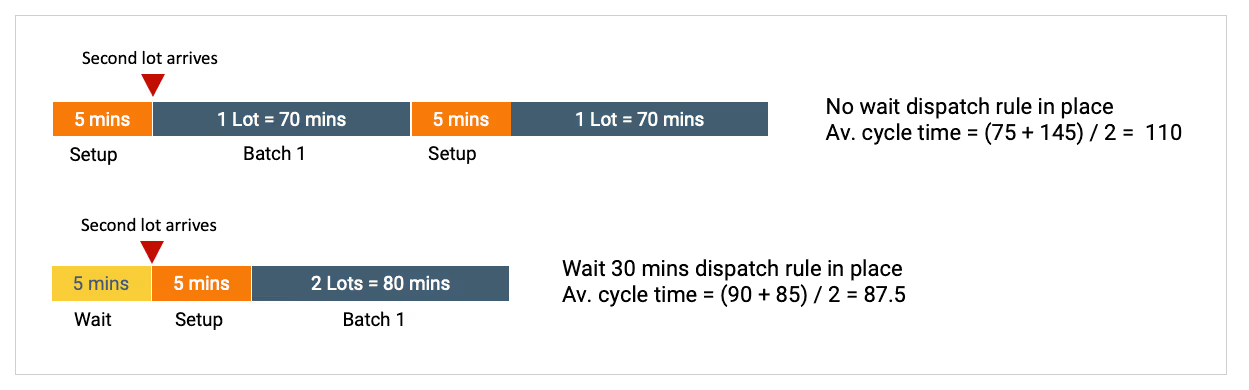

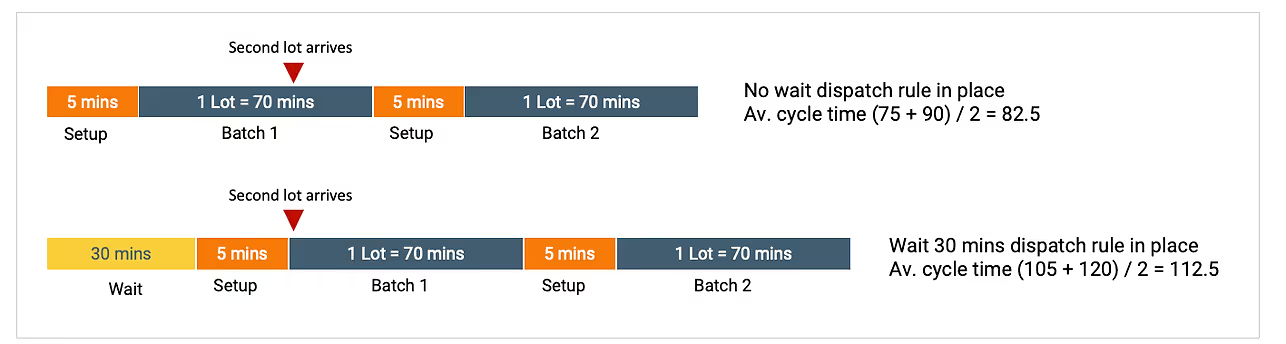

Trade-offs between different objectives at local toolsets may impact the fab cycle time. Consider the trade-offs in terms of batching costs versus cycle time. For instance, constructing larger batches might be crucial for high-cost operational tools such as furnaces in diffusion and implant. However, this approach could lead to an extended cycle time for the specific toolset and, consequently, an overall increase in fab cycle time.
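The batch-size trade-off can be quantified with a simple model. Assuming lots reach the furnace queue at evenly spaced intervals, at a rate of λ lots per hour, and a batch launches as soon as it holds B lots, the first lot waits (B − 1)/λ and the last waits nothing, so the average wait is (B − 1)/(2λ). Fewer, larger runs lower the operating cost per wafer, while the added wait feeds straight into cycle time. The arrival rate below is hypothetical.

```python
def avg_batch_wait_hours(batch_size, arrivals_per_hour):
    """Average time a lot waits for its batch to fill, assuming evenly spaced
    arrivals and that the batch launches as soon as it is full."""
    return (batch_size - 1) / (2.0 * arrivals_per_hour)


def furnace_runs_per_day(batch_size, arrivals_per_hour):
    """Number of furnace runs needed per day to process all arriving lots."""
    return 24.0 * arrivals_per_hour / batch_size


ARRIVALS = 2.0  # hypothetical lots per hour reaching the furnace queue
for b in (2, 4, 6):
    print(f"batch {b}: wait {avg_batch_wait_hours(b, ARRIVALS):.2f} h, "
          f"{furnace_runs_per_day(b, ARRIVALS):.0f} runs/day")
# batch 2: wait 0.25 h, 24 runs/day
# batch 4: wait 0.75 h, 12 runs/day
# batch 6: wait 1.25 h, 8 runs/day
```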

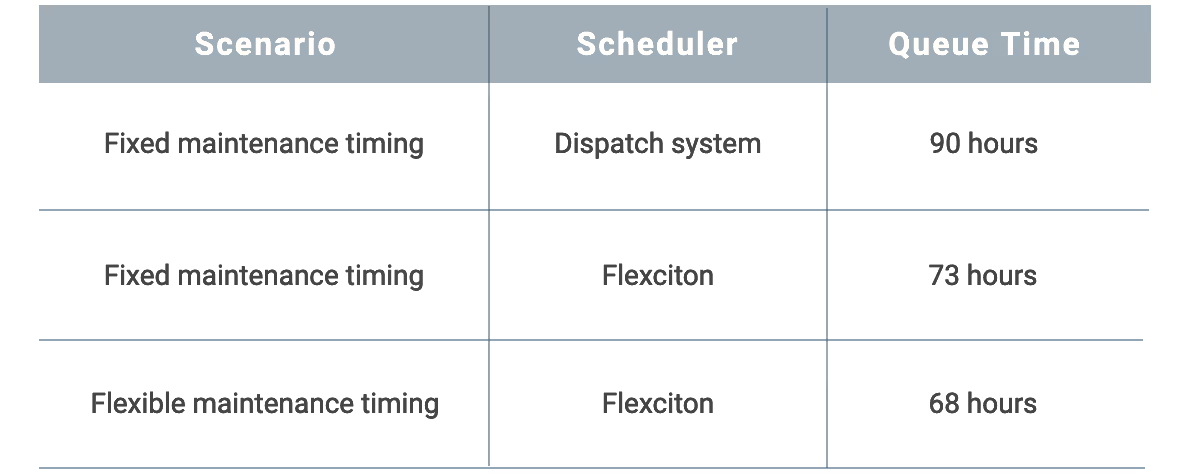

Tool availability and efficiency significantly affect cycle time, akin to the influence of product mix on operating curves. If tools experience reduced reliability over time, the operating curve may shift upward, resulting in a worse cycle time for the same utilization. While the scheduler cannot directly control tool availability, strategically scheduling maintenance and integrating it with lot scheduling can positively impact cycle time. A dedicated future article will delve into this topic in more detail.
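The effect of availability can be sketched by noting that the same demand must fit into fewer productive hours when tools are down more often. Assuming the toy operating curve CT = RPT × (1 + α·u/(1 − u)) and hypothetical parameters (an RPT of 10 days, α = 1 and 60% nominal utilization), lower availability raises the effective utilization and therefore the cycle time. The sketch ignores the extra variability that unplanned downtime also introduces, which would push the curve up further.

```python
def effective_utilization(nominal_utilization, availability):
    """Utilization of the time the tools are actually up."""
    return nominal_utilization / availability


def cycle_time(u, rpt, alpha):
    """Toy operating curve: CT = RPT * (1 + alpha * u / (1 - u))."""
    if u >= 1.0:
        return float("inf")  # demand exceeds available capacity
    return rpt * (1.0 + alpha * u / (1.0 - u))


for availability in (1.0, 0.9, 0.8):
    u = effective_utilization(0.60, availability)
    print(f"availability {availability:.0%}: effective u {u:.0%}, "
          f"CT {cycle_time(u, 10.0, 1.0):.1f} days")
# availability 100%: effective u 60%, CT 25.0 days
# availability 90%: effective u 67%, CT 30.0 days
# availability 80%: effective u 75%, CT 40.0 days
```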

Conclusion

The topic of cycle time has been enriched by the introduction of the AI Scheduler, bringing a paradigm shift in how we perceive and manage the dynamics of front-end wafer fabs. As highlighted in our exploration, these schedulers do more than just automate: they optimize. By understanding and predicting the nuances of operations, from tool utilization to lot prioritization, advanced AI schedulers provide a roadmap not just to manage but to optimize cycle time while considering alternative trade-offs. In future articles, we will talk about how maintenance scheduling and other operational aspects can be considered in a unified and autonomous AI platform that we believe would be the next revolution, after the innovations of the Arsenal of Venice, Ford and Toyota.

Author: Dennis Xenos, CTO and Cofounder, Flexciton

References

- [1] James P. Ignizio, 2009, Optimizing Factory Performance: Cost-Effective Ways to Achieve Significant and Sustainable Improvement 1st Edition, McGraw-Hill, ISBN 978-0-07-163285-0

- [2] Lean Production, 2023, TPM (Total Productive Maintenance), URL.

- [3] Ondrej Burkacky, Marc de Jong, and Julia Dragon, 2022, Strategies to lead in the semiconductor world, McKinsey Article, URL.

- [4] Philipp Neuner, Stefan Haeussler, Julian Fodor, and Gregor Blossey, 2023, Putting a Price Tag on Hot Lots and Expediting in Semiconductor Manufacturing. In Proceedings of the Winter Simulation Conference (WSC '22). IEEE Press, 3338–3348.

- [5] Robert Moss, Dennis Xenos, Tina O’Donnell, 2023, Deployment of an Advanced Photolithography Scheduler at Seagate Technology, IFORS News, Volume 18, Issue 1, ISSN 2223-4373, pp. 8–10, URL.

- [6] Robert Moss, 2022, Ever-decreasing circles: how iterative modelling led to better performance at Seagate Technologies, Euro 2022 Conference, Finland, URL.

- [7] Thomas Beeg, 2023, Impact of “time links” or controlled queue times, Factory Physics and Automation, URL.

- [8] Jamie Potter, 2023, Fab scheduling is now so complex that it needs next-generation intelligent software, Silicon Semiconductor Magazine, Volume 44, Issue 2, pp. 26-29, URL.

- [9] I. Konstantelos et al., 2022, "Fab-Wide Scheduling of Semiconductor Plants: A Large-Scale Industrial Deployment Case Study," 2022 Winter Simulation Conference (WSC), Singapore, pp. 3297-3308, doi: 10.1109/WSC57314.2022.10015364.

- [10] Félicien Barhebwa-Mushamuka, 2020, Novel optimization approaches for global fab scheduling in semiconductor manufacturing, PhD thesis, Université de Lyon, NNT: 2020LYSEM020, tel-03358300.

C is for Cycle Time [Part 1]


This two-part article aims to explain how we can improve cycle time in front-end semiconductor manufacturing through innovative solutions, moving beyond conventional lean manufacturing approaches. In part 1, we will discuss the importance of cycle time for semiconductor manufacturers and introduce the operating curve to relate cycle time to factory utilization. Part 2 will then explore strategies to enhance cycle time through advanced scheduling solutions, contrasting them with traditional methods.

Part 1

Why manufacturers care about cycle time

Cycle time, the time to complete and ship products, is crucial for manufacturers. James P. Ignizio, in Optimizing Factory Performance, noted that top-tier manufacturers like Ford and Toyota have historically pursued the same goal to outpace competitors: speed [1]. This speed is achieved through fast factory cycle times.

This emphasis on speed had tangible benefits: Ford, for instance, could afford to pay workers double the average wage while dominating the automotive market. The Arsenal of Venice's accelerated ship assembly secured Venice's status as a dominant city-state. Similarly, fast factory cycle times were central to Toyota’s successful lean manufacturing approach.

Semiconductor manufacturers, however, grapple with long cycle times that can often span 24 weeks [2]. This article focuses on manufacturing processes in front-end wafer fabs, as their contribution to the end product, such as a chip or hard drive disk head, spans several months. In contrast, back-end processes can be completed in a matter of weeks [3]. Nevertheless, the principles discussed apply equally to back-end facilities.

Why Short Cycle Times Matter for Front-end Wafer Fabs

- Revenue acceleration: The quicker products reach customers, the faster revenue streams in. However, quantifying the precise financial impact of cycle time is complex and beyond the scope of this article.

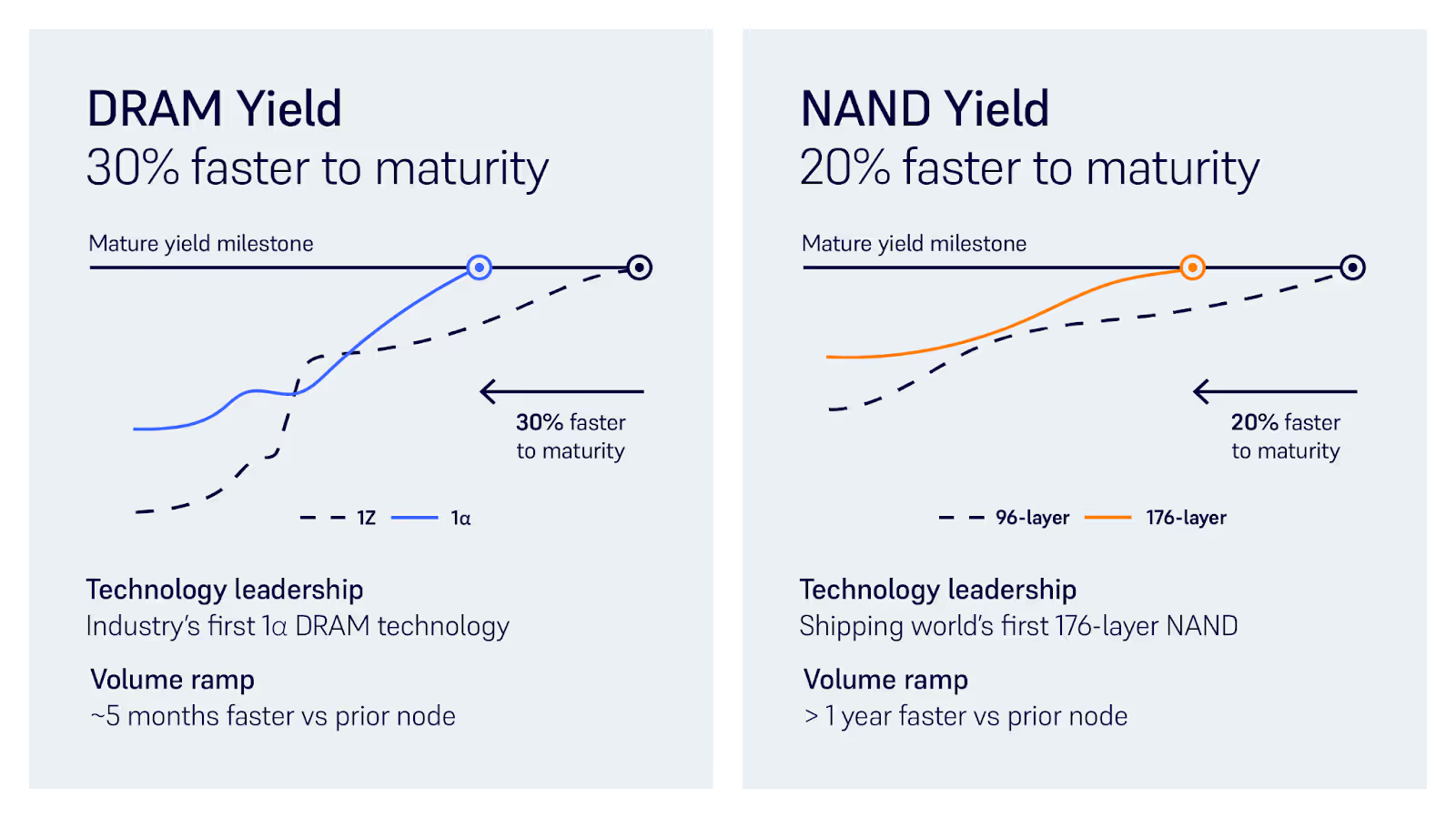

- Competitive advantage: Reducing the cycle time of R&D wafers accelerates product launches, and more than 20% of front-end fab production lines can be used for R&D wafer testing and iteration. Swift deliveries also enhance a company's reputation, leading to more contracts. At the 2022 Winter Simulation Conference, Micron highlighted their rapid advancements: maturing 30% quicker in DRAM (five months ahead of the previous node) and 20% quicker in NAND (a year faster than the prior node). See Figure 1.

- Agility in market responsiveness: A fab with shorter cycle times can swiftly adjust to market fluctuations, whether that is a surge in demand or a shift in product preferences, such as changes in product mix. It can also respond faster to changes in customer requirements.

- Risk mitigation: The shorter the cycle time, the quicker a fab can respond once defects have been detected, as rework takes less time.

- Inventory management: Lower cycle times can reduce the amount of work-in-progress (WIP) in buffers or racks (intermediate stock), as well as stock at the end of the production line. This not only frees up tied-up capital but also allows wafers to move faster through a fab with less WIP, as shown in the later section introducing the operating curve.
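The link between cycle time and WIP in the inventory point above is captured by Little's Law, a standard factory-physics identity for a stable system: average WIP = throughput × average cycle time. The sketch below assumes throughput stays constant and uses a hypothetical figure of 1,000 wafers per day; the function name is our own.

```python
def average_wip(throughput_per_day: float, cycle_time_days: float) -> float:
    """Little's Law for a stable system: average WIP = throughput x average cycle time."""
    return throughput_per_day * cycle_time_days


if __name__ == "__main__":
    throughput = 1_000.0  # hypothetical wafers completed per day, held constant
    before, after = average_wip(throughput, 40.0), average_wip(throughput, 30.0)
    print(f"WIP at 40 days: {before:,.0f} wafers; at 30 days: {after:,.0f} wafers")
    print(f"WIP reduction: {1 - after / before:.0%}")
```

At constant throughput, WIP scales one-for-one with cycle time, so a 25% shorter cycle time frees up 25% of the wafers (and the capital) sitting in the line.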

Achieving Predictable Cycle Time

Less variability in cycle time helps a wafer fab achieve better predictability in the manufacturing process. Predictability enables optimal resource allocation; for instance, operators can be positioned at fab toolsets (known as workstations) based on the workload anticipated from cycle time predictions. Recognizing idle periods of tools allows for improved maintenance scheduling, which can reduce unplanned maintenance. In Part 2, we'll explore how synchronizing maintenance with production can further shorten cycle times.
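Recognizing idle periods of a tool amounts to finding gaps in its schedule that are long enough to host a maintenance task. The minimal sketch below assumes a hypothetical list of busy intervals for one tool and a fixed maintenance duration; real maintenance planning would weigh further constraints, and the function and variable names are illustrative.

```python
from datetime import datetime, timedelta


def idle_windows(busy: list[tuple[datetime, datetime]],
                 horizon_start: datetime,
                 horizon_end: datetime,
                 min_length: timedelta) -> list[tuple[datetime, datetime]]:
    """Return idle gaps on one tool that are long enough to host a maintenance task.

    busy: scheduled (start, end) processing intervals; they may overlap or be unsorted.
    """
    windows = []
    cursor = horizon_start  # end of the latest busy period seen so far
    for start, end in sorted(busy):
        gap_end = min(start, horizon_end)  # never report time past the horizon
        if gap_end - cursor >= min_length:
            windows.append((cursor, gap_end))
        cursor = max(cursor, end)
    if horizon_end - cursor >= min_length:
        windows.append((cursor, horizon_end))
    return windows


if __name__ == "__main__":
    shift_start = datetime(2024, 1, 1, 6, 0)  # hypothetical shift start

    def at(hours: float) -> datetime:
        return shift_start + timedelta(hours=hours)

    busy = [(at(0), at(3)), (at(2.5), at(5)), (at(9), at(11))]
    for s, e in idle_windows(busy, at(0), at(14), timedelta(hours=2)):
        print(f"idle {s:%H:%M}-{e:%H:%M}")
```

Merging overlapping busy intervals through the running `cursor` keeps the scan to a single pass after sorting.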

Measuring and monitoring the cycle time improves overall fab performance

Measuring and monitoring cycle times helps identify deviations from the expected variability. This, in turn, promptly highlights underlying operational issues, enabling quicker resolution. It also helps industrial engineers pinpoint bottlenecks, enabling a focused analysis of root causes and prompt corrective action.
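One common way to detect deviations from expected variability is a control chart: observations outside the baseline mean ± k standard deviations are flagged for investigation. The sketch below uses that simple rule (k = 3 by default) on hypothetical step cycle times; it is one possible monitoring approach, not a method described by the source, and the function names are our own.

```python
from statistics import mean, stdev


def control_limits(baseline: list[float], k: float = 3.0) -> tuple[float, float]:
    """Lower and upper limits at mean +/- k sample standard deviations."""
    mu, sigma = mean(baseline), stdev(baseline)
    return mu - k * sigma, mu + k * sigma


def flag_deviations(baseline: list[float], recent: list[float],
                    k: float = 3.0) -> list[tuple[int, float]]:
    """Return (index, cycle time) for recent observations outside the limits."""
    low, high = control_limits(baseline, k)
    return [(i, ct) for i, ct in enumerate(recent) if ct < low or ct > high]


if __name__ == "__main__":
    # Hypothetical step cycle times in hours for one toolset.
    baseline = [4.1, 3.9, 4.3, 4.0, 4.2, 3.8, 4.1, 4.0]
    recent = [4.2, 6.5, 3.9]
    print(control_limits(baseline))
    print(flag_deviations(baseline, recent))
```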

Supply chain stakeholders usually fail to understand the impact of cycle time

In the semiconductor industry, cycle time plays a pivotal role in broader supply chain orchestration:

- A predictable cycle time informs suppliers when to provide fresh batches of raw materials.

- Furthermore, it influences the downstream processes of Assembly & Test Operations (back-end facilities). Back-end facilities with a cycle time of less than a week gain enhanced predictability, allowing for more effective allocation of capacity and resources.

- Predictable cycle times will also inform safety inventory levels, freeing capital and optimizing storage space.

Cycle time is a component of the total lead time of a product (which also includes procurement, transportation, etc.). Therefore, total lead time can be reduced by reducing the long cycle times in front-end wafer fabs. A reliable cycle time nurtures trust with suppliers, laying the foundation for favorable partnerships and agreements. In essence, cycle time is not just about production; it's the heartbeat of the semiconductor supply chain ecosystem.

Understanding how cycle time impacts product delivery times is essential for the semiconductor industry. Some analyses confuse cycle time with capacity; for example, the authors of a McKinsey article stated: “Even with fabs operating at full capacity, they have not been able to meet demand, resulting in product lead times of six months or longer” [4]. In fact, operating a fab at full capacity is itself a cause of longer product lead times, because the average manufacturing cycle time increases as utilization approaches 100%.

How to measure cycle time

Fab Cycle Time

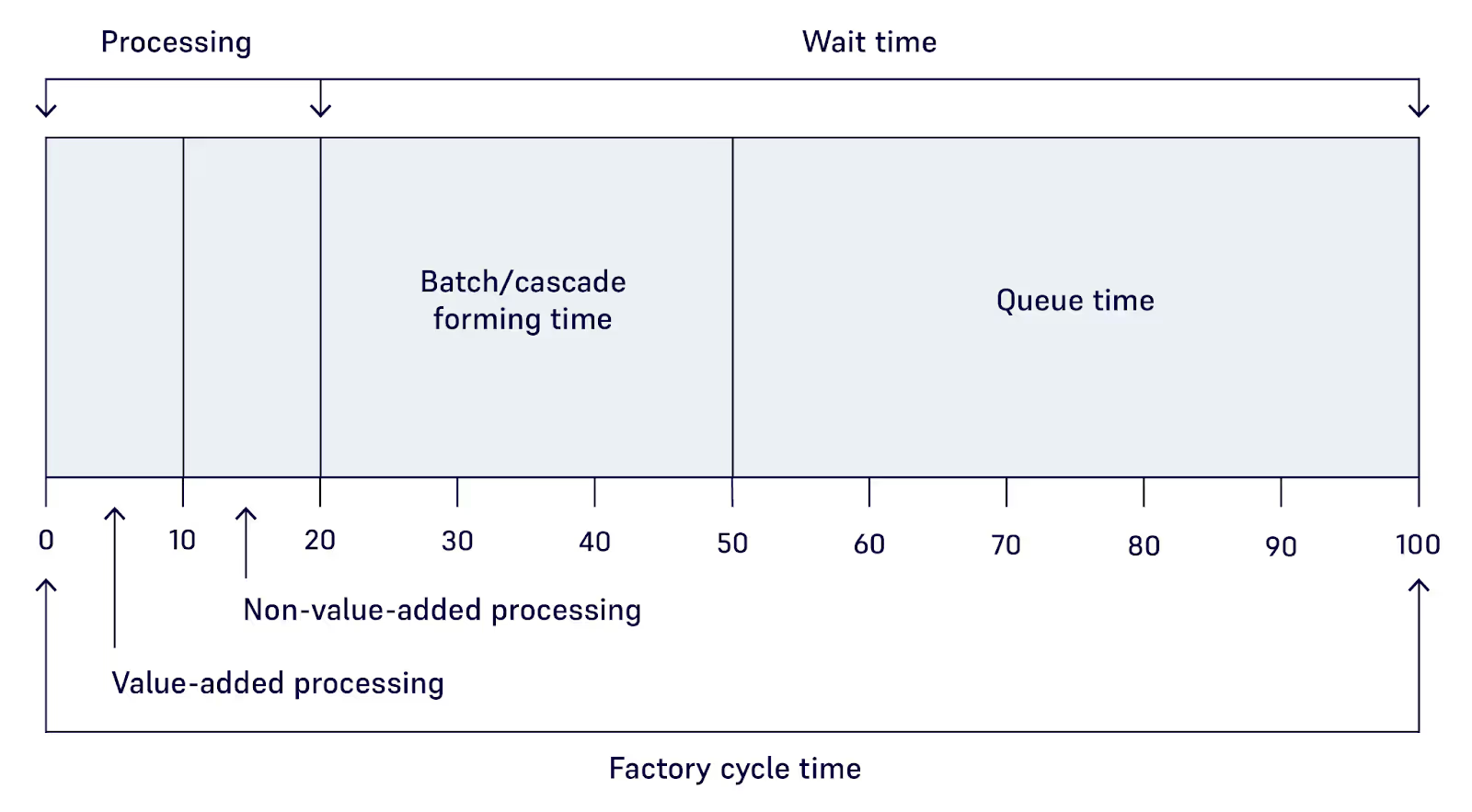

The fab cycle time metric defines the time required to produce a finished product in a wafer fab. The term cycle time is also used more generally for the time required to complete a specific process step (e.g. etching, coating) in a toolset, known as the process step cycle time. As Figure 2 shows, the fab cycle time consists of the following components:

- Value-added processing time: the time taken to transform or assemble the unfinished product (in our case, a wafer).

- Non-value-added processing time includes the time taken for inspection and testing, as well as the time for transferring the wafer between different steps.

- Time to prepare the products for processing: this refers to the time operators or tools require to form a batch, i.e. to select which lots should be bundled together for processing.

- Queue time: the time the unfinished wafer spends waiting to be processed because the required tool is busy, either processing another batch or undergoing maintenance.
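Written as a formula, the decomposition above reads as follows. The notation is our own, not from the source: S is the number of steps on a lot's route, each term is measured for step s, and transfer time between steps is counted in the non-value-added term, as in the list.

```latex
CT_s = t^{\mathrm{VA}}_s + t^{\mathrm{NVA}}_s + t^{\mathrm{prep}}_s + t^{\mathrm{queue}}_s,
\qquad
CT_{\mathrm{fab}} = \sum_{s=1}^{S} CT_s
```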

To measure and monitor cycle time, wafer fabs must track transactional data for each lot, capturing timestamps for events such as the start and completion of processing at a tool. This data is gathered and stored by a Manufacturing Execution System (MES). Such transactional information can be used for historical analysis of operations or for building models that forecast cycle times under different operational factors. This foundation is crucial for constructing the operating curve of the fab, which we discuss in the next section. As outlined in an article by Deenen et al., there are methods for developing data-driven simulations that accurately predict future cycle times [3].
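As a minimal sketch of turning such timestamps into cycle time components, the code below assumes a hypothetical per-step record with arrival, track-in and track-out times (not a real MES schema or API). It treats the time between arrival and track-in as waiting (queue plus batch formation), track-in to track-out as processing, and the gap between one step's track-out and the next step's arrival as transfer.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class StepRecord:
    """One lot's visit to one process step (hypothetical schema, not a real MES API)."""
    lot_id: str
    step: str
    arrive: datetime     # lot became available at the step's toolset
    track_in: datetime   # processing started on a tool
    track_out: datetime  # processing finished


def _hours(delta: timedelta) -> float:
    return delta.total_seconds() / 3600.0


def lot_cycle_time_breakdown(records: list[StepRecord]) -> dict[str, float]:
    """Split one lot's fab cycle time (hours) into waiting, processing and transfer time.

    'waiting' lumps queue time and batch-formation time, since both fall between
    arrival and track-in; inspection steps appear as ordinary steps.
    """
    if not records:
        raise ValueError("no records for this lot")
    recs = sorted(records, key=lambda r: r.arrive)
    waiting = sum(_hours(r.track_in - r.arrive) for r in recs)
    processing = sum(_hours(r.track_out - r.track_in) for r in recs)
    transfer = sum(_hours(nxt.arrive - prev.track_out) for prev, nxt in zip(recs, recs[1:]))
    total = _hours(recs[-1].track_out - recs[0].arrive)
    return {"waiting": waiting, "processing": processing,
            "transfer": transfer, "total": total}


if __name__ == "__main__":
    day = datetime(2024, 1, 1)  # hypothetical date
    records = [
        StepRecord("LOT1", "litho", day.replace(hour=8), day.replace(hour=10), day.replace(hour=11)),
        StepRecord("LOT1", "etch", day.replace(hour=11, minute=30), day.replace(hour=14),
                   day.replace(hour=15, minute=30)),
    ]
    print(lot_cycle_time_breakdown(records))
```

For a lot whose steps do not overlap, waiting + processing + transfer equals the total, which makes a useful sanity check on the extracted data.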

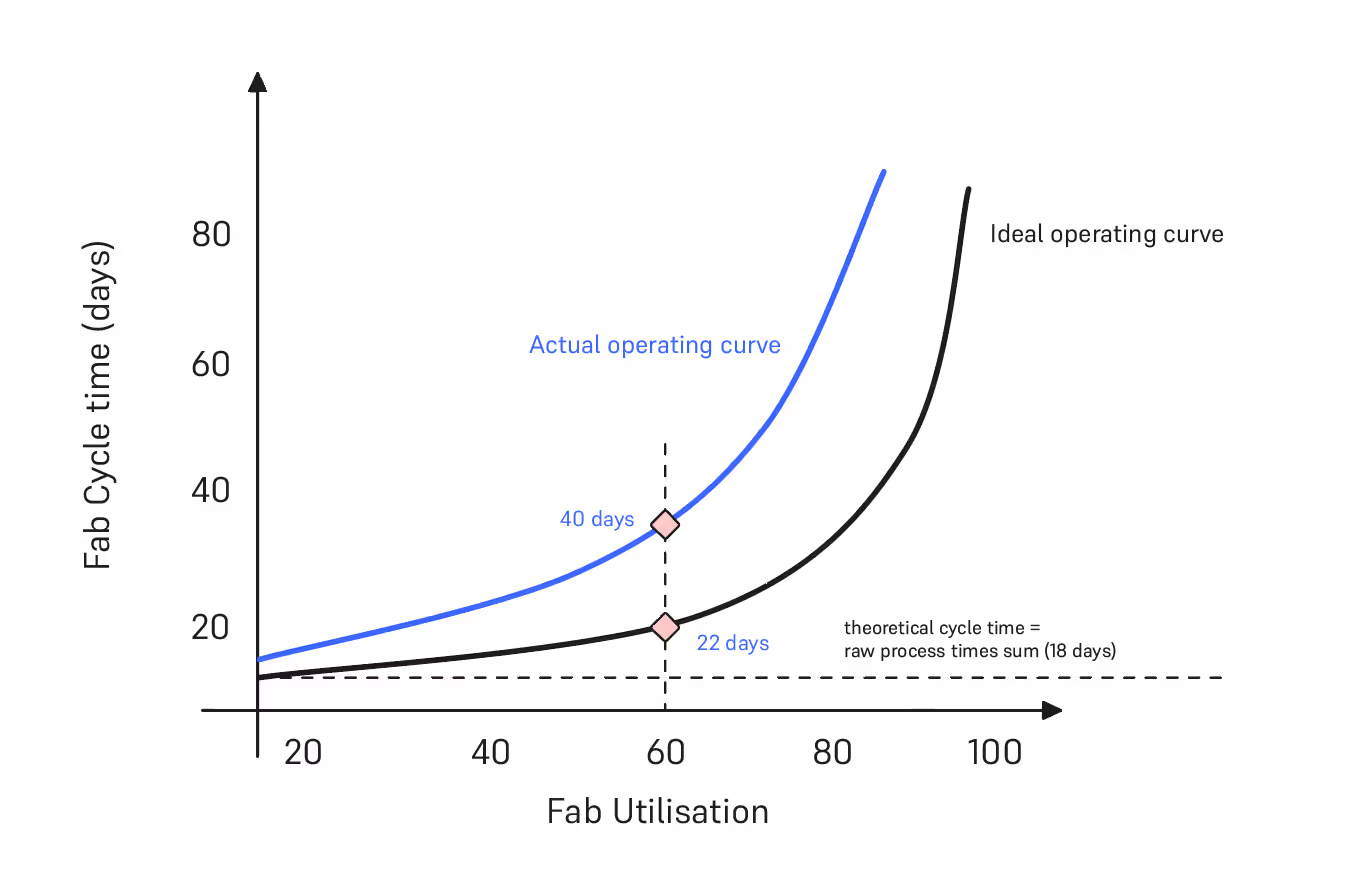

Fab operating curve: Fab Cycle Time versus Factory Utilization

As mentioned earlier, historical data can be used to generate the operating curve of a fab, which describes the cycle time as a function of factory utilization. Figure 3 shows the fab cycle time in days versus the fab utilization (%). The utilization of the fab is defined as the WIP divided by the total capacity of the fab.

We have found this method useful for understanding the fundamental principles of cycle time. The operating curve helps explain how factory physics impacts fab KPIs such as cycle time and fab utilization by showing changes in the operating points:

- The horizontal line represents the sum of raw process times (known as the theoretical cycle time), i.e. a scenario with zero queuing time in the fab. The gap between this line and the operating curves illustrates the impact of queuing time on cycle time as WIP increases, moving right on the x-axis: accumulating queuing time becomes inevitable as more WIP is introduced into the fab.

- The ideal operating curve represents the operation of a wafer fab assuming zero waste. It defines the minimum achievable cycle time for each fab load; the difference between this curve and the theoretical cycle time is due to real-life variability in the fab that cannot be eliminated completely, e.g. unplanned maintenance and inconsistent tool processing times.

- Cycle time tends to infinity as the fab approaches 100% utilization.

- The actual operating curve, cycle time versus factory utilization, represents the current fab’s operation considering all the inefficiencies such as excessive inventory, variability in operations, idle times, poor batching and rework.

- Both curves assume average or constant values for the fab's operational parameters, for example a fixed number of installed tools, an average availability for each tool and for labor, and a constant product mix.

- The actual operating curve describes the impact on cycle time of loading the fab with more WIP, as shown in Figure 4. Fab management could use this information to decide on the trade-off between cycle time and factory utilization. Higher fab utilization is associated with higher throughput (i.e. the number of wafers per unit of time).

In Figure 3, you can see that the current fab cycle time is 40 days when the factory utilization is at 60%. Theoretically, we could reduce the cycle time to 22 days. The difference between these two points is due to the inefficiencies that contribute to the factory cycle time as explained in the introduction of this section. In Part 2 of this blog, we will explore the various types of inefficiencies and examine how innovation can shift the operating curve to achieve lower cycle times while maintaining the same fab utilization.
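The numbers in Figure 3 can be used to calibrate a simple curve. The sketch below assumes a hypothetical one-parameter shape, CT(u) = T0 · (1 + α · u / (1 − u)), with T0 set to the 22-day theoretical cycle time and α chosen so the curve passes through the observed point (60%, 40 days). This shape is our illustrative assumption: it does not separate the ideal and actual curves, and it is not how Figure 3 was produced.

```python
def make_operating_curve(theoretical_ct: float, ref_util: float, ref_ct: float):
    """Return a hypothetical operating curve CT(u) = T0 * (1 + alpha * u / (1 - u)),
    calibrated so that it passes through one observed operating point."""
    if not 0.0 < ref_util < 1.0 or ref_ct <= theoretical_ct:
        raise ValueError("need 0 < ref_util < 1 and ref_ct > theoretical_ct")
    # Solve ref_ct = T0 * (1 + alpha * u / (1 - u)) for alpha at u = ref_util.
    alpha = (ref_ct / theoretical_ct - 1.0) * (1.0 - ref_util) / ref_util

    def curve(u: float) -> float:
        if not 0.0 <= u < 1.0:
            raise ValueError("utilization must be in [0, 1)")
        return theoretical_ct * (1.0 + alpha * u / (1.0 - u))

    return curve


if __name__ == "__main__":
    ct = make_operating_curve(theoretical_ct=22.0, ref_util=0.60, ref_ct=40.0)
    gap = ct(0.60) - 22.0
    print(f"Gap to theoretical cycle time at 60%: {gap:.1f} days "
          f"({gap / ct(0.60):.0%} of cycle time)")
    for u in (0.3, 0.6, 0.8, 0.9, 0.95, 0.99):
        print(f"utilization {u:.0%}: {ct(u):6.1f} days")
```

Calibrated this way, the gap to the theoretical 22 days is 18 days (45% of the 40-day cycle time), and the modelled cycle time climbs steeply beyond about 90% utilization, consistent with the curve's behaviour near 100% described above.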

Summary

In summary, cycle time is not merely a production metric but the very pulse of semiconductor manufacturing and the supply chain. It governs revenues, shapes market responsiveness, and is pivotal in driving innovation. By understanding its nuances, semiconductor companies can not only optimize their operations but also gain a competitive edge. And while we've only scratched the surface of its significance, the question remains: how can we further reduce and refine it? In Part 2 of C is for Cycle Time, we will explore innovative techniques that promise to revolutionize cycle time management in wafer fabs.

Author: Dennis Xenos, CTO and Cofounder, Flexciton

References

- [1] James P. Ignizio, 2009, Optimizing Factory Performance: Cost-Effective Ways to Achieve Significant and Sustainable Improvement 1st Edition, McGraw-Hill, ISBN 978-0-07-163285-0

- [2] Semiconductor Industry Association, 2021, Blog, URL.

- [3] P.C. Deenen, J. Middelhuis, A. Akcay et al., 2023, Data-driven aggregate modeling of a semiconductor wafer fab to predict WIP levels and cycle time distributions, Flexible Services and Manufacturing Journal, doi: 10.1007/s10696-023-09501-1.

- [4] Ondrej Burkacky, Marc de Jong, and Julia Dragon, 2022, Strategies to lead in the semiconductor world, McKinsey Article, URL.

Security and the Cloud: Should We Really Keep Everything On-prem?


Welcome to a nuanced exploration of the key considerations surrounding cloud adoption in wafer fabrication. Whether you are reading sceptically, are uncertain about the merits of cloud integration, or are concerned about lagging behind competitors, this blog endeavours to shed light on the key areas of relevance.

Introduction

For those reading this blog, chances are that you (or perhaps your boss) remain unconvinced about the merits of cloud adoption, yet are open to participating in the ongoing debate. Alternatively, you might be concerned about falling behind industry peers, a concern perhaps heightened by recent security incidents such as the hacking of X-Fab. By the end of this short article, you will have gained valuable insights into the key areas of cloud security, which we hope will contribute to a more informed decision-making process.

Firstly, this is about using a cloud service, not running your own systems in the cloud. There are good arguments for that too, but that’s not what this article is about. So, the areas worth exploring in this context include:

- Security - A paramount concern behind fabs' reluctance to embrace cloud services, often accompanied by apprehension about entrusting sensitive data to cloud platforms.

- Type of service - If you’re running it on-premise, you’re probably managing it. Would you prefer to be buying software or a service?